6 Enterprise Kubernetes Takeaways from KubeCon San Diego

We just returned from KubeCon + CloudNativeCon 2019 held in sunny San Diego on Nov 18-21. KubeCon San Diego 2019 drew more than 12,000 attendees, a 50% increase since the last event in Barcelona just 6 months ago.

While at the event, we interacted with more than 1,800 attendees and had more than 1,300 attendees complete our survey at the booth to give us an insight into their use of Kubernetes.

Here are the six most important takeaways from the survey results:

1) Massive Increase in Planned Scale of Kubernetes Deployments

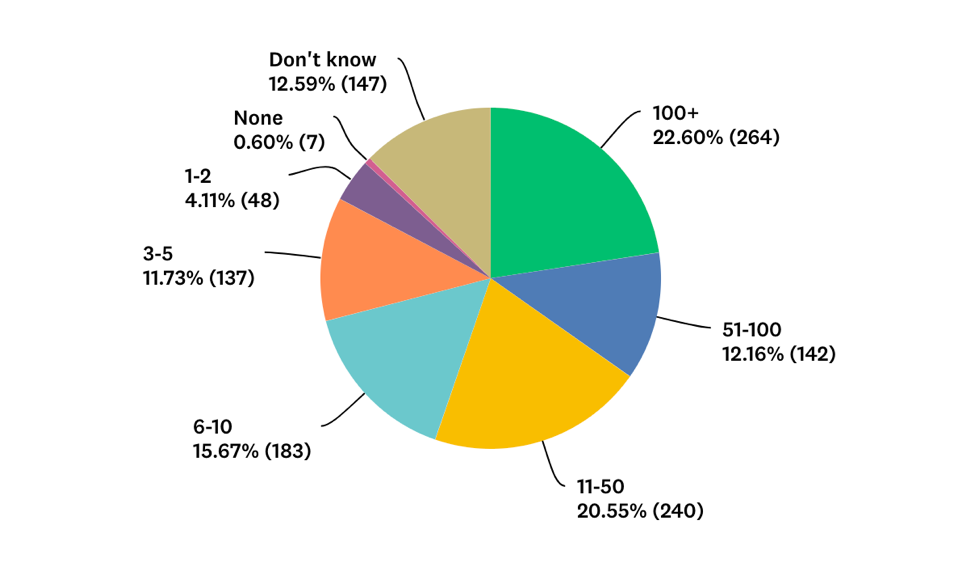

Kubernetes is moving to the mainstream given the scale of Kubernetes clusters that companies plan to run. More than 406 of the survey respondents said that they will be running 50 or more clusters in production within the next 6 months! That's an astonishing number of companies planning to run Kubernetes at such a massive scale.

What's more, in some of our in-person conversations, we discovered that companies are running hundreds of nodes in just one or two clusters. The scale is both in terms of the number of nodes as well as the number of clusters.

2) Monitoring, Upgrades, and Security Patching Are the Biggest Challenges

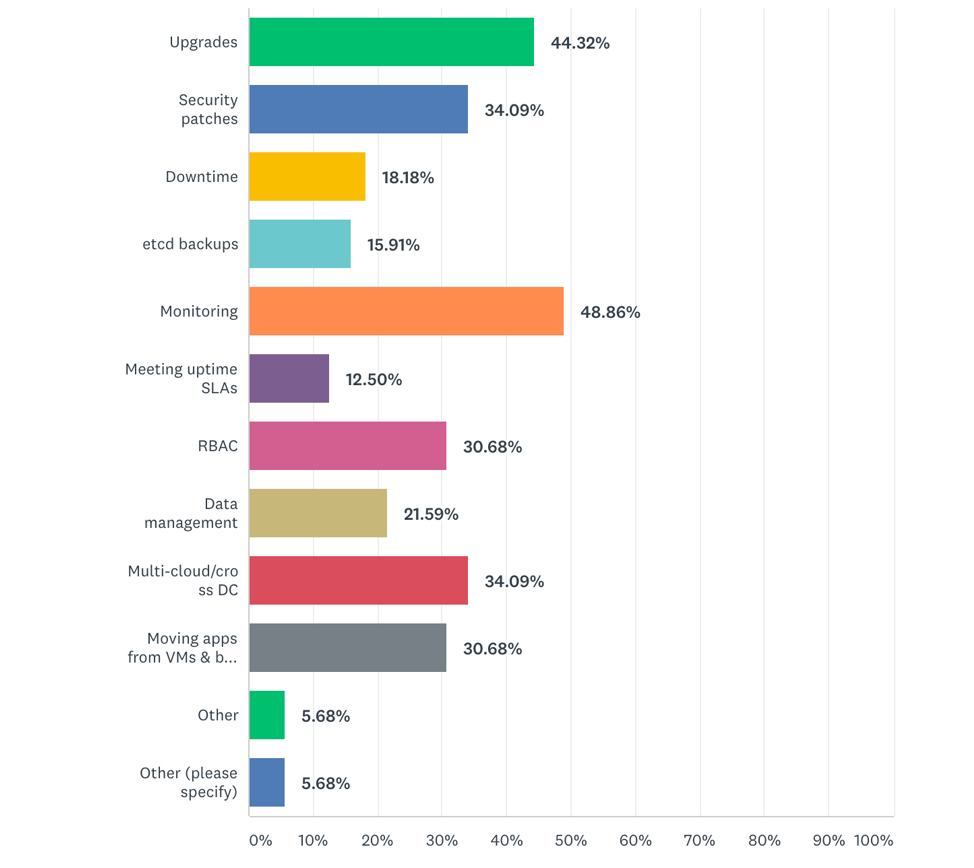

Running at a massive scale presents unique challenges. It's pretty easy to deploy one or two Kubernetes clusters for proofs of concept or development/testing. Managing them reliably at scale in production is quite another matter, especially when you have dozens of clusters and hundreds of nodes.

Kubernetes' complexity and abstraction are necessary and are there for good reasons, but they also make it hard to troubleshoot when things don't work as expected. No wonder, then, that 48.86% of the survey respondents indicated that monitoring at scale is their biggest challenge, followed by upgrades (44.3%) and security patching (34.09%).

DevOps, Platform Ops, and IT Ops teams need observability, metrics, logging, and a service mesh, and they need to get to the bottom of failures quickly to ensure uptime and meet SLAs. The rise of Prometheus, fluentd, and Istio is a direct response to these needs. However, these teams now also need to keep up with these and other CNCF projects, which continue to evolve at a rapid pace.

3) 24×7 Support and a 99.9% Uptime SLA Are Critical for Large Scale Deployments

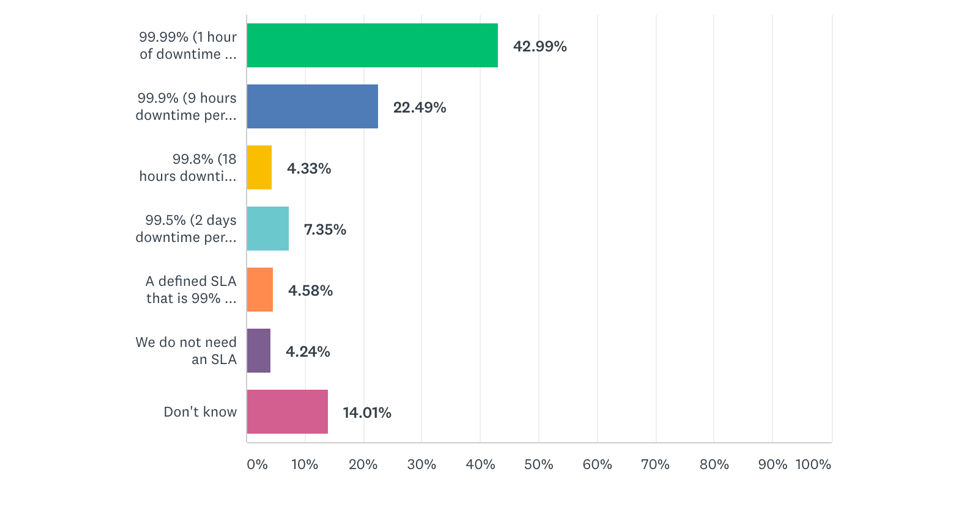

The scale at which companies are running Kubernetes is a good proxy for a significant number of production applications that are serving end-users. 65% of the respondents indicated their end-users require 99.9% or higher uptime.
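To put the 99.9% figure in perspective, here is a rough sketch of how an availability target translates into a downtime budget. The function name and the 365-day year and 30-day month are our own simplifying assumptions for illustration; they are not part of the survey.

```python
# Rough downtime budget for a given availability target.
# Assumption: a 365-day year (8,760 hours), ignoring leap years.
HOURS_PER_YEAR = 365 * 24


def downtime_budget_hours(availability_pct: float,
                          period_hours: float = HOURS_PER_YEAR) -> float:
    """Return the hours of downtime allowed over the given period."""
    return period_hours * (1 - availability_pct / 100)


if __name__ == "__main__":
    for target in (99.0, 99.9, 99.99):
        yearly_hours = downtime_budget_hours(target)
        # Assumption: a 30-day month (720 hours), converted to minutes.
        monthly_minutes = downtime_budget_hours(target, period_hours=30 * 24) * 60
        print(f"{target}% uptime: {yearly_hours:.2f} h/year, "
              f"{monthly_minutes:.1f} min per 30-day month")
```

At 99.9%, that works out to under nine hours of downtime per year, or roughly 43 minutes in a 30-day month, and that budget has to cover planned work such as upgrades and patching as well as unplanned incidents.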

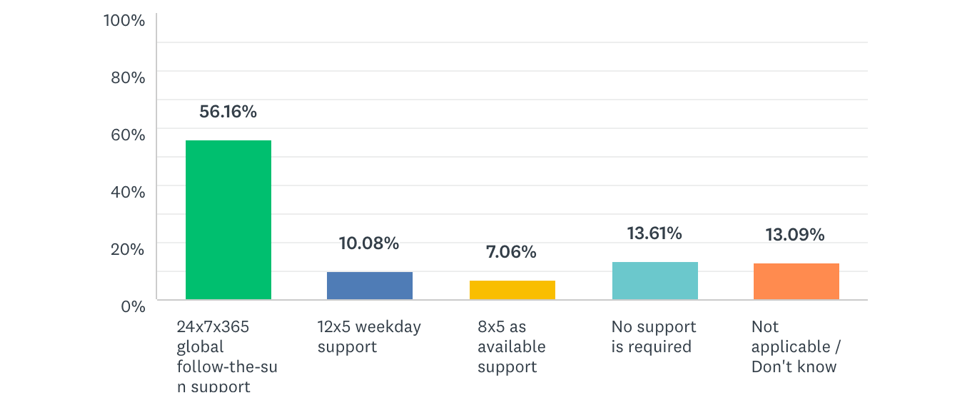

DevOps, Platform Ops, and IT Ops teams need to architect the entire solution from the bare metal up (especially in on-premises data centers or edge environments) so they can confidently ensure workload availability while performing complex day-2 operations such as upgrades, security patching, and troubleshooting infrastructure failures at the network, storage, compute, operating system, and Kubernetes layers. All of this requires proactive support that ensures quick responses to production incidents. Not surprisingly, then, 56.16% of the respondents said that their organization needs to provide a 24x7x365, follow-the-sun support model.

4) Kubernetes Edge Deployments Are Growing

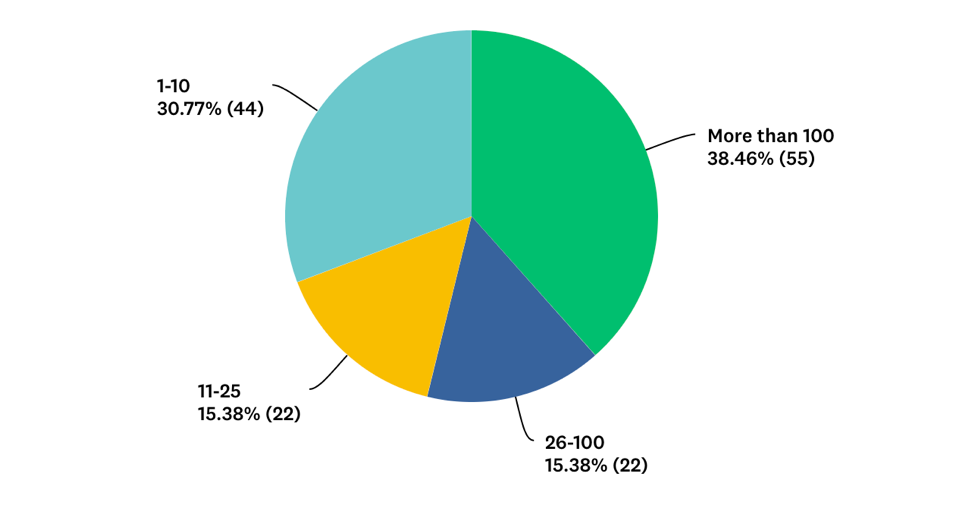

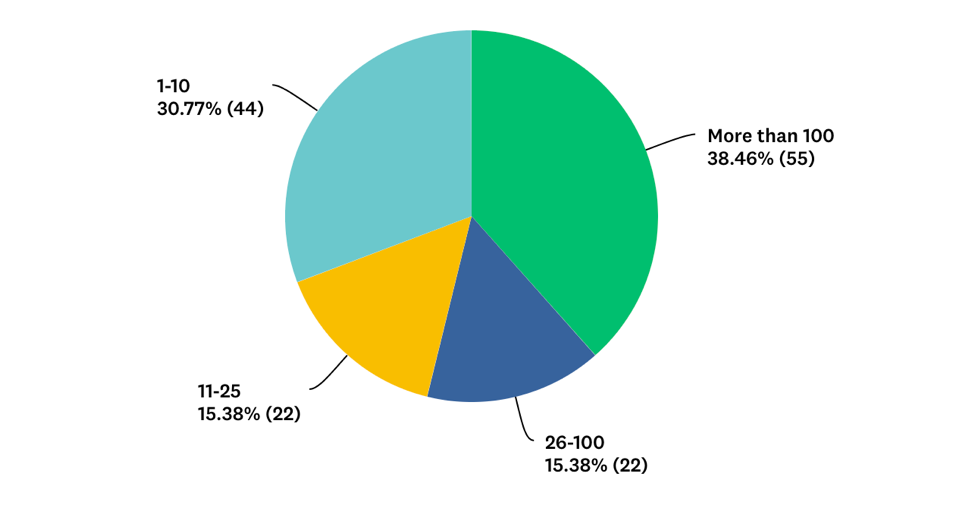

We wanted to find out if Kubernetes is being considered for edge computing use cases. 145 survey respondents indicated they have an edge deployment using Kubernetes. The surprising thing was the geographical scale of these deployments. 38.5% responded that they are running Kubernetes in 100 or more locations!

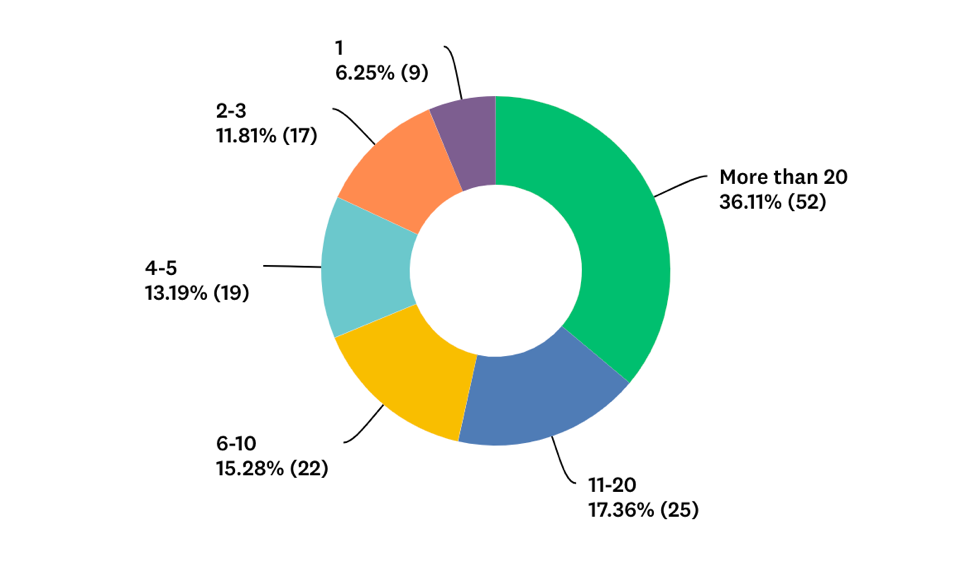

The survey data also indicated that these edge locations could be characterized as "thick" edges, since they run a significant number of servers. Almost 47% of the respondents said that each of these locations runs 11 or more servers.

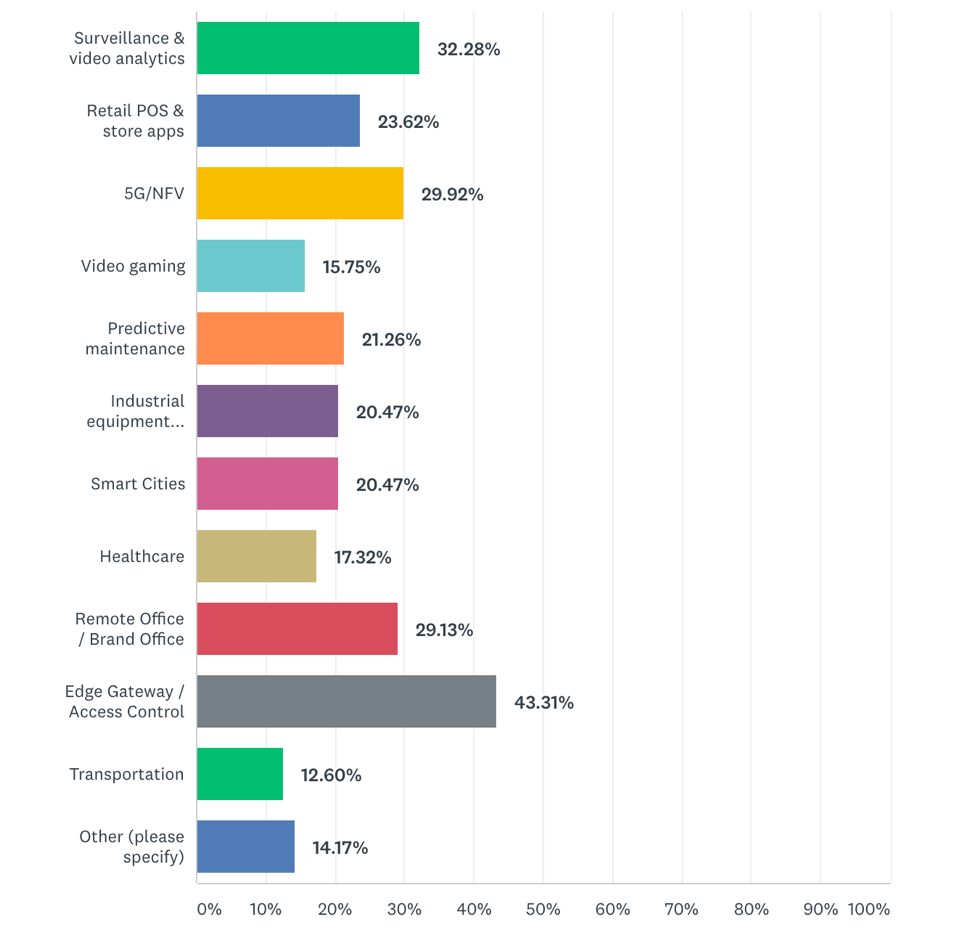

It's quite a challenge to scale dozens or hundreds of pseudo-data centers that need to be managed with low or no touch, usually with no staff and little access. These scenarios included edge locations owned by the company (e.g. retail stores) and, in the case of on-premises software companies, their end customers' data centers. Given the large scale, traditional data center management processes won't apply. Edge deployments should support heterogeneity of location, remote management, and autonomy at scale. They should also enable developers and integrate well with the public cloud and/or core data centers.

The survey also highlighted the diversity of use cases being deployed. The top two applications being deployed at the edge are Edge gateways/access control (43.3%) and Surveillance and video analytics (32.28%).

5) Multi-Cloud Deployments Are The New Normal

A majority of respondents indicated that they run Kubernetes on both on-premises and public cloud infrastructure. This is further evidence that multi-cloud or hybrid cloud deployments are becoming the new normal. With the number of different, mixed environments (private/public cloud as well as the edge) projected to keep growing, avoiding lock-in and ensuring portability, interoperability, and consistent management of K8s across all types of infrastructure will become even more critical for enterprises in the years ahead.

6) Access to Kubernetes Talent for a DIY Approach Remains Challenging

An unusual aspect of KubeCon is the large booths that are operated by major enterprises (e.g., Capital One) just to recruit Kubernetes talent. Walmart made a recruitment pitch in their keynote session. This year there were 15 major enterprises in CNCF's "end user sponsor" category who are looking for talent.

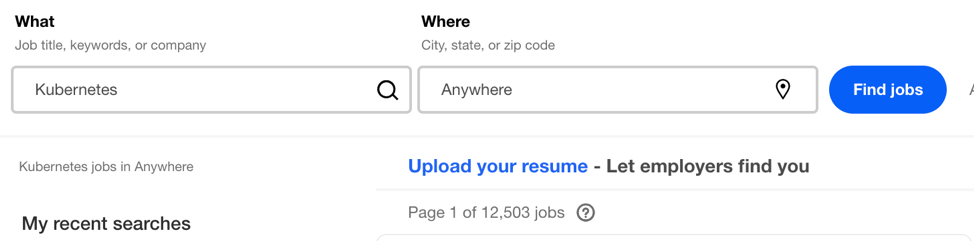

A quick search on Indeed.com shows 12,503 Kubernetes jobs (up from 9,934 just 6 months ago!).
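For a sense of how fast that is, the growth rate implied by the two Indeed.com counts above can be worked out in a few lines. This is only a back-of-the-envelope sketch; the function and variable names are ours.

```python
def percent_change(old: int, new: int) -> float:
    """Return the percentage change from old to new."""
    return (new - old) / old * 100


# Job counts quoted from the Indeed.com search above.
jobs_six_months_ago = 9_934
jobs_now = 12_503

if __name__ == "__main__":
    growth = percent_change(jobs_six_months_ago, jobs_now)
    print(f"Job listings grew by {growth:.1f}% in six months")
```

That is an increase of roughly a quarter in just six months.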

Many companies are looking to hire Kubernetes talent, but it continues to be a challenge to find the requisite skills.

Summary

It's astonishing to see the massive scale of Kubernetes deployments. The companies at KubeCon that are spearheading these large-scale Kubernetes deployments are positioning themselves to rapidly deliver superior customer experiences, giving them a distinct competitive edge in a fast-moving digital economy. Not only are they using Kubernetes in their core data centers and the public cloud, but they are also deploying a staggering number of edge computing use cases in vertical industries ranging from retail and manufacturing to automotive and 5G rollouts.

Many mainstream enterprises will follow in their footsteps and see success with Kubernetes in production in the very near future. However, new challenges arise at scale that DevOps, Platform Ops, and IT Ops teams at major enterprises need to tackle going forward, especially when their end-users demand 99.9%+ uptime and round-the-clock support in mission-critical production environments.

The complexity of Kubernetes makes it difficult to run and operate at scale, particularly in multi-cloud and hybrid cloud environments that span on-premises data centers, edge locations, and public cloud infrastructure. Hiring and retaining the talent to run Kubernetes operations is getting increasingly difficult.
