NGINX With Eureka Instead of Spring Cloud Gateway or Zuul

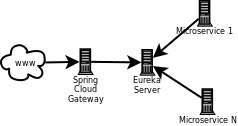

For one of my clients I have a Eureka-based microservices horizontally scaled architecture. As an entry point of the project, I was first using Zuul, and later on, I replaced it with Spring Cloud Gateway. The simplified architecture of the project looks like this:

Both Zuul and Spring Cloud Gateway integrated flawlessly with Eureka service discovery. However, the whole project was hosted on AWS EC2, where you pay for the virtual machines you use, so the smaller the instances, the lower the price.

After some tuning, I managed to run Spring Cloud Gateway on an AWS t3.medium instance. That is four times bigger than the typical instance used for the project, an AWS t3.micro. Also, it turns out that there is a memory leak in Spring Cloud Gateway (here, here, and here). Some of the reported problems are already closed; nevertheless, I didn’t manage to get my Spring Cloud Gateway to run in production without a memory leak.

Its memory consumption increased slowly over time and eventually ended in an out-of-memory (OOM) error. I had built a very resilient system that could bring the fallen Spring Cloud Gateway back up. However, the cost of this was a few minutes of downtime every two weeks.

Maybe the problem was with me, and I was simply unable to resolve the OOM errors properly. However, instead of digging deeper into the memory leak, I decided to take an alternative approach which, if I was right, would also solve the ‘4x’ problem (four times bigger instances for Spring Cloud Gateway).

The question I asked myself was: what if I could use NGINX instead of Spring Cloud Gateway? According to this article, NGINX is far ahead of both Spring Cloud Gateway and Zuul on small AWS instances. Zuul has a slight advantage over NGINX, but only on very large AWS instance types, far bigger than the instance I was using for Spring Cloud Gateway.

Using NGINX instead of Spring Cloud Gateway sounds wonderful. However, there was one "insignificant" problem there: NGINX wasn’t made to work with Eureka service discovery.

This looked like a deal-breaker, so using NGINX instead of Spring Cloud Gateway seemed great but not possible for the moment.

It took me a while to figure out how to use NGINX together with Eureka service discovery.

The solution started to take shape after I heard about the NGINX Hot Reload feature. According to the NGINX Documentation:

Once the master process receives the signal to reload configuration, it checks the syntax validity of the new configuration file and tries to apply the configuration provided in it. If this is a success, the master process starts new worker processes and sends messages to old worker processes, requesting them to shut down. Otherwise, the master process rolls back the changes and continues to work with the old configuration. Old worker processes, receiving a command to shut down, stop accepting new connections and continue to service current requests until all such requests are serviced. After that, the old worker processes exit.

In other words, what if I wrote a service that can read the configuration of microservices from the Eureka service discovery and can translate it to the NGINX configuration? Then, I just had to add a Cron Job or periodic task, which would reload the NGINX configuration with the latest microservices state from the Eureka service discovery.

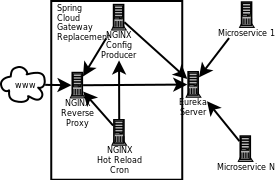

Now, the simplified project infrastructure should look this way:

Here is the explanation of the scheme above:

1. NGINX Config Producer should generate an initial NGINX configuration.

2. NGINX (NGINX Reverse Proxy) will be started with the initial configuration generated from the NGINX Config Producer.

3. After a certain period of time, the NGINX Hot Reload Cron will ask the NGINX Config Producer to regenerate the NGINX configuration. Then, it will reload the NGINX with the newly generated configuration.

It is that simple!
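
The cycle described above can be sketched in a few lines of Java. This is only an illustration under stated assumptions, not the code used in production: the class `ReloadCycle` and its helper `toFile` are hypothetical names, the NGINX Config Producer is represented by a `Supplier<String>`, and the actual reload command by a `Runnable`.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.function.Consumer;
import java.util.function.Supplier;

/** Hypothetical sketch of one "regenerate and reload" cycle. */
final class ReloadCycle {
    private final Supplier<String> produceConfig; // stands in for the NGINX Config Producer
    private final Consumer<String> writeConfig;   // stores the config where NGINX reads it
    private final Runnable reloadNginx;           // stands in for "nginx -s reload"

    ReloadCycle(Supplier<String> produceConfig, Consumer<String> writeConfig, Runnable reloadNginx) {
        this.produceConfig = produceConfig;
        this.writeConfig = writeConfig;
        this.reloadNginx = reloadNginx;
    }

    /** Generates the latest configuration, writes it, then asks NGINX to reload. */
    void runOnce() {
        String config = produceConfig.get();
        writeConfig.accept(config);
        reloadNginx.run();
    }

    /** Writes the configuration to a file, wrapping the checked exception. */
    static Consumer<String> toFile(Path file) {
        return config -> {
            try {
                Files.writeString(file, config);
            } catch (IOException e) {
                throw new UncheckedIOException(e);
            }
        };
    }

    public static void main(String[] args) {
        Path file = Path.of(System.getProperty("java.io.tmpdir"), "default.conf");
        ReloadCycle cycle = new ReloadCycle(
                () -> "upstream demo { server 127.0.0.1:8080; }", // placeholder config text
                toFile(file),
                () -> System.out.println("reload requested"));     // a real setup would call nginx here
        cycle.runOnce(); // a scheduler or cron job would repeat this call
    }
}
```

Keeping the three collaborators behind small interfaces makes the cycle easy to test without a running NGINX or Eureka.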

So, I’ve done it. Now my "Spring Cloud Gateway Replacement" is running on an AWS t3.micro instance (four times smaller than the previous Spring Cloud Gateway instance). So far, there are no signs of memory leaks or any other problems.

This was pretty much the story about the "Spring Cloud Gateway Replacement". If you are curious about the implementation(s) you can continue reading about the details :)

Implementation Details

I’ve created a few sample projects in order to show how the system really works. The projects are:

Vanilla Eureka Service Discovery. It is also available as a Docker image here.

Demo microservice that connects to the Vanilla Eureka Service Discovery. It is available as a Docker image here.

Demo Spring Cloud Gateway Replacement. It contains the NGINX Config Producer and a script that starts NGINX and runs the periodic task that reloads the NGINX configuration.

The implementation of the sample projects is slightly different from the real implementation used in the production environment. It is simplified in order to show the main idea of how to use NGINX with Eureka service discovery.

NGINX Config Producer

Here is the GitHub implementation of the producer.

In order to implement the NGINX Config Producer, you need a few things:

1. A Eureka client.

2. An NGINX config template.

3. You should make the NGINX Config Producer as thin as possible, because I have decided to run it on the same instance as NGINX.

Eureka Client

Anyone can easily plug in the full-blown Eureka Client implementation via the Spring Boot init page. However, given the third point above (keeping the producer thin), I was willing to sacrifice some Eureka Client features in favor of a lower footprint for the "NGINX Config Producer" service.

So, I decided to use my Simple Netflix Eureka client using Quarkus, which I developed earlier during a Spring Boot to Quarkus migration of one of my microservices. The client only has two features, registerApp and getNextServer, but it turns out that they are enough for me.

An additional benefit is that using the Quarkus framework instead of Spring Boot results in an incredibly small application footprint (less than 16 MB of memory).

NGINX Config Template

You just need an NGINX configuration file with a few placeholders to replace. The placeholders stand for the NGINX ports and for the upstream servers retrieved from the Eureka service discovery.

The default.conf file can be found here. Let me explain some parts of the file.

Upstream Servers

```nginx
upstream demo {
    __DEMO_MICROSERVICE_SERVERS__
}
```

This is where all the microservice servers retrieved from the Eureka client go. After the replacement, it should look like this:

```nginx
upstream demo {
    server 172.94.14.97:24465;
    server 172.94.14.97:24461;
    server 172.94.14.97:24463;
    server 172.94.14.97:24462;
    server 172.94.14.97:24464;
}
```
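
The substitution itself is plain string work. The sketch below shows one way it could be done; `UpstreamTemplate`, `serverLines`, and `render` are hypothetical names chosen for illustration, and only the placeholder name is taken from the template above. It deliberately does not handle an empty server list, which the next section covers.

```java
import java.util.List;
import java.util.stream.Collectors;

/** Hypothetical helper; only the placeholder name comes from the template. */
final class UpstreamTemplate {
    static final String PLACEHOLDER = "__DEMO_MICROSERVICE_SERVERS__";

    /** Turns "host:port" strings into NGINX "server" directives, one per line. */
    static String serverLines(List<String> hostPorts) {
        return hostPorts.stream()
                .map(hostPort -> "    server " + hostPort + ";")
                .collect(Collectors.joining("\n"));
    }

    /** Substitutes the rendered directives for the placeholder in the template. */
    static String render(String template, List<String> hostPorts) {
        return template.replace(PLACEHOLDER, serverLines(hostPorts));
    }

    public static void main(String[] args) {
        String template = "upstream demo {\n" + PLACEHOLDER + "\n}";
        System.out.println(render(template,
                List.of("172.94.14.97:24463", "172.94.14.97:24464")));
    }
}
```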

Bad Gateway Server

```nginx
server {
    listen __SERVER_BAD_GATEWAY_PORT__ default_server;
    server_name _;
    return 502;
}
```

Imagine the case when all microservices are down. In that case, the "upstream demo" block would be empty, and NGINX refuses to load a configuration with an empty upstream block. To avoid ending up with zero upstream servers, we add this server as the upstream server instead; it returns the 502 (Bad Gateway) error.

Retry Logic

```nginx
proxy_next_upstream error timeout http_502 http_503 http_504 http_429;
```

When some of the microservice instances are down, NGINX should pass the request to the next server. The directive above takes care of that.
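
To make the effect of that directive more concrete, here is a deliberately simplified model of it in Java. It is not how NGINX is implemented: `NextUpstreamModel` and `proxy` are hypothetical names, connection errors and timeouts are modelled as exceptions, and limits that real NGINX applies (for example `proxy_next_upstream_tries`, or its caution with non-idempotent requests) are left out.

```java
import java.util.List;
import java.util.Set;
import java.util.function.ToIntFunction;

/** Simplified, hypothetical model of the retry behaviour; NGINX does this internally. */
final class NextUpstreamModel {
    // Status codes named in the directive; "error" and "timeout" are modelled as exceptions.
    static final Set<Integer> RETRYABLE = Set.of(502, 503, 504, 429);

    /**
     * Tries the servers in order and returns the first status that is not retryable.
     * If every attempt fails, the last failure is reported (502 for an exception).
     */
    static int proxy(List<String> servers, ToIntFunction<String> call) {
        int lastFailure = 502;
        for (String server : servers) {
            try {
                int status = call.applyAsInt(server);
                if (!RETRYABLE.contains(status)) {
                    return status;
                }
                lastFailure = status;
            } catch (RuntimeException errorOrTimeout) {
                lastFailure = 502; // simplification: a real timeout would surface as 504
            }
        }
        return lastFailure;
    }

    public static void main(String[] args) {
        List<String> servers = List.of("172.94.14.97:24463", "172.94.14.97:24464");
        // The first instance answers 503, so the request moves on to the second one.
        int status = proxy(servers, s -> s.endsWith(":24463") ? 503 : 200);
        System.out.println(status);
    }
}
```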

Hide Some Sensitive Endpoints

```nginx
location ~* ^.*(/health|/info|/metrics|/env).*$ {
    return 403;
}

location = /health {
    types { } default_type "application/json; charset=UTF-8";
    return 200 "{\"status\": \"UP\"}";
}
```

This way, we block some sensitive endpoints and still provide a health check for the NGINX server itself.
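
The two blocks overlap on `/health`, so it helps to see which one wins. NGINX checks exact-match (`=`) locations before regular-expression locations, and `~*` makes the expression case-insensitive. The sketch below models just these two blocks with `java.util.regex`; `LocationModel` and `status` are hypothetical names, and the model ignores every other location in the file.

```java
import java.util.regex.Pattern;

/** Hypothetical model of the two location blocks shown above. */
final class LocationModel {
    // Same expression as the "location ~*" block, compiled case-insensitively.
    static final Pattern BLOCKED =
            Pattern.compile("^.*(/health|/info|/metrics|/env).*$", Pattern.CASE_INSENSITIVE);

    /** Returns the status these two blocks would produce, or -1 if neither applies. */
    static int status(String uriPath) {
        if (uriPath.equals("/health")) {
            return 200; // "location =" (exact match) is checked before regex locations
        }
        if (BLOCKED.matcher(uriPath).matches()) {
            return 403;
        }
        return -1; // left to another location, such as "location /"
    }

    public static void main(String[] args) {
        for (String path : new String[] {"/health", "/actuator/health", "/rest", "/environment"}) {
            System.out.println(path + " -> " + status(path));
        }
    }
}
```

Note that the expression is broad: any path containing `/env`, such as `/environment`, is blocked as well.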

Pass All Requests to the "Demo" Microservice

```nginx
location / {
    expires -1;
    proxy_pass http://demo;
}
```

Nothing special here. If you have more than one microservice you have to put more blocks like this one.

NGINX Config Producer Implementation

The common logic of the “NGINX configuration producer” is extracted here, in a dedicated project for common/useful Quarkus libraries.

Then, you just have to fill in the microservice-related parts:

```java
private String addDemoMicroserviceConfig(String config) {
    // Use the servers known to Eureka, or fall back to the bad gateway server if there are none
    return buildUpstreamServers(getServers(getDemoMicroserviceName()))
            .map(s -> StringUtils.replace(config, DEMO_MICROSERVICE_SERVERS_PLACEHOLDER, s))
            .orElse(StringUtils.replace(config, DEMO_MICROSERVICE_SERVERS_PLACEHOLDER, getBadGatewayServer()));
}
```

The NginxService source can be found here.

Playing and Testing With the Sample

In order to play with the samples, you will need Docker installed. I am working in a Linux environment; that’s why some of the services and tests are started through bash.

Start the Eureka service discovery

This will start Eureka on port 24455.

```bash
docker run -i -d --rm -p 24455:24455 \
  --name=otaibe-nginx-with-eureka-demo-eureka-server \
  triphon/otaibe-nginx-with-eureka-demo-eureka-server
```

Eureka is set with enableSelfPreservation=false. The Eureka dashboard is available at http://localhost:24455/.

Start the NGINX Config Producer

You can start the service with the docker_run.sh script from the NGINX Config Producer project, in the following way:

```bash
bash /path/to/script/docker_run.sh <EUREKA_HOST_PORT> <HOST_NAME_OR_IP>

# Example:
bash /path/to/script/docker_run.sh 172.94.14.97:24455 172.94.14.97
```

Note that localhost or 127.0.0.1 will not work because the process will be running inside the container.

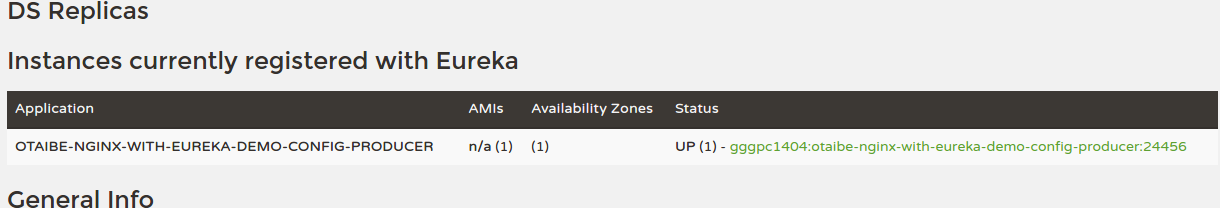

You can check if the service is available through Eureka dashboard (http://localhost:24455/)

Also, you can check the generation of the NGINX configuration file on this URL: http://localhost:24456/nginx/config.

Start and Hot Reload NGINX

You can do that with docker_run_nginx.sh from the NGINX Config Producer project. This is the script; it needs no additional configuration.

```bash
set -x #echo on

export SERVICE_NAME=otaibe-nginx-with-eureka-demo
export WORKDIR=/tmp/$SERVICE_NAME

mkdir -p "$WORKDIR"

# Fetch the initial configuration from the NGINX Config Producer
curl -o "$WORKDIR/default.conf" http://localhost:24456/nginx/config

# Note: with --network host, Docker ignores the -p port mapping
docker run -i -d --rm -p 24457:24457 --network host \
  --name=$SERVICE_NAME \
  --volume=$WORKDIR:/etc/nginx/conf.d \
  nginx:1.16

# Every 30 seconds, fetch a fresh configuration and hot-reload NGINX
while true
do
  sleep 30
  curl -o "$WORKDIR/default.conf" http://localhost:24456/nginx/config
  docker exec -i -d $SERVICE_NAME nginx -s reload
done
```

The script downloads the NGINX configuration, starts NGINX, and then downloads and hot-reloads the configuration every 30 seconds.

You can try to hit the demo microservice through the NGINX: http://localhost:24457/rest

As expected, NGINX will respond with a 502 error because we haven’t started any microservice yet.

Starting the Demo Microservice

It can be started with docker_run.sh from the Demo Microservice project.

You have to open a new terminal because the last one is already taken by the NGINX start and reload script. You can start the script in the following way:

```bash
bash /path/to/script/docker_run.sh <QUARKUS_HTTP_PORTS> <EUREKA_HOST_PORT> <HOST_NAME_OR_IP>

# Example:
bash /path/to/script/docker_run.sh "24463 24464 24465" 172.94.14.97:24455 172.94.14.97
```

This will start three demo microservices on ports 24463, 24464, and 24465. Wait a while for the configuration to be reloaded in NGINX, and then try again to hit the demo microservice: http://localhost:24457/rest.

You should have the following result in your terminal:

```text
$ curl http://localhost:24457/rest
application-name=otaibe-nginx-with-eureka-demo-microservice-24463; applicationid=f8b0d4d6-0feb-45bc-af37-d6295b7fec50
```

Let's have some fun with the demo system. You can use the curl_tests.sh from the NGINX Config Producer project:

```bash
#set -x #echo on

for i in {1..15} ; do
  curl http://localhost:24457/rest && echo ''
done
```

As you can see, it will hit the NGINX 15 times. Here is the result on my end:

```text
$ bash src/test/resources/shell/curl_tests.sh
application-name=otaibe-nginx-with-eureka-demo-microservice-24464; applicationid=53c6b5a2-8856-4065-b609-d607ee8a6c43
application-name=otaibe-nginx-with-eureka-demo-microservice-24463; applicationid=f8b0d4d6-0feb-45bc-af37-d6295b7fec50
application-name=otaibe-nginx-with-eureka-demo-microservice-24465; applicationid=6ece791f-5635-49b5-bf3b-43055f2cc837
application-name=otaibe-nginx-with-eureka-demo-microservice-24464; applicationid=53c6b5a2-8856-4065-b609-d607ee8a6c43
application-name=otaibe-nginx-with-eureka-demo-microservice-24463; applicationid=f8b0d4d6-0feb-45bc-af37-d6295b7fec50
application-name=otaibe-nginx-with-eureka-demo-microservice-24465; applicationid=6ece791f-5635-49b5-bf3b-43055f2cc837
application-name=otaibe-nginx-with-eureka-demo-microservice-24464; applicationid=53c6b5a2-8856-4065-b609-d607ee8a6c43
application-name=otaibe-nginx-with-eureka-demo-microservice-24463; applicationid=f8b0d4d6-0feb-45bc-af37-d6295b7fec50
application-name=otaibe-nginx-with-eureka-demo-microservice-24465; applicationid=6ece791f-5635-49b5-bf3b-43055f2cc837
application-name=otaibe-nginx-with-eureka-demo-microservice-24464; applicationid=53c6b5a2-8856-4065-b609-d607ee8a6c43
application-name=otaibe-nginx-with-eureka-demo-microservice-24463; applicationid=f8b0d4d6-0feb-45bc-af37-d6295b7fec50
application-name=otaibe-nginx-with-eureka-demo-microservice-24465; applicationid=6ece791f-5635-49b5-bf3b-43055f2cc837
application-name=otaibe-nginx-with-eureka-demo-microservice-24464; applicationid=53c6b5a2-8856-4065-b609-d607ee8a6c43
application-name=otaibe-nginx-with-eureka-demo-microservice-24463; applicationid=f8b0d4d6-0feb-45bc-af37-d6295b7fec50
application-name=otaibe-nginx-with-eureka-demo-microservice-24465; applicationid=6ece791f-5635-49b5-bf3b-43055f2cc837
```
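
The listing cycles through the three instances in a fixed order, which is what NGINX's default round-robin balancing would produce when the upstream block sets no other method. As a quick sanity check of the arithmetic, the hypothetical `RoundRobinModel` below counts how 15 requests spread over 3 servers; it is a toy model, not NGINX's actual scheduler.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Toy model of plain round-robin; the class and method names are hypothetical. */
final class RoundRobinModel {
    /** Counts how many of the given requests each server receives. */
    static Map<String, Integer> distribute(List<String> servers, int requests) {
        Map<String, Integer> hits = new LinkedHashMap<>();
        for (int i = 0; i < requests; i++) {
            // Request i goes to server i mod n, so the servers take turns.
            hits.merge(servers.get(i % servers.size()), 1, Integer::sum);
        }
        return hits;
    }

    public static void main(String[] args) {
        // Ports of the three demo instances, in the order seen in the listing.
        System.out.println(distribute(List.of("24464", "24463", "24465"), 15));
    }
}
```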

Every demo microservice was hit five times. Let's stop one of the demo microservices:

```bash
docker stop otaibe-nginx-with-eureka-demo-microservice-24464
```

The result is:

```text
$ bash src/test/resources/shell/curl_tests.sh
application-name=otaibe-nginx-with-eureka-demo-microservice-24463; applicationid=f8b0d4d6-0feb-45bc-af37-d6295b7fec50
application-name=otaibe-nginx-with-eureka-demo-microservice-24465; applicationid=6ece791f-5635-49b5-bf3b-43055f2cc837
application-name=otaibe-nginx-with-eureka-demo-microservice-24463; applicationid=f8b0d4d6-0feb-45bc-af37-d6295b7fec50
application-name=otaibe-nginx-with-eureka-demo-microservice-24465; applicationid=6ece791f-5635-49b5-bf3b-43055f2cc837
application-name=otaibe-nginx-with-eureka-demo-microservice-24463; applicationid=f8b0d4d6-0feb-45bc-af37-d6295b7fec50
application-name=otaibe-nginx-with-eureka-demo-microservice-24465; applicationid=6ece791f-5635-49b5-bf3b-43055f2cc837
application-name=otaibe-nginx-with-eureka-demo-microservice-24463; applicationid=f8b0d4d6-0feb-45bc-af37-d6295b7fec50
application-name=otaibe-nginx-with-eureka-demo-microservice-24465; applicationid=6ece791f-5635-49b5-bf3b-43055f2cc837
application-name=otaibe-nginx-with-eureka-demo-microservice-24463; applicationid=f8b0d4d6-0feb-45bc-af37-d6295b7fec50
application-name=otaibe-nginx-with-eureka-demo-microservice-24465; applicationid=6ece791f-5635-49b5-bf3b-43055f2cc837
application-name=otaibe-nginx-with-eureka-demo-microservice-24463; applicationid=f8b0d4d6-0feb-45bc-af37-d6295b7fec50
application-name=otaibe-nginx-with-eureka-demo-microservice-24465; applicationid=6ece791f-5635-49b5-bf3b-43055f2cc837
application-name=otaibe-nginx-with-eureka-demo-microservice-24463; applicationid=f8b0d4d6-0feb-45bc-af37-d6295b7fec50
application-name=otaibe-nginx-with-eureka-demo-microservice-24465; applicationid=6ece791f-5635-49b5-bf3b-43055f2cc837
application-name=otaibe-nginx-with-eureka-demo-microservice-24463; applicationid=f8b0d4d6-0feb-45bc-af37-d6295b7fec50
```

The "otaibe-nginx-with-eureka-demo-microservice-24464" microservice is gone, right?

Let's start three other microservices:

```bash
bash /path/to/script/docker_run.sh "24460 24461 24462" 172.94.14.97:24455 172.94.14.97
```

Wait for a while, and the result is:

```text
$ bash src/test/resources/shell/curl_tests.sh
application-name=otaibe-nginx-with-eureka-demo-microservice-24465; applicationid=6ece791f-5635-49b5-bf3b-43055f2cc837
application-name=otaibe-nginx-with-eureka-demo-microservice-24462; applicationid=d8e69a45-311b-4212-88f7-6b8f0dce3495
application-name=otaibe-nginx-with-eureka-demo-microservice-24460; applicationid=bd537f07-f840-4fd8-b3e7-30e2e1db21ff
application-name=otaibe-nginx-with-eureka-demo-microservice-24461; applicationid=3b3996af-6d00-46cf-94b7-8580704a331d
application-name=otaibe-nginx-with-eureka-demo-microservice-24463; applicationid=f8b0d4d6-0feb-45bc-af37-d6295b7fec50
application-name=otaibe-nginx-with-eureka-demo-microservice-24465; applicationid=6ece791f-5635-49b5-bf3b-43055f2cc837
application-name=otaibe-nginx-with-eureka-demo-microservice-24462; applicationid=d8e69a45-311b-4212-88f7-6b8f0dce3495
application-name=otaibe-nginx-with-eureka-demo-microservice-24460; applicationid=bd537f07-f840-4fd8-b3e7-30e2e1db21ff
application-name=otaibe-nginx-with-eureka-demo-microservice-24461; applicationid=3b3996af-6d00-46cf-94b7-8580704a331d
application-name=otaibe-nginx-with-eureka-demo-microservice-24463; applicationid=f8b0d4d6-0feb-45bc-af37-d6295b7fec50
application-name=otaibe-nginx-with-eureka-demo-microservice-24465; applicationid=6ece791f-5635-49b5-bf3b-43055f2cc837
application-name=otaibe-nginx-with-eureka-demo-microservice-24462; applicationid=d8e69a45-311b-4212-88f7-6b8f0dce3495
application-name=otaibe-nginx-with-eureka-demo-microservice-24460; applicationid=bd537f07-f840-4fd8-b3e7-30e2e1db21ff
application-name=otaibe-nginx-with-eureka-demo-microservice-24461; applicationid=3b3996af-6d00-46cf-94b7-8580704a331d
application-name=otaibe-nginx-with-eureka-demo-microservice-24463; applicationid=f8b0d4d6-0feb-45bc-af37-d6295b7fec50
```

All five demo microservices are shown now :). Seems that everything works as expected. Correct?

Stopping the System

Just stop all the containers:

```bash
docker stop otaibe-nginx-with-eureka-demo-microservice-24462 \
  otaibe-nginx-with-eureka-demo-microservice-24461 \
  otaibe-nginx-with-eureka-demo-microservice-24460 \
  otaibe-nginx-with-eureka-demo-microservice-24465 \
  otaibe-nginx-with-eureka-demo-microservice-24463 \
  otaibe-nginx-with-eureka-demo \
  otaibe-nginx-with-eureka-demo-config-producer \
  otaibe-nginx-with-eureka-demo-eureka-server
```

You can verify that there are no containers left:

```bash
docker ps | grep otaibe-nginx-with-eureka-demo
```

The only thing left is to stop docker_run_nginx.sh from the NGINX Config Producer project. Go to the terminal where it is running and press Ctrl+C.

We’re done :)

Conclusion

In my opinion, the NGINX Hot Reload feature combined with a service that dynamically builds an up-to-date NGINX configuration and then reloads it is a very powerful combination. This way, NGINX can work with any kind of service discovery, and you get a great, fast, and flexible API gateway with a very small footprint.