The Impact of AI and Platform Engineering on Cloud Native's Evolution: Automate Your Cloud Journey to Light Speed

Editor's Note: The following is an article written for and published in DZone's 2024 Trend Report, Cloud Native: Championing Cloud Development Across the SDLC.

The year 2024 and the dawn of cloud-native AI technologies marked a significant jump in computational capabilities. Artificial intelligence (AI) and platform engineering are converging to transform cloud computing. AI is merging with the cloud and transcending traditional boundaries, offering scalable, efficient, and powerful solutions that learn and improve over time. Platform engineering provides the backbone that lets these AI systems operate seamlessly within cloud environments.

Platform engineering entails designing, implementing, and managing the software platforms that serve as fertile ground for AI applications. In cloud-native environments, the integration of AI and platform engineering is not just an enhancement but a transformative force, redefining how services are delivered, consumed, and evolved.

The Rise of AI in Cloud Computing

Azure and Google Cloud are pivotal platforms in cloud computing, each offering a robust suite of AI capabilities that cater to a wide array of business needs. Azure brings its Azure AI Services and Azure Machine Learning, a collection of tools that enable developers to build, train, and deploy AI models rapidly on top of Azure's extensive cloud infrastructure. Google Cloud, on the other hand, offers AI Platform (since unified into Vertex AI) and AutoML, which simplify the creation and scaling of AI products and integrate with Google's data analytics and storage services.

These platforms empower organizations to integrate intelligent decision-making into their applications, optimize processes, and provide insights that were once beyond reach.

A quintessential case study of successful AI implementation in the cloud is that of the Zoological Society of London (ZSL), which used Google Cloud's AI to tackle the biodiversity crisis. ZSL's "Instant Detect" system harnesses AI on Google Cloud to analyze vast amounts of images and sensor data from wildlife cameras across the globe in real time. The system enables rapid identification and categorization of species and provides precise, actionable data, transforming how conservation efforts are conducted and leading to more effective protection of endangered species.

Implementations such as ZSL's showcase not only the technical prowess of cloud AI but also its potential to make a significant positive impact on critical global issues.

Platform Engineering: The New Frontier in Cloud Development

Platform engineering is a multifaceted discipline that covers the strategic design, development, and maintenance of software platforms that make application deployment and operations more efficient. It involves creating a stable, scalable foundation that gives developers the tools and capabilities they need to develop, run, and manage applications without the complexity of maintaining the underlying infrastructure. The scope of platform engineering spans building internal developer platforms, automating infrastructure provisioning, implementing continuous integration and continuous deployment (CI/CD) pipelines, and ensuring the platforms' reliability and security.
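Automated infrastructure provisioning usually follows a declarative model: engineers describe the desired state, and tooling computes the changes needed to reach it (Terraform's plan step and Kubernetes controllers both work this way). The Python sketch below illustrates only that core idea with a hypothetical `plan` function over plain dictionaries; the resource names and fields are invented for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical resource model: resource name -> configuration dict.
State = Dict[str, Dict[str, object]]


def plan(desired: State, actual: State) -> List[Tuple[str, str]]:
    """Return (action, resource_name) pairs that move `actual` toward `desired`.

    Actions are "create", "update", or "delete"; unchanged resources are skipped.
    """
    actions: List[Tuple[str, str]] = []
    for name in sorted(desired):
        if name not in actual:
            actions.append(("create", name))
        elif actual[name] != desired[name]:
            actions.append(("update", name))
    for name in sorted(actual):
        if name not in desired:
            actions.append(("delete", name))
    return actions


if __name__ == "__main__":
    desired = {"db": {"size": "small"}, "cache": {"nodes": 2}}
    actual = {
        "db": {"size": "small"},
        "cache": {"nodes": 1},
        "legacy-vm": {"cpus": 4},
    }
    for action, name in plan(desired, actual):
        print(action, name)
```

Running `plan` against a state that already matches the desired one returns an empty list. That idempotence is what makes automated provisioning safe to rerun on every pipeline execution.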

In cloud-native ecosystems, platform engineers play a pivotal role. They are the architects of the digital landscape, responsible for constructing the robust frameworks upon which applications are built and delivered. Their work involves creating abstractions on top of cloud infrastructure to provide a seamless development experience and operational excellence.

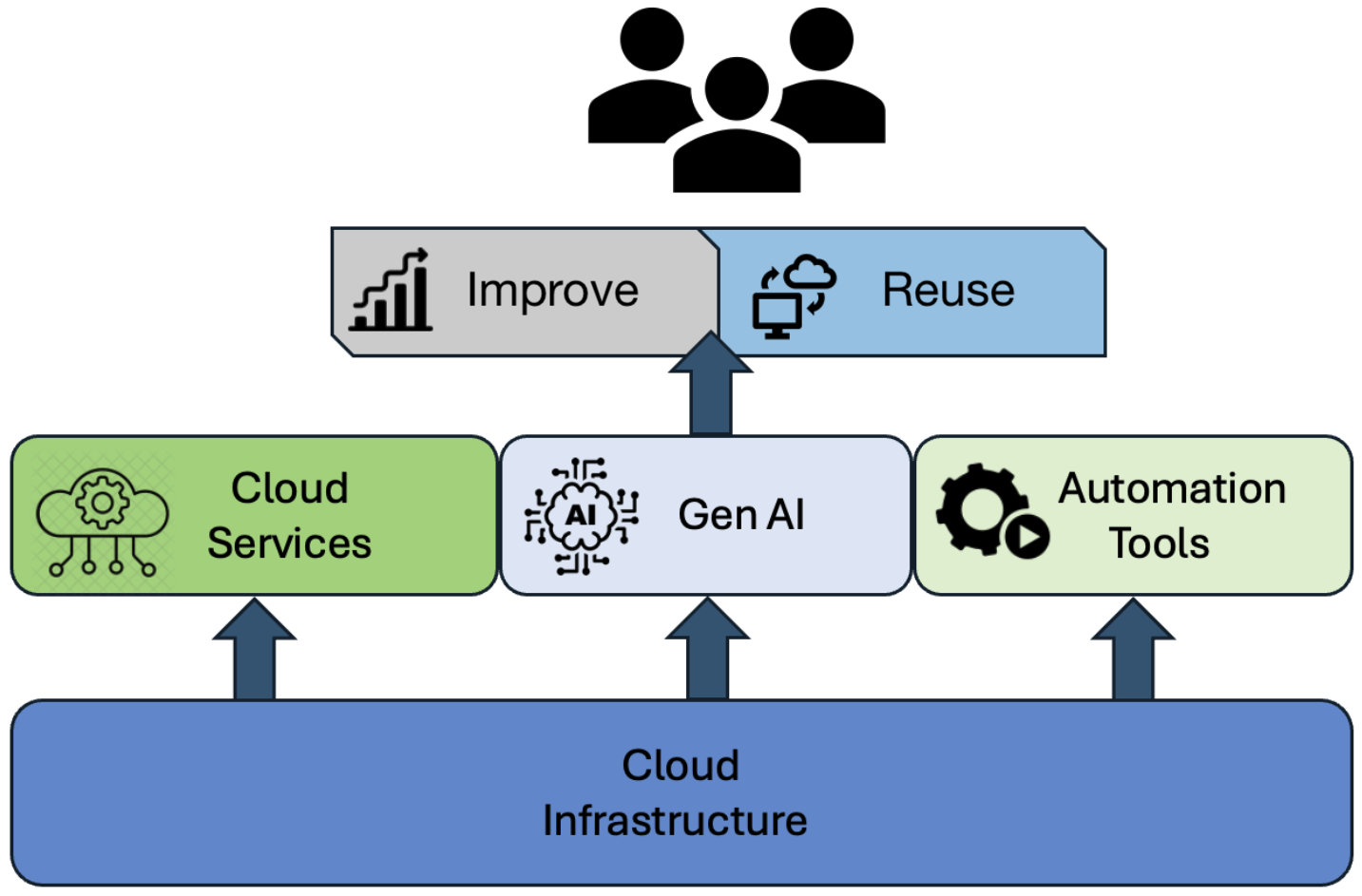

Figure 1. Platform engineering from the top down

Platform engineers enable teams to focus on creating business value by abstracting away the complexities of environment configuration, resource scaling, and service dependencies. They ensure that the underlying systems are resilient and self-healing and that they can be deployed consistently across environments.
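One common way platform teams provide this abstraction is a "golden path" that expands a few developer-supplied fields into a complete deployment definition with platform defaults. The Python sketch below builds a Kubernetes `apps/v1` Deployment as a plain dictionary. The `ServiceSpec` fields, the default resource values, and the `/healthz` probe path are hypothetical choices for illustration, not a prescribed standard.

```python
import json
from dataclasses import dataclass
from typing import Any, Dict


@dataclass
class ServiceSpec:
    """Hypothetical minimal input a developer supplies to the platform."""
    name: str
    image: str
    replicas: int = 2
    port: int = 8080


def render_deployment(spec: ServiceSpec) -> Dict[str, Any]:
    """Expand a ServiceSpec into a Kubernetes Deployment manifest (as a dict).

    Platform-owned defaults (labels, resource requests/limits, probes) are
    filled in here so individual teams do not have to configure them.
    """
    labels = {"app": spec.name, "managed-by": "platform"}
    container = {
        "name": spec.name,
        "image": spec.image,
        "ports": [{"containerPort": spec.port}],
        # Illustrative defaults; real values depend on the workload.
        "resources": {
            "requests": {"cpu": "100m", "memory": "128Mi"},
            "limits": {"cpu": "500m", "memory": "512Mi"},
        },
        # Assumes the service exposes a hypothetical /healthz endpoint.
        "readinessProbe": {"httpGet": {"path": "/healthz", "port": spec.port}},
        "livenessProbe": {"httpGet": {"path": "/healthz", "port": spec.port}},
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": spec.name, "labels": dict(labels)},
        "spec": {
            "replicas": spec.replicas,
            # The selector must match the pod template's labels.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {"containers": [container]},
            },
        },
    }


if __name__ == "__main__":
    manifest = render_deployment(ServiceSpec(name="orders", image="example.com/orders:1.0"))
    print(json.dumps(manifest, indent=2))
```

Because the defaults live in one function, a platform team can change, for example, the probe configuration for every service in a single place instead of in each team's manifests.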

The convergence of DevOps and platform engineering with AI tools is reshaping the future of cloud-native technologies. DevOps practices are enhanced by AI's ability to predict, automate, and optimize processes. AI tools can analyze data from development pipelines to predict potential issues, automate root cause analysis, and optimize resource usage, leading to improved efficiency and reduced downtime. Moreover, AI can drive intelligent automation in platform engineering, enabling proactive scaling, self-tuning of resources, and personalized developer experiences.

This synergy creates a dynamic environment where the speed and quality of software delivery are continually advancing, setting the stage for more innovative and resilient cloud-native applications.
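As a simplified stand-in for the ML models that analyze pipeline data, the sketch below flags CI/CD runs whose duration deviates sharply from the recent baseline, an early signal that a pipeline stage may be degrading. It uses a plain z-score over a trailing window rather than a trained model; the `flag_anomalous_runs` function, its window size and threshold, and the sample durations are all hypothetical.

```python
from statistics import mean, stdev
from typing import List, Sequence


def flag_anomalous_runs(durations: Sequence[float], window: int = 10,
                        threshold: float = 3.0) -> List[int]:
    """Return indices of runs whose duration is more than `threshold`
    standard deviations away from the mean of the preceding `window` runs."""
    flagged: List[int] = []
    for i in range(window, len(durations)):
        history = durations[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat baseline: any change at all is notable.
            if durations[i] != mu:
                flagged.append(i)
            continue
        z = (durations[i] - mu) / sigma
        if abs(z) > threshold:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    # Hypothetical build durations in seconds; run 11 is unusually slow.
    runs = [300, 310, 295, 305, 298, 302, 307, 299, 301, 303, 304, 620, 300]
    print(flag_anomalous_runs(runs))  # prints [11]
```

Note that once an outlier enters the trailing window it inflates the baseline's variance for the following runs; a production detector might exclude flagged runs from the history or use a learned model instead of a fixed threshold.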

Synergies Between AI and Platform Engineering

AI-augmented platform engineering introduces a layer of intelligence to automate processes, streamline operations, and enhance decision-making. Machine learning (ML) models, for instance, can parse through massive datasets generated by cloud platforms to identify patterns and predict trends, allowing for real-time optimizations. AI can automate routine tasks such as network configurations, system updates, and security patches; these automations not only accelerate the workflow but also reduce human error, freeing up engineers to focus on more strategic initiatives.

There are various examples of AI-driven automation in cloud environments. Intelligent systems can analyze application usage patterns and automatically adjust computing resources to meet demand without human intervention, which can yield significant cost savings and performance improvements. AI-operated security protocols can autonomously monitor and respond to threats more quickly than traditional methods, significantly strengthening the security posture of the cloud environment.
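Autonomous threat response generally pairs a detector with an automated action. The sketch below uses a sliding-window count of failed logins per source IP as a placeholder for a learned detector and "responds" by adding the IP to a block list. The `FailedLoginMonitor` class, its thresholds, and the in-memory block list are hypothetical; a real deployment would call a firewall or web application firewall API instead.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Set


class FailedLoginMonitor:
    """Block a source IP after too many failed logins within a time window."""

    def __init__(self, max_failures: int = 5, window_seconds: float = 60.0) -> None:
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        self._failures: Dict[str, Deque[float]] = defaultdict(deque)
        self.blocked: Set[str] = set()

    def record_failure(self, ip: str, timestamp: float) -> bool:
        """Record a failed login; return True if this event triggers a block."""
        if ip in self.blocked:
            return False
        events = self._failures[ip]
        events.append(timestamp)
        # Drop events that have fallen out of the sliding window.
        while events and timestamp - events[0] > self.window_seconds:
            events.popleft()
        if len(events) >= self.max_failures:
            # Automated response; in practice this would call a firewall API.
            self.blocked.add(ip)
            events.clear()
            return True
        return False


if __name__ == "__main__":
    monitor = FailedLoginMonitor(max_failures=3, window_seconds=10)
    for t in (0, 4, 8):
        monitor.record_failure("203.0.113.7", t)
    print(sorted(monitor.blocked))
```

An ML-based system would replace the fixed `max_failures` threshold with one derived from each environment's normal traffic, but the detect-then-act structure stays the same.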

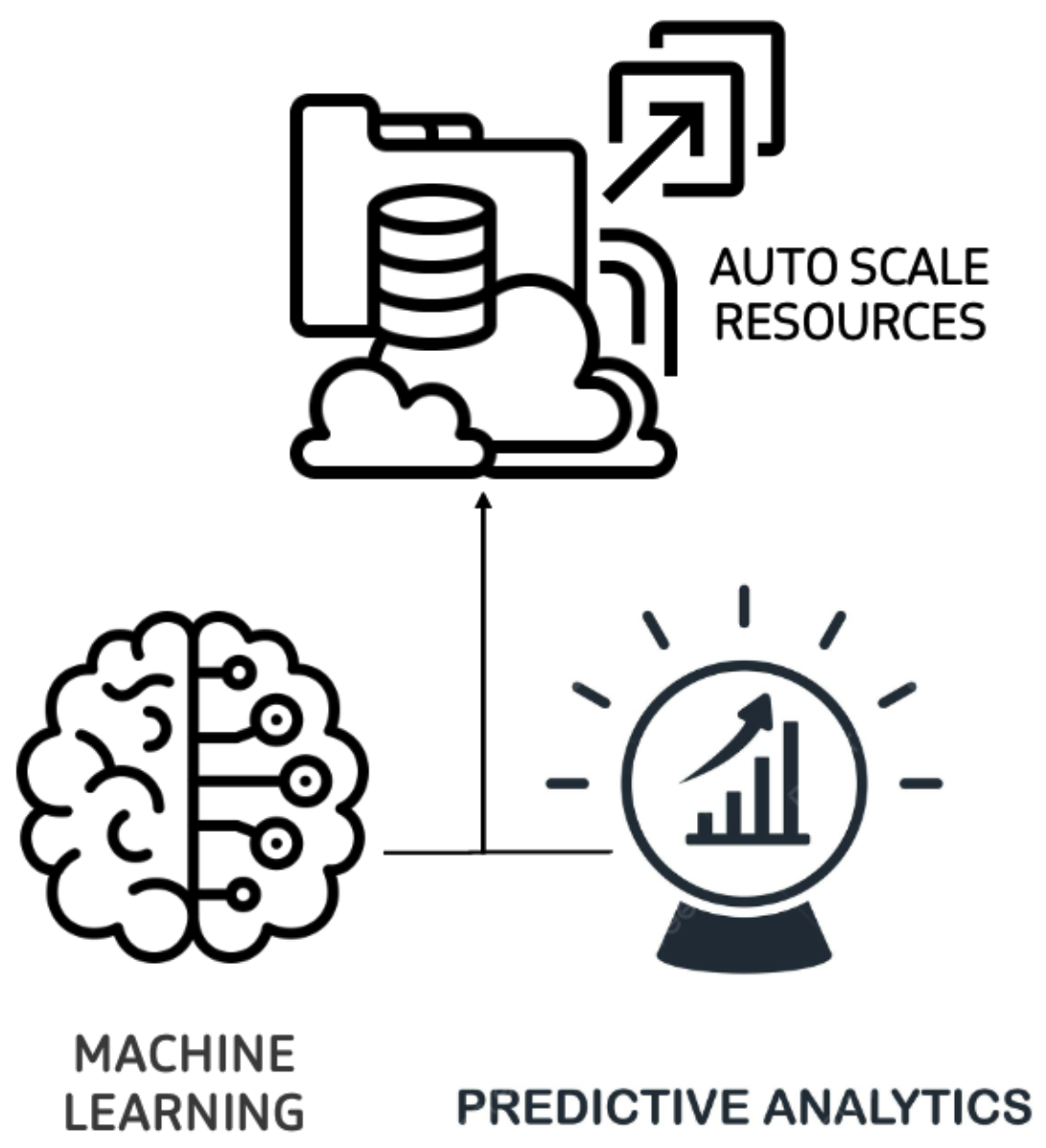

Predictive analytics and ML are particularly transformative in platform optimization. They allow for anticipatory resource management, where systems can forecast loads and scale resources accordingly. ML algorithms can optimize data storage, intelligently archiving or retrieving data based on usage patterns and access frequencies.

Figure 2. AI resource autoscaling
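Anticipatory scaling of the kind shown in Figure 2 can be sketched as two steps: forecast the next interval's load, then size capacity for it ahead of time. The Python example below uses Holt's linear exponential smoothing (level plus trend) as a simple forecaster. The smoothing factors, per-replica capacity, headroom, and replica bounds are hypothetical parameters, and production systems typically use richer models.

```python
import math
from typing import Sequence


def holt_forecast(series: Sequence[float], alpha: float = 0.5,
                  beta: float = 0.3) -> float:
    """One-step-ahead forecast using Holt's linear (double) exponential smoothing."""
    if len(series) < 2:
        raise ValueError("need at least two observations")
    level = float(series[0])
    trend = float(series[1] - series[0])
    for value in series[1:]:
        previous_level = level
        # Blend the new observation with the previous level-plus-trend estimate.
        level = alpha * value + (1 - alpha) * (level + trend)
        # Update the trend from the change in level.
        trend = beta * (level - previous_level) + (1 - beta) * trend
    return level + trend


def replicas_for(forecast_rps: float, rps_per_replica: float = 100.0,
                 headroom: float = 0.2, min_replicas: int = 2,
                 max_replicas: int = 50) -> int:
    """Size the replica count for the forecast load plus a safety headroom."""
    needed = math.ceil(forecast_rps * (1 + headroom) / rps_per_replica)
    return max(min_replicas, min(max_replicas, needed))


if __name__ == "__main__":
    # Hypothetical requests-per-second samples from recent intervals.
    recent_rps = [120, 150, 190, 240, 300]
    forecast = holt_forecast(recent_rps)
    print(round(forecast, 1), replicas_for(forecast))
```

Scaling on the forecast rather than the current reading gives new replicas time to start before the load arrives, while the headroom absorbs forecast error.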

AI can also oversee and adjust platform configurations, continuously refining the environment for optimal performance. These predictive capabilities are not limited to resource management; they also extend to predicting application failures, user behavior, and even market trends, providing insights that can inform strategic business decisions. Predictive analytics lets platform engineers move from reactive maintenance to a proactive approach, crafting platforms that are not only robust and efficient but also self-improving and adaptive to future needs.

Changing Landscapes: The New Cloud Native

The landscape of cloud native and platform engineering is rapidly evolving, particularly with leading cloud service providers like Azure and Google Cloud. This evolution is largely driven by the growing demand for more scalable, reliable, and efficient IT infrastructure, enabling businesses to innovate faster and respond to market changes more effectively.

In the context of Azure, Microsoft has been heavily investing in Azure Kubernetes Service (AKS) and serverless offerings, aiming to provide more flexibility and ease of management for cloud-native applications.

- Azure's emphasis on DevOps, through tools like Azure DevOps and its Azure Pipelines service, reflects a strong commitment to streamlining the development lifecycle and enhancing collaboration between development and operations teams.

- Azure's focus on hybrid cloud environments, with Azure Arc, allows businesses to extend Azure services and management to any infrastructure, fostering greater agility and consistency across different environments.

Google Cloud, for its part, has been leveraging its expertise in containerization and data analytics to enhance its cloud-native offerings.

- Google Kubernetes Engine (GKE) stands out as a robust, managed environment for deploying, managing, and scaling containerized applications using Google's infrastructure.

- Google Cloud's approach to serverless computing, with products like Cloud Run and Cloud Functions, offers developers the ability to build and deploy applications without worrying about the underlying infrastructure.

- Google's commitment to open-source technologies and its leading-edge work in AI and ML integrate seamlessly into its cloud-native services, providing businesses with powerful tools to drive innovation.
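Both AKS and GKE support the standard Kubernetes Horizontal Pod Autoscaler (HPA), whose documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change made when the ratio is within a tolerance of 1.0 (0.1 by default). The Python function below reproduces only that rule for illustration; it is not the controller's code and omits stabilization windows, pod readiness handling, and multi-metric logic.

```python
import math


def hpa_desired_replicas(current_replicas: int, current_metric: float,
                         target_metric: float, min_replicas: int = 1,
                         max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Approximate the core Kubernetes HPA scaling rule for a single metric."""
    if current_replicas <= 0 or target_metric <= 0:
        raise ValueError("replicas and target must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: leave the replica count unchanged.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured minReplicas/maxReplicas bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target -> scale to 6.
    print(hpa_desired_replicas(4, 90, 60))  # prints 6
```

The tolerance band keeps the autoscaler from oscillating on small metric fluctuations, which is one reason the same rule behaves predictably across managed Kubernetes services.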

Both Azure and Google Cloud are shaping the future of cloud-native and platform engineering by continuously adapting to technological advancements and changing market needs. Their focus on Kubernetes, serverless computing, and seamless integration between development and operations underlines a broader industry trend toward more agile, efficient, and scalable cloud environments.

Implications for the Future of Cloud Computing

AI is set to revolutionize cloud computing, making cloud-native technologies more self-sufficient and efficient. Advanced AI is expected to oversee cloud operations, improving performance and cost-effectiveness while enabling services to self-correct. Yet integrating AI presents ethical challenges, especially around data privacy and decision-making bias, and introduces risks that require solid safeguards. As AI reshapes cloud services, sustainability will be key: future AI must be energy efficient and environmentally friendly to ensure responsible growth.

Kickstarting Your Platform Engineering and AI Journey

To effectively adopt AI, organizations must nurture a culture oriented toward learning and prepare by auditing their IT setup, pinpointing AI opportunities, and establishing data management policies. Further:

- Upskilling in areas such as machine learning, analytics, and cloud architecture is crucial.

- Launching AI integration through targeted pilot projects can showcase the potential and inform broader strategies.

- Collaborating with cross-functional teams and selecting cloud providers with compatible AI tools can streamline the process.

- Balancing innovation with consistent operations is essential for embedding AI into cloud infrastructures.

Conclusion

Platform engineering with AI integration is revolutionizing cloud-native environments, enhancing their scalability, reliability, and efficiency. By enabling predictive analytics and automated optimization, AI ensures cloud resources are effectively utilized and services remain resilient. Adopting AI is crucial for future-proofing cloud applications, and it necessitates foundational adjustments and a commitment to upskilling. The advantages include staying competitive and quickly adapting to market shifts.

As AI evolves, it will further automate and refine cloud services, making a continued investment in AI a strategic choice for forward-looking organizations.

This is an excerpt from DZone's 2024 Trend Report, Cloud Native: Championing Cloud Development Across the SDLC.