Percona XtraDB Cluster (PXC): How Many Nodes Do You Need?

Written by Stephane Combaudon.

A question I often hear when customers want to set up a production PXC cluster is: “How many nodes should we use?”

Three nodes is the most common deployment, but when are more nodes needed? They also ask: “Do we always need to use an odd number of nodes?”

This is what we’ll clarify in this post.

This is all about quorum

I explained in a previous post that a quorum vote is held each time one node becomes unreachable. With this vote, the remaining nodes determine whether it is safe to keep on serving queries. If quorum is not reached, all remaining nodes put themselves into a state where they cannot process any query (even reads).

To get the right size for your cluster, the only question you should answer is: how many nodes can simultaneously fail while leaving the cluster operational?

- If the answer is 1 node, then you need 3 nodes: when 1 node fails, the two remaining nodes have quorum.

- If the answer is 2 nodes, then you need 5 nodes.

- If the answer is 3 nodes, then you need 7 nodes.

- And so on and so forth.
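The pattern in the list above can be written as a small formula. The sketch below is a simplified model, not Galera's actual implementation: it assumes all nodes carry equal weight and that the failures happen simultaneously. The function names `has_quorum` and `nodes_needed` are made up for illustration.

```python
def has_quorum(remaining: int, total: int) -> bool:
    """Strict majority: the surviving nodes must be MORE than half of the
    previous cluster size (exactly 50% is not quorum)."""
    return 2 * remaining > total


def nodes_needed(tolerated_failures: int) -> int:
    """Smallest cluster size that still has quorum after
    `tolerated_failures` nodes fail at the same time."""
    if tolerated_failures < 0:
        raise ValueError("tolerated_failures must be >= 0")
    return 2 * tolerated_failures + 1


for failures in (1, 2, 3):
    size = nodes_needed(failures)
    # The survivors of a cluster of this size still form a strict majority.
    assert has_quorum(size - failures, size)
    print(f"tolerate {failures} failure(s) -> {size} nodes")
```

For 1, 2 and 3 tolerated failures this gives 3, 5 and 7 nodes, matching the list.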

Remember that group communication is not free, so the more nodes in the cluster, the more expensive group communication will be. That’s why it would be a bad idea to have a cluster with 15 nodes for instance. In general we recommend that you talk to us if you think you need more than 10 nodes.

What about an even number of nodes?

The recommendation above always specifies an odd number of nodes, so is there anything bad with an even number of nodes? Let’s take a 4-node cluster and see what happens if nodes fail:

- If 1 node fails, 3 nodes are remaining: they have quorum.

- If 2 nodes fail, 2 nodes are remaining: they no longer have quorum (remember 50% is NOT quorum).

Conclusion: availability of a 4-node cluster is no better than the availability of a 3-node cluster, so why bother with a 4th node?
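To see why the 4th node buys nothing, it can help to tabulate how many simultaneous failures each cluster size tolerates. This is a minimal sketch under the same simplified assumptions as the post (equal node weights, strict majority required); `max_simultaneous_failures` is a hypothetical helper name.

```python
def max_simultaneous_failures(total: int) -> int:
    """Largest number of nodes that can fail at once while the survivors
    still form a strict majority of the original `total` nodes."""
    if total < 1:
        raise ValueError("total must be >= 1")
    # Survivors (total - f) must satisfy 2 * (total - f) > total,
    # which simplifies to f <= (total - 1) // 2.
    return (total - 1) // 2


for size in range(3, 8):
    print(f"{size} nodes: tolerates {max_simultaneous_failures(size)} failure(s)")
```

Each even size tolerates exactly as many failures as the odd size just below it: 3 and 4 nodes both tolerate 1, 5 and 6 both tolerate 2.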

The next question is: is a 4-node cluster less available than a 3-node cluster? Many people think so, specifically after reading this sentence from the manual:

“Clusters that have an even number of nodes risk split-brain conditions.”

Many people read this as “as soon as one node fails, this is a split-brain condition and the whole cluster stops working”. This is not correct! In a 4-node cluster, you can lose 1 node without any problem, exactly like in a 3-node cluster. This is no better, but no worse.

By the way the manual is not wrong! The sentence makes sense in its context.

There could actually be reasons why you might want to have an even number of nodes, but we will discuss that topic in the next section.

Quorum with multiple data centers

To provide more availability, spreading nodes in several datacenters is a common practice: if power fails in one DC, nodes are available elsewhere. The typical implementation is 3 nodes in 2 DCs:

Notice that while this setup can handle any single node failure, it can’t handle all single DC failures: if we lose DC1, 2 nodes leave the cluster and the remaining node has not quorum. You can try with 4, 5 or any number of nodes and it will be easy to convince yourself that in all cases, losing one DC can make the whole cluster stop operating.

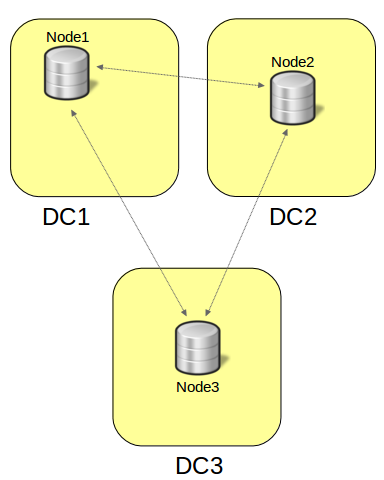
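The claim that no two-DC layout survives the loss of either DC can be checked by brute force. The sketch below assumes equal node weights and a strict-majority quorum rule, as described earlier in the post; `survives_any_dc_loss` and `two_dc_layouts` are hypothetical names used only for this illustration.

```python
from typing import List, Sequence, Tuple


def survives_any_dc_loss(nodes_per_dc: Sequence[int]) -> bool:
    """True if, whichever single DC is lost, the nodes in the other DCs
    still form a strict majority of the whole cluster."""
    total = sum(nodes_per_dc)
    return all(2 * (total - lost) > total for lost in nodes_per_dc)


def two_dc_layouts(total: int) -> List[Tuple[int, int]]:
    """Every way to split `total` nodes over two DCs, at least one node each."""
    return [(a, total - a) for a in range(1, total)]


# Try every two-DC split for clusters of 2 to 10 nodes.
surviving = [
    layout
    for total in range(2, 11)
    for layout in two_dc_layouts(total)
    if survives_any_dc_loss(layout)
]
print(surviving)  # [] : no two-DC layout survives the loss of either DC
```

The result is empty because surviving the loss of one DC requires the other DC to hold more than half of the nodes, and both DCs cannot hold more than half at the same time.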

If you want to be resilient to a single DC failure, you must have 3 DCs, for instance like this:

Other considerations

Sometimes other factors will make you choose a higher number of nodes. For instance, look at these requirements:

- All traffic is directed to a single node.

- The application should be able to fail over to another node in the same datacenter if possible.

- The cluster must keep operating even if one datacenter fails.

The following architecture is an option (and yes, it has an even number of nodes!):
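A candidate layout can be checked against these requirements with a short script. This is a hedged sketch, not the author's method: it assumes equal node weights, a strict-majority quorum, and it interprets "fail over in the same datacenter" as "every DC holds at least 2 nodes". The layouts tested, including 2 nodes in each of 3 DCs, are illustrative assumptions.

```python
from typing import Sequence


def meets_requirements(nodes_per_dc: Sequence[int]) -> bool:
    """Check a layout (number of nodes in each DC) against two conditions:
    local failover is possible in every DC, and the cluster keeps quorum
    when any single DC is lost."""
    total = sum(nodes_per_dc)
    # Assumption: local failover needs a second node in the same DC.
    local_failover = all(count >= 2 for count in nodes_per_dc)
    # Survivors must be a strict majority of the full cluster.
    survives_dc_loss = all(2 * (total - count) > total for count in nodes_per_dc)
    return local_failover and survives_dc_loss


for layout in [(1, 1, 1), (2, 1, 1), (3, 3), (2, 2, 2)]:
    print(layout, "->", meets_requirements(layout))
```

Under these assumptions, of the four layouts tried only (2, 2, 2) passes: a 6-node cluster, which is indeed an even number of nodes.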

Conclusion

Regarding availability, it is easy to estimate the number of nodes you need for your PXC cluster. But node failures are not the only aspect to consider: resilience to a datacenter failure can, for instance, influence the number of nodes you will be using.