Building a Real-Time Alerting Solution With Zero Code

As our digital universe continues to expand at an exponential rate, we find ourselves at the precipice of a paradigm shift driven by the rise of streaming data and real-time analytics. From social media updates to IoT sensor data, from market trends to weather predictions, data streams are burgeoning, and developers are seizing the opportunity to extract valuable insights instantaneously. Such a dynamic landscape opens up myriad use cases spanning industries, offering a more reactive, informed, and efficient way of making decisions. Among these use cases, one that stands out for its universal applicability and growing relevance is real-time alerting. It has the potential to revolutionize various sectors by enabling proactive responses to potential issues, thereby fostering efficiency, productivity, and safety. From identifying potential system failures in IT infrastructure to triggering alerts for abnormal health readings in medical devices, let's delve deeper into how real-time alerting can be leveraged to transform the way we interact with data.

Simplifying the Complex

Historically, the development of real-time solutions posed significant challenges. The primary issues centered around managing continuous data streams, processing them in real time, ensuring exactly-once semantics so that the same alert isn't triggered repeatedly, and dealing with high volumes of data. Traditional batch processing systems were ill-equipped to handle such tasks, as they were designed for static, finite data sets. Furthermore, creating stream processing applications requires specialized knowledge of complex architectures, resource management, and in-depth programming, making it an arduous task for many organizations.

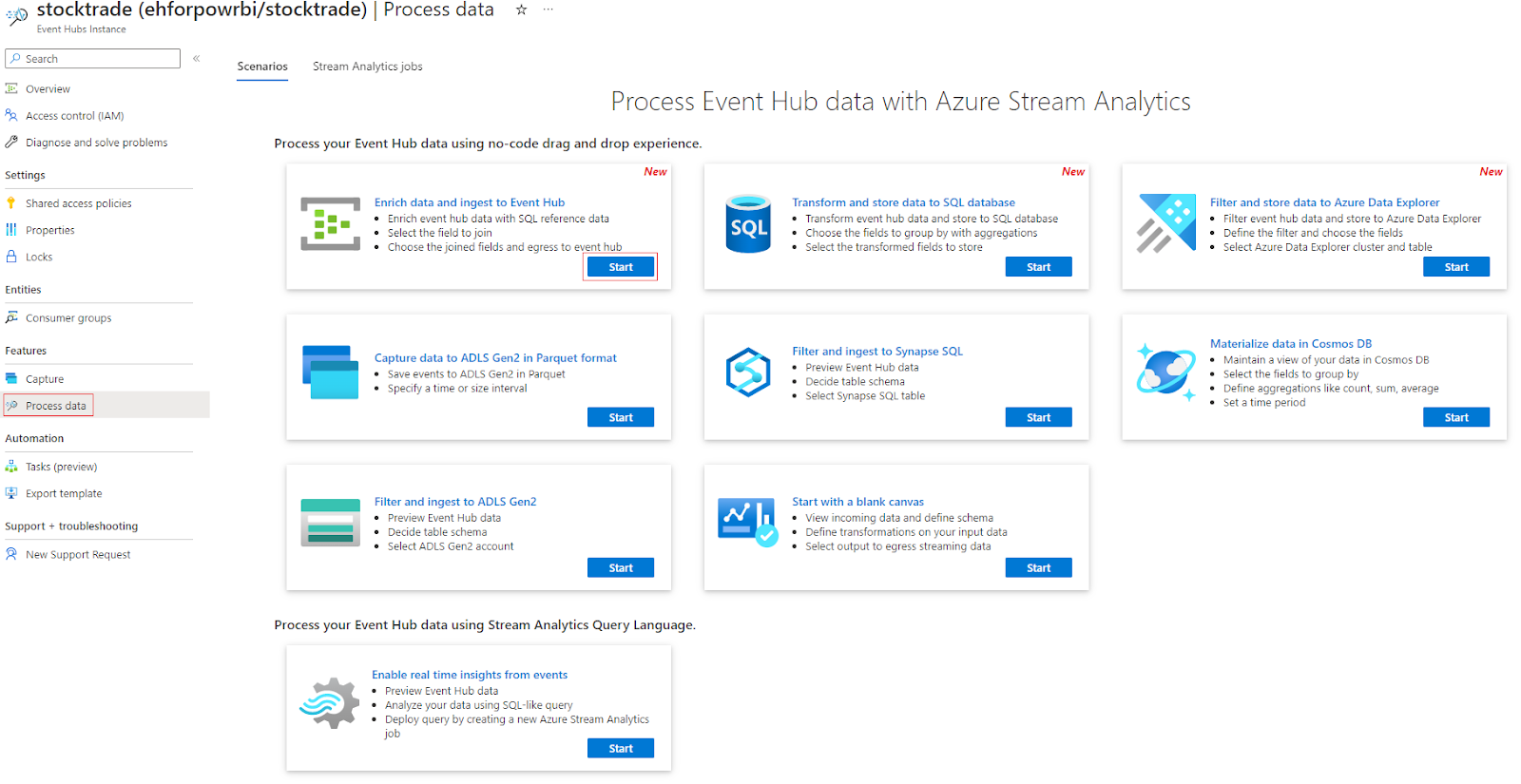

However, the advent of no-code tooling has significantly simplified the creation of real-time solutions. Azure Stream Analytics provides an easy-to-use, fully managed event-processing engine that helps run real-time analytics and complex event computations on streaming data. It abstracts away the complexity of the underlying infrastructure, thereby enabling developers to focus on defining the processing logic that manipulates streaming data. The zero-code integration with inputs and outputs also makes it easy to read from sources and write to destinations such as Azure Event Hubs. Furthermore, integration with Azure Logic Apps provides a straightforward way to create workflows and automate tasks. The Logic Apps visual designer allows users to design and automate scalable workflows with minimal coding effort, thus accelerating the process of building, deploying, and managing real-time solutions. Such advancements have democratized access to real-time analytics, making it a more feasible proposition for a broader range of organizations and use cases.

Overview of the Real-Time Alerting Solution

Our real-time alerting solution leverages the power of Azure's integrated suite of services to provide a seamless, efficient, and effective workflow. It consists of four key components. First, an Azure Event Hub serves as the initial source of streaming input data, accommodating millions of events per second from any connected device or service. This continuous inflow of data feeds into the second component, Azure Stream Analytics. As the stream processing engine, Azure Stream Analytics evaluates the incoming data based on defined queries and detects when specific conditions are met. When a defined condition is met, the processed output is sent to the third component, another Azure Event Hub. This in turn triggers the fourth component, a Logic App that's configured to spring into action whenever a new message arrives in that Event Hub. The Logic App then sends out the alert notification via the desired channel, be it email, SMS, or another communication platform. Each of these components plays a crucial role in delivering a robust, scalable, and efficient real-time alerting solution, catering to diverse use cases and ensuring that critical information is relayed immediately.
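Although the solution itself requires no code, it can help to see the flow of data written out. The following is only a minimal in-memory sketch of the four stages, not how any of the Azure services are implemented or called: the `run_pipeline` function, the `source` and `value` fields, and the threshold condition are all assumptions made for illustration.

```python
from collections import deque


def run_pipeline(readings, threshold):
    """Toy model of the flow: input hub -> stream processing -> output hub -> workflow."""
    input_hub = deque(readings)  # stands in for the input Event Hub
    output_hub = deque()         # stands in for the output Event Hub
    notifications = []           # stands in for the email or SMS channel

    # Stream processing stage: forward only the events that meet the condition.
    while input_hub:
        event = input_hub.popleft()
        if event["value"] > threshold:
            output_hub.append(event)

    # Workflow stage: every message on the output hub triggers one notification.
    while output_hub:
        event = output_hub.popleft()
        notifications.append(f"{event['source']} reported {event['value']}")

    return notifications


# Hypothetical readings; only the second one exceeds the threshold.
sample = [
    {"source": "pump-1", "value": 5},
    {"source": "pump-2", "value": 12},
]
print(run_pipeline(sample, threshold=10))
```

In the real solution each stage runs continuously and independently, and the hubs decouple the producers from the consumers; the sketch collapses that into a single function purely to show the order of the steps.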

Exactly Once Processing Guarantees

In the world of stream processing and real-time alerting, the semantics of data processing, specifically 'exactly once' versus 'at least once', play a crucial role in the accuracy and efficiency of the system.

'At least once' semantics guarantee that every message will be processed, but not that it will be processed only once. This approach can lead to the same data point being processed multiple times, particularly in the event of network hiccups, system failures, or node restarts. While this ensures no data loss, it can also lead to duplicate outputs. In a real-time alerting scenario, this could result in the same alert being triggered and sent multiple times for the same data point, which can lead to confusion, redundancy, and a diminished user experience.

On the other hand, 'exactly once' semantics ensure that each data point is processed precisely one time: no more, no less. This eliminates the risk of duplicate processing and, hence, duplicate alerts. In the case of a job or node restart, the system maintains its state so that it knows exactly where it left off and can resume processing without repeating any data points. Azure Stream Analytics provides 'exactly once' semantics out of the box when reading from and writing to Azure Event Hubs, ensuring the accuracy and reliability of the real-time alerting solution we build.
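To make the difference concrete, here is a small sketch of one common way to get exactly-once results on top of at-least-once delivery: record the offset of the last processed event and skip anything at or below it after a restart. This illustrates the general idea only and is not a description of how Azure Stream Analytics implements it internally; the `AlertProcessor` class, the `(offset, value)` event shape, and the threshold are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class AlertProcessor:
    """Toy processor that resumes from a checkpointed offset after a restart."""

    threshold: float
    checkpoint: int = -1  # offset of the last event that was fully processed
    alerts: list = field(default_factory=list)

    def process(self, events):
        # events is a list of (offset, value) pairs and may contain redeliveries.
        for offset, value in events:
            if offset <= self.checkpoint:
                continue  # already handled before the restart, so skip it
            if value > self.threshold:
                self.alerts.append((offset, value))
            self.checkpoint = offset  # record progress alongside the output


stream = [(0, 10.0), (1, 95.0), (2, 20.0), (3, 99.0)]
processor = AlertProcessor(threshold=90.0)
processor.process(stream[:3])  # first run stops after offset 2
processor.process(stream[1:])  # after a restart, offsets 1 to 3 are redelivered
print(processor.alerts)        # [(1, 95.0), (3, 99.0)]
```

Without the checkpoint comparison, the redelivered event at offset 1 would raise the same alert a second time, which is exactly the duplicate-alert problem described above.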

Defining the Streaming Business Logic

Azure Stream Analytics provides an intuitive interface where you simply need to select your Azure subscription, the Event Hubs namespace, and the instance you wish to connect to. The platform abstracts away the complexity, handling the intricacies of establishing and managing connections, security, and permissions. This seamless integration empowers even non-technical users to tap into the power of streaming analytics, transforming data into actionable insights in real time.

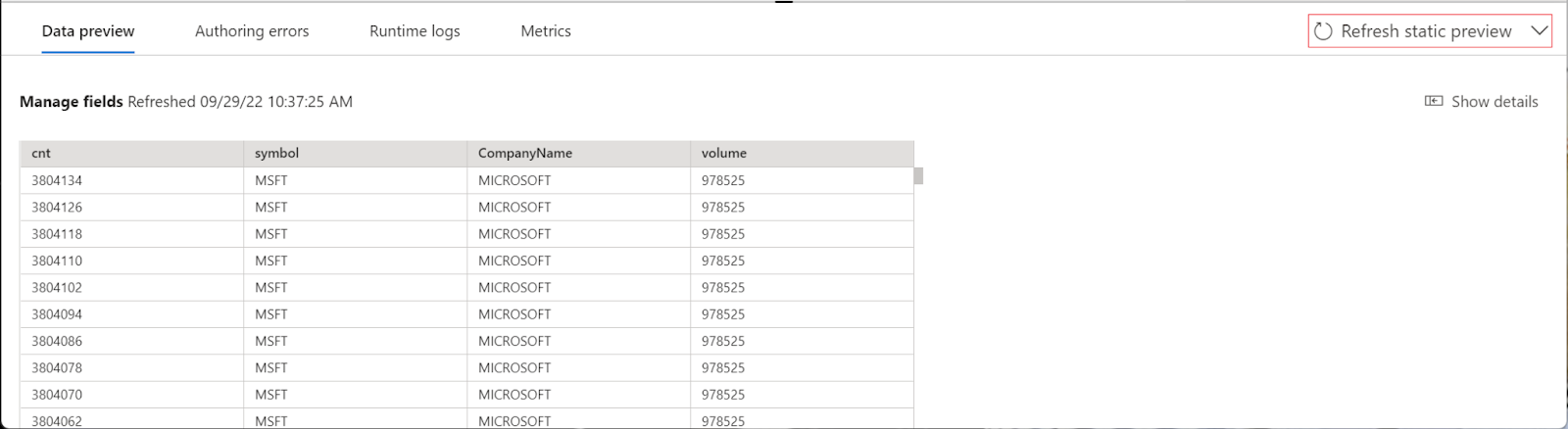

Once you've connected your Event Hub input in Azure Stream Analytics, the exploration and manipulation of your streaming data become a breeze. A distinct advantage of this tool is that it provides an immediate visual representation of your incoming data stream, allowing you to easily understand its structure and content. The power of this interface doesn't stop there.

By using the intuitive drag-and-drop no-code editor, you can build sophisticated business logic with ease. Want to enrich your streaming data with reference data? Simply drag your reference data source into the editor and connect it with your streaming data. This visual interface allows you to join, filter, aggregate, or perform other transformations without the need for complex SQL statements or code scripts.

Moreover, Azure Stream Analytics lets you validate the output of each step in your processing logic. This enables you to ensure that your transformations are accurate and produce the expected results before they are sent to the output sink, thereby reducing the risk of inaccurate data causing confusion or triggering false alerts. This combination of visualization, intuitive design, and validation makes Azure Stream Analytics a powerful stream processing engine.
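For a sense of what such filter and aggregate steps compute, the sketch below groups readings into fixed, non-overlapping time windows per device, averages them, and keeps only the windows whose average exceeds a threshold. It is a plain Python approximation of that kind of logic, not output generated by the no-code editor; the function name and the `device_id`, `ts`, and `temperature` fields are assumptions chosen for the example.

```python
from collections import defaultdict


def tumbling_window_alerts(events, window_seconds, threshold):
    """Average readings per device per fixed window and flag windows above threshold."""
    buckets = defaultdict(list)
    for event in events:
        # Align each timestamp (in seconds) to the start of its window.
        window_start = event["ts"] - event["ts"] % window_seconds
        buckets[(event["device_id"], window_start)].append(event["temperature"])

    alerts = []
    for (device_id, window_start), readings in sorted(buckets.items()):
        average = sum(readings) / len(readings)
        if average > threshold:  # the filter step: keep only anomalous windows
            alerts.append(
                {
                    "device_id": device_id,
                    "window_start": window_start,
                    "avg_temperature": average,
                }
            )
    return alerts


# Hypothetical sensor readings spanning two 60-second windows.
events = [
    {"device_id": "sensor-a", "ts": 0, "temperature": 70.0},
    {"device_id": "sensor-a", "ts": 30, "temperature": 90.0},
    {"device_id": "sensor-b", "ts": 45, "temperature": 60.0},
    {"device_id": "sensor-a", "ts": 65, "temperature": 100.0},
]
alerts = tumbling_window_alerts(events, window_seconds=60, threshold=75.0)
print(alerts)
```

Checking the intermediate averages before applying the threshold mirrors the step-by-step validation described above: if the window averages look wrong, the alert condition will be wrong too.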

Once you start your job, the compute engine will continuously read from the input Azure Event Hub, execute the business logic you've implemented, and write output events to the configured Azure Event Hub instance. Now that real-time data is being evaluated continuously against the condition, the next step is to see how we can leverage Logic Apps to send an alert to an endpoint of our choice.

Using Logic Apps to Send Notifications

Creating a Logic App with Azure Event Hub messages as triggers is a straightforward process that empowers you to automate and optimize your workflows. The process begins within the Logic Apps Designer. First, you create a new Logic App and choose the "When events are available in Event Hub" trigger. This allows your app to react to new messages arriving in your specified Event Hub. After selecting the trigger, you will need to provide connection parameters, including the name of your Azure subscription, the Event Hub namespace, and the specific Event Hub instance you wish to monitor. With this in place, your Logic App is configured to spring into action whenever a new message arrives in your Event Hub.

Azure Logic Apps provides extensive connectivity options, supporting numerous services out of the box, which allows your workflows to trigger a variety of actions based on the incoming data. You can add an action in your Logic App workflow to send an email using built-in connectors like Office 365 Outlook or Gmail. You simply need to specify the necessary parameters, such as the recipient's email address, the subject, and the body text.

Azure Logic Apps can also be configured to send SMS alerts through SMS gateway services like Twilio. You add an action to send an SMS with Twilio and specify the necessary parameters, such as the recipient's number and the message text, and your Logic App will then send an SMS alert whenever the specified trigger event occurs.
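The parameters you fill in for these actions are typically built from fields of the incoming Event Hub message. As a rough sketch of that mapping, the snippet below turns a JSON alert payload into an email and a short SMS text using only the Python standard library. It does not call the Outlook, Gmail, or Twilio connectors, and the payload fields (`device_id`, `avg_temperature`, `window_start`), the recipient address, and the 160-character limit are all assumptions for illustration.

```python
import json
from email.message import EmailMessage


def build_email(alert_json, recipient):
    """Map a JSON alert payload onto the recipient, subject, and body of an email."""
    alert = json.loads(alert_json)
    message = EmailMessage()
    message["To"] = recipient
    message["Subject"] = f"Alert: {alert['device_id']} exceeded threshold"
    message.set_content(
        f"Average temperature {alert['avg_temperature']:.1f} "
        f"in window starting at {alert['window_start']}."
    )
    return message


def build_sms(alert_json, max_length=160):
    """Build a short text message, truncated to an assumed length limit."""
    alert = json.loads(alert_json)
    text = f"ALERT {alert['device_id']}: avg temp {alert['avg_temperature']:.1f}"
    return text[:max_length]


# Hypothetical message body as it might arrive from the output Event Hub.
payload = json.dumps(
    {"device_id": "sensor-a", "window_start": 0, "avg_temperature": 80.0}
)
email = build_email(payload, recipient="oncall@example.com")
sms = build_sms(payload)
print(email["Subject"])
print(sms)
```

In the Logic Apps Designer the same mapping is done by selecting fields from the trigger output in each action's form, so none of this needs to be written by hand.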

These are just a couple of examples of the many alerting endpoints supported by Azure Logic Apps. The platform includes built-in support for numerous services across various domains, including storage services, social media platforms, other Azure services, and more, so that your real-time alerting system can be as versatile and comprehensive as your use case demands.

Conclusion

The advent of no-code tools such as Azure Stream Analytics and Logic Apps has profoundly transformed the way we approach building real-time alerting solutions. By abstracting away the complex underlying details, these tools have democratized access to real-time analytics, empowering technical and non-technical users alike to harness the power of streaming data. Developers can now connect to streaming data sources, visualize and manipulate data streams, and even apply sophisticated business logic, all with a simple drag-and-drop interface. Meanwhile, Logic Apps can be configured to trigger actions, like sending emails or SMS messages, based on the insights derived from the data. Importantly, this democratization does not come at the cost of performance, scale, or reliability. The solutions built with these tools can handle massive volumes of data, process it in real time, and deliver reliable alerts, all while ensuring 'exactly once' semantics. As we move forward in the age of data-driven decision-making, these advancements will continue to empower more people and organizations to derive immediate, actionable insights from their data, revolutionizing industries and our everyday lives.