Building a CI/CD Pipeline With Kubernetes: A Development Guide With Deployment Considerations for Practitioners

Editor's Note: The following is an article written for and published in DZone's 2024 Trend Report, Kubernetes in the Enterprise: Once Decade-Defining, Now Forging a Future in the SDLC.

In the past, before CI/CD and Kubernetes came along, deploying software was a real headache. Developers would build software on their own machines, then package it and pass it to the operations team to deploy to production. This approach frequently led to delays, miscommunication, and inconsistencies between environments. Operations teams had to set up deployments by hand, which increased the risk of human error and configuration issues. When things went wrong, rollbacks were time-consuming and disruptive. And without automated feedback and central monitoring, it was tough to keep an eye on how builds and deployments were progressing or to identify production issues.

With the advent of CI/CD pipelines combined with Kubernetes, deploying software has become much smoother. Developers simply push their code, which automatically triggers builds, tests, and deployments. This lets organizations ship new features and updates more frequently while reducing the risk of errors in production.

This article explains the CI/CD transformation with Kubernetes and provides a step-by-step guide to building a pipeline.

Why CI/CD Should Be Combined With Kubernetes

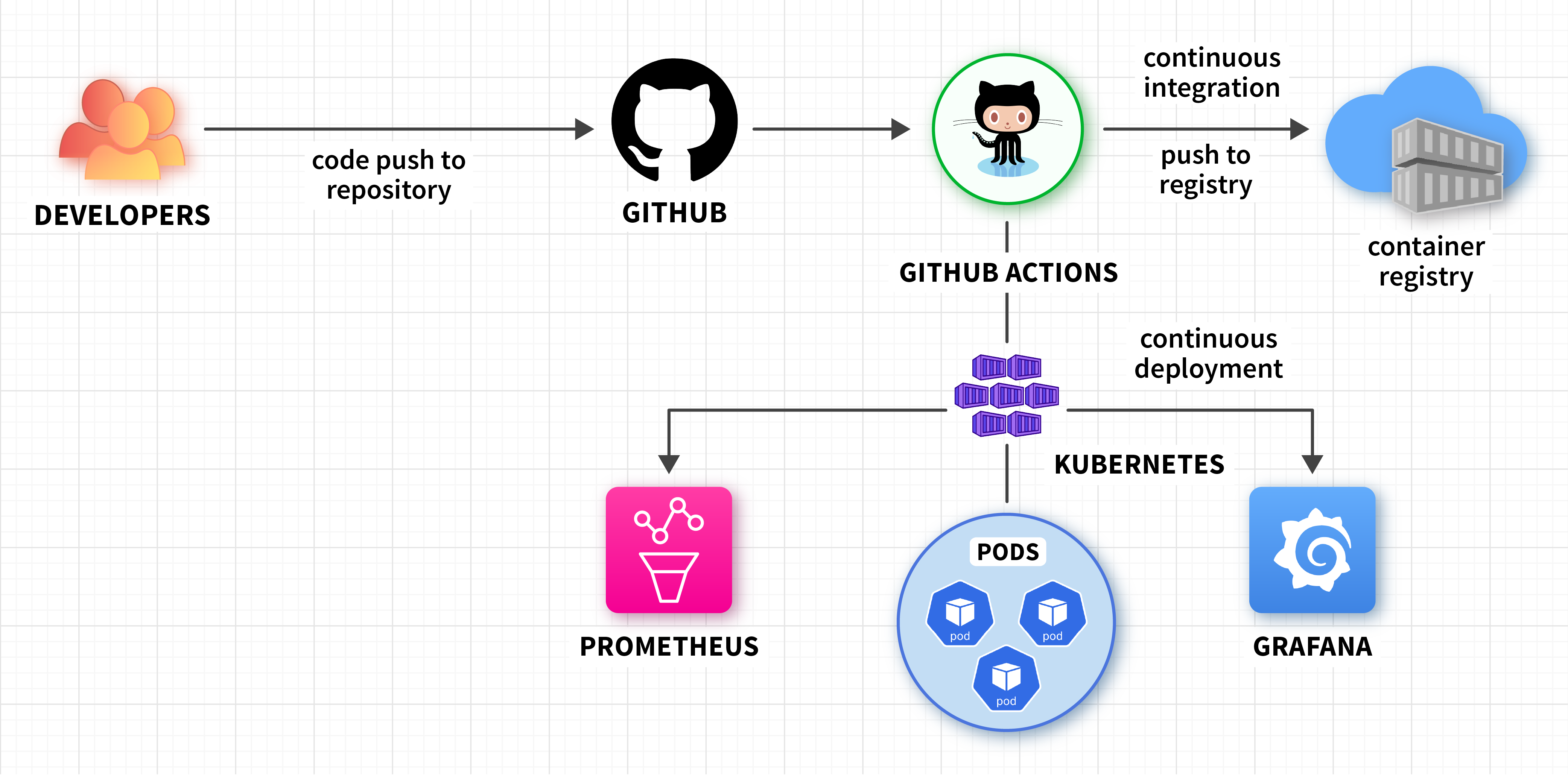

CI/CD paired with Kubernetes is a powerful combination that makes the whole software development process smoother. Kubernetes, also known as K8s, is an open-source system for automating the deployment, scaling, and management of containerized applications. CI/CD pipelines automate how we build, test, and roll out software. When you put them together, you can deploy faster and more often, boost software quality with automated tests and checks, reduce the chance of pushing out buggy code, and get more done by automating tasks that used to be done by hand. CI/CD with Kubernetes also helps development and operations teams work better together by giving them a shared space to do their jobs. This teamwork lets companies deliver high-quality applications rapidly and reliably, gaining an edge in today's fast-paced world. Figure 1 lays out the various steps:

Figure 1. Push-based CI/CD pipeline with Kubernetes and monitoring tools

There are several benefits to using CI/CD with Kubernetes, including:

- Faster and more frequent application deployments, which help roll out new features or critical bug fixes to users

- Improved quality through automated testing and built-in quality checks, which reduce the number of bugs in your applications

- Reduced risk of deploying broken code to production, since CI/CD pipelines can run automated tests and roll back deployments when problems are detected

- Increased productivity by automating manual tasks, which frees developers' time for higher-value work

- Improved collaboration between development and operations teams, since CI/CD pipelines provide a shared platform for both teams to work on

Tech Stack Options

There are different options available if you are considering building a CI/CD pipeline with Kubernetes. Some of the popular ones include:

- Open-source tools such as Jenkins, Argo CD, Tekton, or Spinnaker

- Enterprise or hosted tools, including but not limited to Azure DevOps, GitHub Actions, GitLab CI/CD, or AWS CodePipeline

Deciding whether to choose an open-source or enterprise platform to build efficient and reliable CI/CD pipelines with Kubernetes will depend on your project requirements, team capabilities, and budget.

Impact of Platform Engineering on CI/CD With Kubernetes

Platform engineering builds and maintains the underlying infrastructure and tools (the "platform") that development teams use to create and deploy applications. When it comes to CI/CD with Kubernetes, platform engineering can substantially improve the development process by hiding the complex parts of the underlying infrastructure and giving developers self-service options.

Platform engineers manage and curate tools and technologies that work well with Kubernetes to create a smooth development workflow. They create and maintain CI/CD templates that developers can reuse, allowing them to set up pipelines without thinking about the details of the infrastructure. They also set up rules and best practices for containerization, deployment strategies, and security measures, which help maintain consistency and reliability across different applications. What's more, platform engineers provide ways to observe and monitor applications running in Kubernetes, which let developers find and fix problems and make improvements based on data.

By building a strong platform, platform engineering helps dev teams focus on building and shipping features without getting bogged down in the complexities of the underlying tech. It also brings developers, operations, and security teams together, which leads to better teamwork and faster delivery.

How to Build a CI/CD Pipeline With Kubernetes

Regardless of the tech stack you select, you will often find similar workflow patterns and steps. In this section, I will focus on building a CI/CD pipeline with Kubernetes using GitHub Actions.

Step 1: Setup and prerequisites

- GitHub account – needed to host your code and manage the CI/CD pipeline using GitHub Actions

- Kubernetes cluster – create one locally (e.g., Minikube) or use a managed service such as Amazon EKS or Azure Kubernetes Service (AKS)

- kubectl – the Kubernetes command-line tool, used to connect to your cluster

- Container registry – needed for storing Docker images; you can either use a cloud provider's registry (e.g., Amazon ECR, Azure Container Registry, Google Artifact Registry) or set up your own private registry

- Node.js and npm – install Node.js and npm to run the sample Node.js web application

- Visual Studio/Visual Studio Code – an IDE or editor for making code changes and pushing them to a GitHub repository

Step 2: Create a Node.js web application

Using Visual Studio, create a simple Node.js application from the default template. The generated server.js file looks like this:

// server.js
'use strict';

var http = require('http');

var port = process.env.PORT || 1337;

http.createServer(function (req, res) {
    res.writeHead(200, { 'Content-Type': 'text/plain' });
    res.end('Hello from kubernetes\n');
}).listen(port);

Step 3: Create a package.json file to manage dependencies

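Inside the project, add a package.json file to manage dependencies. The file name must be all lowercase, because npm looks for exactly package.json and the Linux CI runner's file system is case-sensitive. The test script below is a placeholder that always passes; replace it with your real test command: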

{
  "name": "nodejs-web-app1",
  "version": "0.0.0",
  "description": "NodejsWebApp",
  "main": "server.js",
  "author": {
    "name": "Sunny"
  },
  "scripts": {
    "start": "node server.js",
    "test": "echo \"Running tests...\" && exit 0"
  },
  "devDependencies": {
    "eslint": "^8.21.0"
  },
  "eslintConfig": {}
}

Step 4: Build a container image

Create a Dockerfile to define how to build your application's Docker image:

# Dockerfile
# Use an official Node.js LTS image from Docker Hub (Node.js 14 is end-of-life)
FROM node:20

# Create and change to the app directory
WORKDIR /usr/src/app

# Copy package.json and package-lock.json (if present)
COPY package*.json ./

# Install dependencies
RUN npm install

# Copy the rest of the application code
COPY . .

# server.js reads its port from PORT; make it match the exposed port
ENV PORT=3000

# Document the port the app listens on
EXPOSE 3000

# Start the application (the entry point is server.js)
CMD ["node", "server.js"]

Step 5: Create a Kubernetes Deployment manifest

Create a deployment.yaml file to define how your application will be deployed in Kubernetes:

# deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nodejs-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nodejs-app
  template:
    metadata:
      labels:
        app: nodejs-app
    spec:
      containers:
        - name: nodejs-container
          image: <your-docker-image-tag>  # the image pushed to your container registry
          ports:
            - containerPort: 3000
          env:
            - name: NODE_ENV
              value: "production"
---
apiVersion: v1
kind: Service
metadata:
  name: nodejs-service
spec:
  selector:
    app: nodejs-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 3000
  type: LoadBalancer

Step 6: Push code to GitHub

Create a new repository on GitHub. Then initialize a local repository, commit your code, and push it to GitHub. The workflow in the next step runs on pushes to the main branch, so push to main:

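# Keep locally installed dependencies out of the repository
echo "node_modules/" >> .gitignore
git init
git add .
git commit -m "Initial commit"
# Make sure the local branch is named main, which the workflow listens on
git branch -M main
git remote add origin "<remote git repo url>"
git push -u origin main

Step 7: Create a GitHub Actions workflow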

In your GitHub repository, open the Actions tab and create a new workflow file (e.g., main.yml) in the .github/workflows directory. Then, under Settings > Secrets and variables > Actions, create the secrets the workflow uses to authenticate: DOCKER_USERNAME and DOCKER_PASSWORD for Docker Hub, and KUBECONFIG containing the kubeconfig for your cluster:

# .github/workflows/main.yml
name: CI/CD Pipeline

on:
  push:
    branches:
      - main

jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout code
        uses: actions/checkout@v4

      - name: Set up Node.js
        uses: actions/setup-node@v4
        with:
          node-version: '20'

      - name: Install dependencies
        run: npm install

      - name: Run tests
        run: npm test

      - name: Log in to Docker Hub
        uses: docker/login-action@v3
        with:
          username: ${{ secrets.DOCKER_USERNAME }}
          password: ${{ secrets.DOCKER_PASSWORD }}

      # Build the image once and push it, tagged with the commit SHA so that
      # every deployment references a unique, traceable image
      - name: Build and push Docker image
        uses: docker/build-push-action@v5
        with:
          context: .
          push: true
          tags: <your-docker-image>:${{ github.sha }}

  deploy:
    needs: build
    runs-on: ubuntu-latest
    steps:
      - name: Checkout code
        uses: actions/checkout@v4

      - name: Set up kubectl
        uses: azure/setup-kubectl@v4
        with:
          version: 'latest'

      - name: Set up Kubeconfig
        env:
          # Pass the secret through an environment variable instead of
          # interpolating it directly into the shell script
          KUBECONFIG_DATA: ${{ secrets.KUBECONFIG }}
        run: |
          mkdir -p "$HOME/.kube"
          echo "$KUBECONFIG_DATA" > "$HOME/.kube/config"

      - name: Deploy to Kubernetes
        run: |
          kubectl apply -f deployment.yaml
          # Point the Deployment at the image built in this run, then wait for the rollout
          kubectl set image deployment/nodejs-deployment nodejs-container=<your-docker-image>:${{ github.sha }}
          kubectl rollout status deployment/nodejs-deployment

Step 8: Trigger the pipeline and monitor

Modify server.js and push the change to the main branch; this triggers the GitHub Actions workflow. Monitor its progress in the Actions tab: the workflow sets up Node.js, installs the dependencies, runs the tests, builds the Docker image and pushes it to the container registry, and then deploys the application to Kubernetes.
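A green workflow run does not by itself prove that the application is serving traffic. One option is a small smoke test that polls the deployed service until it responds, which you could run locally or as an extra pipeline step. The sketch below is an assumption, not part of the original pipeline: the file name smoke-test.js, the SMOKE_URL environment variable, and the retry defaults are hypothetical, and it relies on the global fetch API available in Node.js 18 and later.

```javascript
// smoke-test.js (hypothetical): polls a URL until the service responds.
// Requires Node.js 18+ for the global fetch API.
'use strict';

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Calls `check` until it resolves to true or the attempts run out.
// Resolves with the number of attempts used; rejects if the check never passed.
async function waitUntilHealthy(check, { attempts = 10, delayMs = 3000 } = {}) {
  for (let attempt = 1; attempt <= attempts; attempt++) {
    try {
      if (await check()) return attempt;
    } catch {
      // A thrown error (e.g. connection refused) counts as a failed attempt.
    }
    if (attempt < attempts) await sleep(delayMs);
  }
  throw new Error(`Service not healthy after ${attempts} attempts`);
}

// Treats any 2xx response from `url` as healthy.
async function httpCheck(url) {
  const response = await fetch(url);
  return response.ok;
}

// Runs only when SMOKE_URL is set, so the module can also be imported safely.
if (process.env.SMOKE_URL) {
  waitUntilHealthy(() => httpCheck(process.env.SMOKE_URL))
    .then((attempts) => console.log(`Service healthy after ${attempts} attempt(s)`))
    .catch((err) => {
      console.error(err.message);
      process.exit(1);
    });
}

module.exports = { waitUntilHealthy, httpCheck };
```

For example, SMOKE_URL=http://<external-ip>/ node smoke-test.js, where <external-ip> is the address of the nodejs-service LoadBalancer. Because it exits with a non-zero code on failure, the script could also serve as a gate in a pipeline.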

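Once the workflow completes successfully, you can reach the application running inside your Kubernetes cluster through the external IP address of the LoadBalancer service, which kubectl get service nodejs-service displays (on Minikube, run minikube tunnel first so that the service receives an external IP). For metrics, you can use open-source monitoring tools such as Prometheus for collection and Grafana for dashboards.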
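For Prometheus to collect application metrics, the app has to expose them over HTTP, conventionally at /metrics, in Prometheus's text exposition format. In practice you would likely use a client library such as prom-client; the dependency-free sketch below only illustrates the format by counting requests per HTTP method. The file name, the metric name http_requests_total, and the helper names are hypothetical, and how Prometheus discovers and scrapes the Pods depends on your monitoring setup.

```javascript
// metrics.js (hypothetical): a dependency-free /metrics endpoint for Prometheus.
'use strict';

const http = require('http');

// Request counts keyed by HTTP method (a small, bounded set of label values).
const requestCounts = new Map();

// Escapes a label value as the text exposition format requires:
// backslash first, then newline and double quote.
function escapeLabel(value) {
  return value.replace(/\\/g, '\\\\').replace(/\n/g, '\\n').replace(/"/g, '\\"');
}

// Renders a Map of method -> count as a Prometheus counter.
function renderMetrics(counts) {
  const lines = [
    '# HELP http_requests_total Total HTTP requests received, by method.',
    '# TYPE http_requests_total counter',
  ];
  for (const [method, count] of counts) {
    lines.push(`http_requests_total{method="${escapeLabel(method)}"} ${count}`);
  }
  return lines.join('\n') + '\n';
}

// Same greeting as server.js, plus a /metrics route for Prometheus to scrape.
function createServer() {
  return http.createServer((req, res) => {
    const { pathname } = new URL(req.url, 'http://localhost');
    if (pathname === '/metrics') {
      res.writeHead(200, { 'Content-Type': 'text/plain; version=0.0.4' });
      res.end(renderMetrics(requestCounts));
      return;
    }
    requestCounts.set(req.method, (requestCounts.get(req.method) || 0) + 1);
    res.writeHead(200, { 'Content-Type': 'text/plain' });
    res.end('Hello from kubernetes\n');
  });
}

module.exports = { renderMetrics, createServer };
```

To try it, you could call createServer().listen(process.env.PORT || 3000) in place of the http.createServer call in server.js; requests to /metrics are not themselves counted.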

Deployment Considerations

There are a few deployment considerations to keep in mind when developing CI/CD pipelines with Kubernetes to maintain security and make the best use of resources:

- Scalability

  - Use horizontal pod autoscaling to scale the number of your application's Pods based on observed CPU or memory utilization, or on custom metrics. This helps your application perform well under varying loads.

  - When using a cloud-based Kubernetes cluster, use the cluster autoscaler to add or remove worker nodes as needed, so that enough resources are available and no money is wasted on idle nodes.

  - Make sure the CI/CD pipeline itself can scale, for example by running jobs in parallel or adding build runners, so that it can handle varying workloads as your project's needs change.

- Security

  - Scan container images regularly for known vulnerabilities. Add an image-scanning tool to your CI/CD pipeline so that insecure images are blocked before they are deployed.

  - Implement network policies to limit which Pods and services can talk to each other inside the cluster. This reduces the paths an attacker can use to move through your cluster. Network policies only take effect if your cluster's network plugin enforces them.

  - Manage sensitive information such as API keys and passwords with Kubernetes Secrets or an external key vault.

  - Use role-based access control (RBAC) to restrict who can access Kubernetes resources and CI/CD pipelines.

- High availability

  - Spread your cluster's nodes across multiple availability zones, or deploy to multiple regions, so that your application keeps running during a zone or regional outage.

  - Pod disruption budgets limit how many Pods of an application can be down at the same time during voluntary disruptions, such as draining a node for maintenance. They cannot prevent involuntary disruptions like node failures, although those do count against the budget.

  - Configure liveness and readiness probes as health checks: Kubernetes restarts containers that fail their liveness probe and stops sending traffic to Pods that fail their readiness probe.

- Secrets management

  - Store API keys, certificates, and passwords as Kubernetes Secrets, which can be mounted into Pods as files or exposed as environment variables. By default, Kubernetes stores Secrets base64-encoded but unencrypted in its data store (etcd), so enable encryption at rest (check whether your managed service already does) and restrict access with RBAC.

  - You can also consider external secrets management tools like HashiCorp Vault, AWS Secrets Manager, or Azure Key Vault if you need dynamic secret generation and auditing.
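To see how horizontal pod autoscaling arrives at a replica count, here is a simplified model of the rule the Kubernetes documentation describes for the Horizontal Pod Autoscaler: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipped when the ratio is within a tolerance of 1.0 (0.1 by default) and bounded by the configured minimum and maximum. The function is an illustrative sketch only: it ignores stabilization windows, scaling policies, and Pods that are not ready or are missing metrics, and its default minReplicas and maxReplicas values are arbitrary. Keep in mind that CPU utilization targets are computed relative to container resource requests, which the sample deployment.yaml does not set.

```javascript
// A simplified model of the Horizontal Pod Autoscaler's core scaling rule.
// It ignores stabilization windows, scaling policies, and unready/missing Pods.
'use strict';

function desiredReplicas({
  currentReplicas,
  currentMetricValue, // e.g. average CPU utilization across Pods, in percent
  targetMetricValue, // e.g. the HPA's target utilization, in percent
  minReplicas = 1, // illustrative default
  maxReplicas = 10, // illustrative default
  tolerance = 0.1, // Kubernetes' default tolerance
}) {
  const ratio = currentMetricValue / targetMetricValue;
  // Within the tolerance band around 1.0, the replica count is left alone.
  const desired =
    Math.abs(ratio - 1) <= tolerance ? currentReplicas : Math.ceil(currentReplicas * ratio);
  // The result is clamped to the configured minimum and maximum.
  return Math.min(maxReplicas, Math.max(minReplicas, desired));
}

module.exports = { desiredReplicas };
```

For example, with the manifest's three replicas, a 50% CPU target, and an average utilization of 90%, the sketch returns ceil(3 × 1.8) = 6, while an average of 52% stays within the tolerance and keeps three replicas.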

Conclusion

Leveraging CI/CD pipelines with Kubernetes has become a must-have approach in today's software development. It transforms the way teams build, test, and deploy apps, leading to greater efficiency and reliability. By combining automation, teamwork, and the strength of container orchestration, CI/CD with Kubernetes empowers organizations to deliver high-quality software at speed. The growing role of AI and ML is likely to shape CI/CD pipelines too, through smarter testing, automated code reviews, and predictive analysis that further enhance the development process. When teams adopt best practices, keep improving their pipelines, and stay attentive to new trends, they can get the most out of CI/CD with Kubernetes, driving innovation and success.

This is an excerpt from DZone's 2024 Trend Report, Kubernetes in the Enterprise: Once Decade-Defining, Now Forging a Future in the SDLC.
