Creating Custom Skills for Chatbots With Plugins

The field of conversational AI has advanced rapidly, with Large Language Models such as ChatGPT, Claude, and Bard demonstrating impressive natural language abilities. With chatbots becoming more capable, businesses are eager to use them for customer support, document generation, market research, and more. However, out-of-the-box chatbots have limitations in terms of industry-specific knowledge, integration with business systems, and personalization of responses.

Out-of-the-box chatbots can be enhanced by augmenting them with capabilities that each perform a specialized task and that work together to solve the problem at hand. These capabilities can be implemented as plugins that are invoked with the help of LLMs. By adopting a plugin framework, the foundation models can be leveraged to orchestrate and invoke the necessary plugins in the right sequence.

These plugins can not only enable seamless integration of external data sources like databases, content management systems, and API-driven services but also facilitate the addition of specialized modules like industry-specific QA, user context, sentiment analysis, and other enhancements. With the right plugin framework and supporting plugin builder tools, multiple skills can be assembled into a powerful, customized conversational application.

The right architecture allows plugins to future-proof chatbot investments by abstracting the underlying AI engine. When the core is upgraded to a better foundation model, the skills that have already been developed can be retained. In this way, enterprises can maximize their return on investment in conversational AI. As AI advances, robust custom experiences can be delivered by treating foundation models as a platform. Let's take a look at one approach to constructing a plugin builder framework.

Considerations for Building a Plugin Builder Framework

The chatbot core provides the base natural language processing and reasoning capabilities through large language models such as BERT or GPT-3, whereas the plugins provide additional skills, integrate external data sources, edit responses, and so on. The plugin framework acts as an intermediary between the chatbot's core and the plugins. It provides interfaces through which plugins register and interact with the core to extend the capabilities of the application.

Some of the primary responsibilities of the framework include:

- Allow plugins to register through a simple API like register_plugin(PluginClass)

- Maintain a registry of active plugins and the skills they provide

- Route user inputs through the plugin pipeline so that pre-processing and post-processing plugins are applied

- Invoke interrupt plugins for specific inputs to override default processing

- Coordinate multiple skills such as Q&A, recommendations, and search

- Encapsulate the chatbot core from the plugins so that upgrades to one do not affect the other

Plugins can hook into the framework at different extension points. This enables plugins to extend chatbots in a modular and customizable way. The framework abstracts the complexity.
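As a rough illustration of how such extension points might be organized, the sketch below keeps a separate list of hooks per stage. The class name `ExtensionPoints`, the stage names, and the `register` and `run` methods are assumptions made for this example and do not come from any specific framework.

```python
from collections import defaultdict
from typing import Callable, Dict, List

# Hypothetical stage names; a real framework may define different ones.
STAGES = ("preprocess", "process", "postprocess", "interrupt")


class ExtensionPoints:
    """Keeps the hooks that plugins attach to each stage."""

    def __init__(self) -> None:
        self._hooks: Dict[str, List[Callable[[str], str]]] = defaultdict(list)

    def register(self, stage: str, hook: Callable[[str], str]) -> None:
        if stage not in STAGES:
            raise ValueError(f"Unknown extension point: {stage}")
        self._hooks[stage].append(hook)

    def run(self, stage: str, text: str) -> str:
        # Apply every hook registered for the stage, in registration order.
        for hook in self._hooks[stage]:
            text = hook(text)
        return text


points = ExtensionPoints()
points.register("preprocess", str.strip)
points.register("preprocess", str.lower)
normalized = points.run("preprocess", "  What's the WEATHER?  ")
```

Keeping hooks per stage means a plugin only needs to know which stage it extends, not how the other stages are implemented.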

Plugin Definition

Here is a sample design for a plugin class. Since the plugin invocation will be orchestrated by the LLM, it is necessary to describe the input format and the attributes of the plugin so that the LLM invokes the plugin with the right input. A base plugin class is defined, which serves as a template, and each plugin is a subclass of it. The sample below uses a weather plugin as an example. Other information, such as the release status of a version (experimental, beta, etc.), can be captured as part of the subclass or as attributes of the base class.

# plugin_base.py

class Plugin:
    """Base class that every plugin subclasses."""

    def __init__(self, name, description, keywords, input_example, skills):
        self.name = name
        self.keywords = keywords
        self.description = description
        self.input_example = input_example
        self.skills = skills

    def process(self, input, context=None):
        # Plugin logic goes in the subclass
        raise NotImplementedError

## Example Weather Plugin
# plugins/weather.py

from plugin_base import Plugin

# WeatherAPI and Response are not defined in this article; they are assumed
# to be supplied by the application (an API client and a response wrapper).

class WeatherPlugin(Plugin):

    def __init__(self):
        super().__init__(
            name='weather',
            description='Given the location, this plugin will return 24hr weather forecast',
            input_example='Example 1 using ZipCode; \n\n location:08859 \n\n Example 2 using City and State; \n\n location: Parlin,NJ \n\n',
            keywords=['weather', 'forecast'],
            skills=['weather_forecast']
        )
        # Initialize the client used to invoke the external Weather API
        self.weather_api = WeatherAPI()

    def process(self, input, context=None):
        location = self._extract_location(input)
        forecast = self.weather_api.get_forecast(location)
        response = f"The weather forecast for {location} is: {forecast}"
        return Response(text=response)

    def _extract_location(self, input):
        # Logic to extract location from input text.
        # Minimal placeholder: take whatever follows "location:", as in input_example.
        location = input.split('location:', 1)[-1].strip()
        return location

Once the plugins are defined, the framework needs to maintain a registry of skills and the plugins that provide them. A plugin registry is defined so that all the plugins supported by the chatbot can be registered. Experimental plugins can be placed in a separate folder or identified in the metadata associated with the plugin.

# plugin_registry.py

from typing import List

from plugin_base import Plugin

class PluginRegistry:

    def __init__(self):
        # Instance-level list so that separate registries do not share plugins
        self.plugins: List[Plugin] = []

    def register_plugin(self, plugin: Plugin):
        self.plugins.append(plugin)

    def get_plugins_by_keywords(self, keywords: List[str]) -> List[Plugin]:
        # Return every plugin that declares at least one of the given keywords
        return [
            plugin for plugin in self.plugins
            if any(kw in plugin.keywords for kw in keywords)
        ]

The next step is to initialize and register the plugins. The sample code below demonstrates how this can be done.

# Example plugin imports
from plugin_registry import PluginRegistry
from plugins.weather import WeatherPlugin
from plugins.calendar import CalendarPlugin

# Create registry
registry = PluginRegistry()

# Register plugins
weather = WeatherPlugin()
registry.register_plugin(weather)

calendar = CalendarPlugin()
registry.register_plugin(calendar)

Execution Pipeline

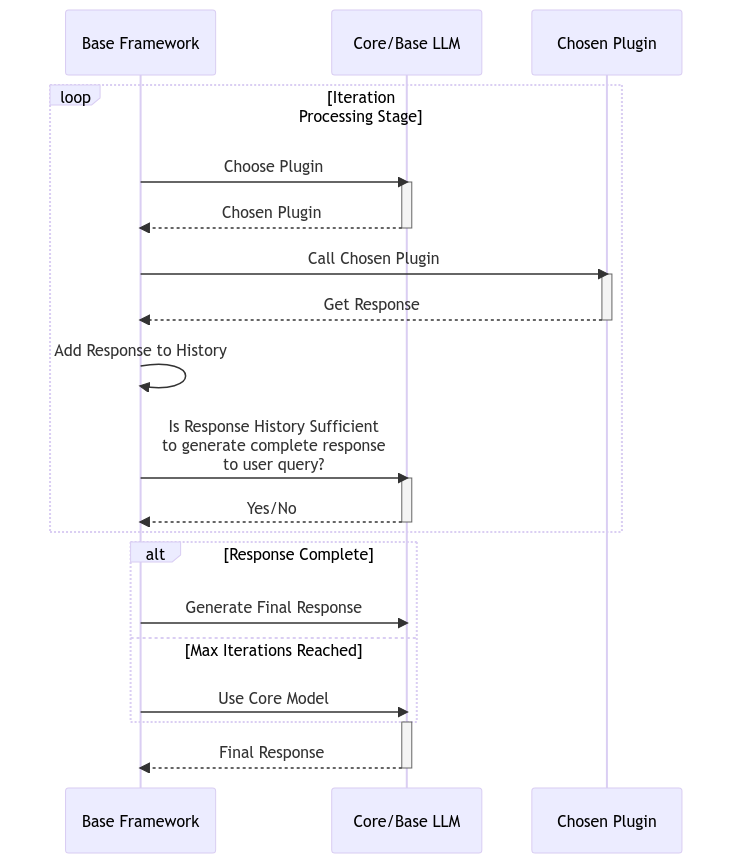

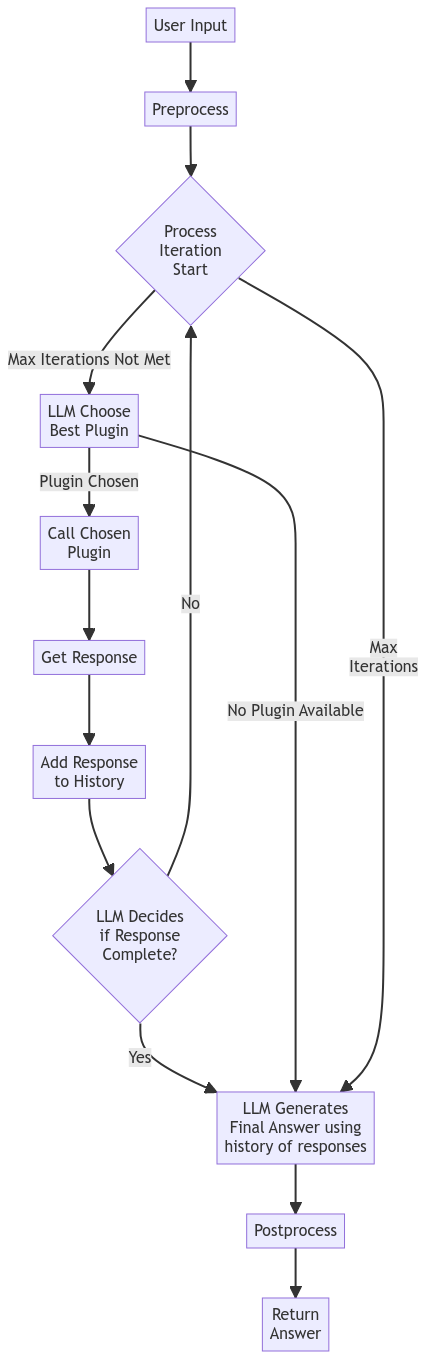

Choosing the right plugin to invoke requires some thought and, if done correctly, makes the framework extensible. One approach is to design the execution process as a pipeline with different stages. The stages of the execution flow within the plugin framework are described below.

- Pre-process: Plugins can modify or enrich the user input before sending it to the core. Useful for normalization, spelling corrections, etc.

- Process: This stage can be driven and orchestrated with the help of a large language model. This phase involves extracting the intent of the user input and determining the appropriate plugin to execute to retrieve the answer. This would be an iterative approach where responses from the previous iterations are used to determine if the user input can be answered in full. If not, another iteration ensues where the most appropriate plugin that can most likely fill in the missing gap in the answer is invoked.

- Post-process: Plugins can modify the chatbot's response before returning to the user. This allows for adding variability.

- Interrupt: The normal execution pipeline can be interrupted to be either taken over by humans or other plugins for certain types of inputs that they can handle. This can be useful if the conversation is not going in the right direction and the user's tone is trending towards negativity.

This is what the pipeline can look like.

Below is sample code that can be adapted to build the base of the plugin framework execution pipeline:

# preprocess_plugins, process_plugins, postprocess_plugins, and large_lang_model
# are assumed to be configured elsewhere in the framework.

def pipeline(user_input, max_iterations=5):
    # Preprocessing plugins
    for plugin in preprocess_plugins:
        user_input = plugin.preprocess(user_input)

    # Process phase
    response_histories = []
    completed = False

    # The iteration cap guards against infinite loops
    for _ in range(max_iterations):
        # Let the LLM pick the best plugin for the question, given prior responses
        chosen_plugin = large_lang_model.select_best_plugin(user_input, response_histories, all_plugins=process_plugins)
        if not chosen_plugin:
            break

        response_text = chosen_plugin.process(user_input)
        response_histories.append({
            'text': response_text,
            'plugin_name': chosen_plugin.name
        })

        # Let LLM decide if the answer is complete
        completed = large_lang_model.is_answer_complete(user_input, response_histories)
        if completed:
            break

    if completed:
        final_response = large_lang_model.generate_final_ans(user_input, response_histories)
    else:
        # No plugin could be selected or the answer is still incomplete,
        # so fall back to the core model's best-effort answer
        final_response = large_lang_model.generate_incomplete_answer(user_input, response_histories)

    # Postprocessing plugins
    for plugin in postprocess_plugins:
        final_response = plugin.postprocess(final_response)

    return final_response

It's important to handle scenarios that might lead to infinite loops. Safeguards such as the max_iterations cap in the sample limit the number of plugin invocations used to answer a single user query. This not only protects the chatbot from complex queries that may have no answers but also keeps response times bounded.

The methods that are invoked on the large language model class can be implemented by adopting prompt engineering techniques. For example, when implementing the select_best_plugin method, an option would be to pass the list of plugins with description, examples, the user input, and the right prompt instruction to select one plugin that would best answer the user's input.
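One possible way to build such a prompt is sketched below. The prompt wording, the `PluginInfo` dataclass, and the helper names `build_selection_prompt` and `parse_selection` are illustrative assumptions; the call to the model itself is left out because it depends on the provider being used.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PluginInfo:
    # Mirrors the descriptive attributes of the Plugin base class.
    name: str
    description: str
    input_example: str


def build_selection_prompt(user_input: str, plugins: List[PluginInfo]) -> str:
    """Lists every plugin and asks the model to answer with one plugin name."""
    catalog = "\n".join(
        f"- {p.name}: {p.description} (example input: {p.input_example})"
        for p in plugins
    )
    return (
        "You can use exactly one of the following plugins:\n"
        f"{catalog}\n\n"
        f"User input: {user_input}\n"
        "Reply with only the name of the plugin that best answers the input, "
        "or NONE if no plugin applies."
    )


def parse_selection(model_reply: str, plugins: List[PluginInfo]) -> Optional[PluginInfo]:
    """Maps the model's reply back to a plugin; unknown names yield None."""
    reply = model_reply.strip().lower()
    for p in plugins:
        if p.name.lower() == reply:
            return p
    return None


plugins = [
    PluginInfo("weather", "Returns a 24-hour weather forecast", "location: Parlin,NJ"),
    PluginInfo("calendar", "Reads and schedules calendar events", "What's my schedule today?"),
]
prompt = build_selection_prompt("Will it rain in Parlin tomorrow?", plugins)
chosen = parse_selection(" Weather\n", plugins)
```

Constraining the reply to a single plugin name keeps parsing simple, and treating any unrecognized reply as "no plugin" gives the pipeline a safe fallback.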

Checking if the answer is complete and iteratively leveraging different plugins to fully answer the user query is loosely based on the Chain-of-Thought prompting technique.
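The Interrupt stage from the list of pipeline stages is not part of the sample pipeline above. A minimal sketch of one way to check for it follows, assuming a keyword-based negativity score stands in for a real sentiment model; the cue words, the threshold, and the names `negativity_score` and `should_interrupt` are all hypothetical.

```python
from typing import List

# Hypothetical cue words; a production system would use a sentiment model.
NEGATIVE_CUES = {"useless", "terrible", "angry", "frustrated", "cancel"}


def negativity_score(message: str) -> float:
    """Fraction of words in the message that are negative cue words."""
    words = [w.strip(".,!?").lower() for w in message.split()]
    if not words:
        return 0.0
    return sum(w in NEGATIVE_CUES for w in words) / len(words)


def should_interrupt(recent_messages: List[str], threshold: float = 0.2) -> bool:
    """Hand off when the average negativity of recent messages is too high."""
    if not recent_messages:
        return False
    average = sum(negativity_score(m) for m in recent_messages) / len(recent_messages)
    return average >= threshold


history = ["This is useless!", "I am frustrated and angry."]
handoff = should_interrupt(history)
```

A framework could call a check like this before the Process stage and route the conversation to a human agent or a dedicated plugin when it returns true.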

Example Plugin Scenarios

Integrating a calendar API addresses one of the major limitations of chatbots: they cannot see the user's calendar, which prevents them from offering scheduling support. By developing chatbot plugins for calendar platforms like Google Calendar and Office365, businesses can enable features that improve productivity. Employees can check for availability, automatically schedule meetings, receive reminders, and manage travel plans. This creates value for sales teams, executive assistants, facility managers, and anyone else in a corporate setting who needs to manage schedules effectively.

Examples of conversations when a chatbot is allowed to interact with a user's calendar:

User: "What's my schedule today?"

Framework calls the calendar plugin.

Plugin: Retrieves the user's calendar events from the Google Calendar API and returns "You have a team meeting today from 2-3pm and dinner with friends scheduled for 7pm."

User: "Schedule a meeting with John next Wednesday at 10am."

Framework calls the calendar plugin with the new user input.

Plugin: Uses the Calendar API to verify availability and book the appointment, and returns "You and John are scheduled for Wednesday at ten o'clock in the morning."
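The article does not show a calendar plugin, so the following is only a sketch of how the first exchange might be served. An in-memory list stands in for a real calendar service; `Event`, `InMemoryCalendar`, and this version of `CalendarPlugin` are hypothetical, and no actual Google or Office365 API calls are made.

```python
from dataclasses import dataclass
from datetime import date, time
from typing import List


@dataclass
class Event:
    title: str
    day: date
    start: time


class InMemoryCalendar:
    """Hypothetical stand-in for a real calendar backend."""

    def __init__(self, events: List[Event]) -> None:
        self._events = events

    def events_on(self, day: date) -> List[Event]:
        # Return the day's events ordered by start time.
        return sorted((e for e in self._events if e.day == day), key=lambda e: e.start)


class CalendarPlugin:
    name = "calendar"

    def __init__(self, calendar: InMemoryCalendar) -> None:
        self.calendar = calendar

    def process(self, user_input: str, today: date) -> str:
        # Only the "today's schedule" request is handled in this sketch.
        events = self.calendar.events_on(today)
        if not events:
            return "You have nothing scheduled today."
        items = ", ".join(f"{e.title} at {e.start.strftime('%H:%M')}" for e in events)
        return f"Today you have: {items}."


today = date(2024, 1, 15)
calendar = InMemoryCalendar([
    Event("Dinner with friends", today, time(19, 0)),
    Event("Team meeting", today, time(14, 0)),
])
reply = CalendarPlugin(calendar).process("What's my schedule today?", today)
```

Passing the backend into the plugin makes it straightforward to swap the in-memory store for a real calendar client later.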

Task-specific and domain-specific Q&A plugins address another shortcoming of generic chatbots: a lack of in-depth knowledge of specialized concepts and terminology. Areas such as insurance, healthcare, law, and finance have complex business models and regulations. Developing Q&A plugins with expert knowledge in these areas provides a cheaper alternative to extensive training of human staff. Subject-matter expertise can be made available to clients 24/7. By combining FAQs with scripted conversation flows, chatbots turn into domain advisors for customer support. This allows companies that rely on specialized knowledge to offer better personal service.

Sample conversation when a chatbot is allowed to answer domain-specific questions:

User: "What is the procedure for filing an insurance claim?"

Framework passes input to insurance Q&A plugin.

Plugin: Looks up questions in the knowledge base to find the answer and returns "Here are the steps to file an insurance claim..."

Plugins allow you to tap into an external knowledge base and unlock the full potential of a chatbot. Even the most advanced models have knowledge limitations that stem from their training data. Combining chatbots with knowledge models and databases gives them access to a far larger and more current store of information. Companies can enable market research, improve customer support, and better manage knowledge. By building plugins on external knowledge bases such as company wikis, financial data feeds, or public knowledge graphs, chatbots gain access to information that was never part of their training data. This helps overcome data barriers and makes chatbots more capable.

Sample conversation when a chatbot is connected to structured data:

User: "What is the population of Canada?"

Framework passes the question to the knowledge base plugin.

Plugin: Looks up the facts in a database and returns "The population of Canada is about 38 million."
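A minimal sketch of the lookup step in this exchange is shown below, assuming a plain dictionary stands in for the database. The `FACTS` store and the `lookup_fact` helper are hypothetical, and the single entry simply reuses the figure from the conversation above.

```python
from typing import Dict, Optional

# Hypothetical fact store; a real plugin would query a database or knowledge graph.
FACTS: Dict[str, str] = {
    "population of canada": "The population of Canada is about 38 million.",
}


def lookup_fact(question: str, facts: Dict[str, str]) -> Optional[str]:
    """Returns the stored answer whose key appears in the question, if any."""
    normalized = question.lower().strip(" ?")
    for key, answer in facts.items():
        if key in normalized:
            return answer
    return None


answer = lookup_fact("What is the population of Canada?", FACTS)
missing = lookup_fact("What is the population of Mars?", FACTS)
```

Returning `None` for unknown questions lets the framework fall back to another plugin or to the core model.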

Here is some sample code for a plugin that provides industry/domain-specific Q&A capability:

## Example Domain Expert Plugin
# plugins/domain_expert_plugin.py

from plugin_base import Plugin

class DomainExpertPlugin(Plugin):

    def __init__(self):
        super().__init__(
            name='domain_expert',
            description='I am an expert plugin that can answer questions about the domain.',
            input_example='Example 1 using accounting; \n\n What is the difference between GAAP and non-GAAP? \n\n Example 2 using Finance; \n\n What is EBITA? \n\n',
            keywords=['cash flow', 'liability'],
            skills=['answer_finance_questions', 'answer_accounting_questions']
        )

    # Called when user input is received
    def process(self, input, context=None):
        # Check if this plugin can handle the input
        if self.can_answer(input):
            # Return response to framework
            return self.generate_answer(input)
        # Not handled, return control
        return None

    def can_answer(self, input):
        # A rudimentary way to check if this plugin can answer the question/input
        text = input.lower()
        return "finance" in text or "accounting" in text

    def generate_answer(self, input):
        # Logic to look up the knowledge base and compose the response
        # is application-specific and is left to the implementer
        raise NotImplementedError

# Register plugin (registry is the PluginRegistry instance created earlier)
registry.register_plugin(DomainExpertPlugin())

Additional Benefits of Building a Plugin Framework

In addition to the obvious benefit of enabling chatbots with domain knowledge and other skills, the following benefits can also be realized.

- Plugins can be enabled to access live data like calendars, IoT systems, transaction records, and real-time feeds via APIs.

- Plugins can add more variability, personality, and contextual awareness to chatbot responses.

- A plugin framework like the one described in this article facilitates rapid experimentation by combining multiple skills like Q&A, recommendations, search, etc.

- Plugins, when implemented properly, can help maintain user privacy by processing data locally and anonymizing any user data sent to third parties.

- Plugins encapsulate specific skills and knowledge decoupled from the chatbot's base model, allowing easier upgrading to newer chatbot generations over time.

- Plugins that are already built get "smarter" when paired with improved base large language models, which saves rework.
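The decoupling described in the last two points can be sketched as follows, assuming the framework exposes the model through a small interface. `LanguageModel`, the two echo models, and `SummaryPlugin` are hypothetical names used only to show that a plugin written against the interface keeps working when the model behind it is replaced.

```python
from typing import Protocol


class LanguageModel(Protocol):
    """Minimal interface the framework relies on (an assumption of this sketch)."""

    def complete(self, prompt: str) -> str: ...


class EchoModelV1:
    # Stand-in for an older foundation model.
    def complete(self, prompt: str) -> str:
        return f"[v1] {prompt}"


class EchoModelV2:
    # Stand-in for a newer foundation model with the same interface.
    def complete(self, prompt: str) -> str:
        return f"[v2] {prompt}"


class SummaryPlugin:
    """Example skill that depends only on the LanguageModel interface."""

    def __init__(self, model: LanguageModel) -> None:
        self.model = model

    def process(self, user_input: str) -> str:
        return self.model.complete(f"Summarize: {user_input}")


old_reply = SummaryPlugin(EchoModelV1()).process("quarterly report")
new_reply = SummaryPlugin(EchoModelV2()).process("quarterly report")
```

The plugin code is identical in both calls; only the model object passed to it changes.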

Conclusion

By extending the capabilities of the chatbot's base large language model with domain knowledge, integration with external APIs, and customized responses, a robust chatbot plugin framework offers flexibility and extensibility. Rather than being constrained by a rigid, monolithic design, chatbots can use plugins to unlock new skills. Using the right architecture is the key to properly organizing these skills. A well-designed plugin builder framework acts as a middleware layer, providing extension points for plugins to fit in without compromising the independence of the chatbot's base model. This enables stable improvements over time and also supports rapid iteration. As new and more advanced chatbot models emerge, existing plugins can be paired with the next-generation base model. The business value accumulated in existing skills is preserved rather than lost. This makes designing a robust plugin framework an important best practice for production chatbot deployments. By adopting some of the principles outlined in this article, businesses can effectively adapt chatbots to present and future needs.