AOT Compilation Makes Java More Powerful

In a previous article, I found that about 6,000 classes are loaded for a simple hello-world Spring Boot REST application. Although the Quarkus version reduces that number to 3,300+, it is still far too many. In this article, I will show how Java AOT (ahead-of-time) compilation eliminates dead code and thereby improves performance dramatically.

The experiment uses Quarkus as the framework.

If you prefer video, you can watch the YouTube videos embedded in this article to get the main idea.

Two Perspectives on Java Performance

Time to First Response

Java applications have a warm-up effect, which is not a welcome feature on a container platform like Kubernetes.

In such a highly dynamic environment, applications come and go quickly, like cells in a metabolism. Under a serverless architecture, the app lifecycle is even shorter, so the warm-up effect makes Java applications a poor fit.

There are two well-known causes:

Lazy initialization

Traditionally, lazy initialization is a strategy to save memory and CPU by deferring work until a request arrives. Java frameworks and servers in particular have a reputation for using it, sometimes aggressively. A container environment does not favor this technique, because the first request has to pay the deferred cost.
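To make the cost concrete, here is a minimal sketch in plain Java. The class and method names (`LazyInitDemo`, `Lazy`, `buildExpensiveResource`) are hypothetical and not taken from any framework; the point is only that nothing is built at startup, so the first request triggers the build.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

/** Hypothetical illustration of lazy initialization; not taken from any framework. */
final class LazyInitDemo {

    /** Counts how many times the expensive resource was built. */
    static final AtomicInteger BUILDS = new AtomicInteger();

    /** Wraps a factory and runs it at most once, on first use. */
    static final class Lazy<T> implements Supplier<T> {
        private final Supplier<T> factory;
        private T value;
        private boolean initialized;

        Lazy(Supplier<T> factory) {
            this.factory = factory;
        }

        @Override
        public synchronized T get() {
            if (!initialized) {
                value = factory.get(); // the first caller pays this cost
                initialized = true;
            }
            return value;
        }
    }

    /** Stands in for an expensive component such as a connection pool. */
    static String buildExpensiveResource() {
        BUILDS.incrementAndGet();
        return "resource";
    }

    public static void main(String[] args) {
        Lazy<String> resource = new Lazy<>(LazyInitDemo::buildExpensiveResource);
        System.out.println("builds after startup: " + BUILDS.get());
        resource.get(); // first request triggers the build
        resource.get(); // later requests reuse the cached value
        System.out.println("builds after two requests: " + BUILDS.get());
    }
}
```

In a long-lived server this deferral is cheap, but in a short-lived container the first request may be one of very few the instance ever serves.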

JVM warm-up

This is inherent to the JVM. The JIT compiler selectively compiles hot code, hence the name HotSpot in OpenJDK. Only code on the hot path gets the most aggressive (C2) optimization, and the JVM does not apply it until the app has warmed up.
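One rough way to observe this yourself is to time the same workload in repeated rounds. The sketch below is a hypothetical micro-demo (`WarmUpDemo` and its methods are names I made up), not a rigorous benchmark; timings depend on the machine and JVM, so no expected numbers are given.

```java
/** Hypothetical micro-demo of JIT warm-up; timings vary by machine and JVM. */
final class WarmUpDemo {

    /** Keeps results alive so the JIT cannot discard the work entirely. */
    static volatile long sink;

    /** A small CPU-bound workload: the sum of i*i for 0 <= i < n. */
    static long sumOfSquares(int n) {
        long total = 0;
        for (int i = 0; i < n; i++) {
            total += (long) i * i;
        }
        return total;
    }

    /** Runs the workload `calls` times and returns the elapsed nanoseconds. */
    static long timeBatch(int calls, int n) {
        long start = System.nanoTime();
        long acc = 0;
        for (int c = 0; c < calls; c++) {
            acc += sumOfSquares(n);
        }
        long elapsed = System.nanoTime() - start;
        sink = acc;
        return elapsed;
    }

    public static void main(String[] args) {
        // Early rounds typically run interpreted or lightly compiled code;
        // later rounds usually benefit from JIT-compiled code.
        for (int round = 1; round <= 5; round++) {
            long micros = timeBatch(2_000, 10_000) / 1_000;
            System.out.printf("round %d: %d us%n", round, micros);
        }
    }
}
```

For serious measurements a harness such as JMH is the usual choice; this loop is only meant to make the effect visible.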

RSS (Whole Process Memory Consumption)

RSS (Resident Set Size) is the amount of physical memory held by the whole process.
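On Linux, a process can read its own RSS from the `VmRSS` line of `/proc/self/status`. The following is a minimal sketch under that assumption; `RssReader` and `parseVmRssKb` are hypothetical names, and the code simply reports that the file is unavailable on other operating systems.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;
import java.util.OptionalLong;

/** Hypothetical helper that reads this process's RSS on Linux. */
final class RssReader {

    /** Extracts the VmRSS value in kB from the lines of /proc/<pid>/status. */
    static OptionalLong parseVmRssKb(List<String> statusLines) {
        for (String line : statusLines) {
            if (line.startsWith("VmRSS:")) {
                // Typical line: "VmRSS:     123456 kB"
                String[] parts = line.substring("VmRSS:".length()).trim().split("\\s+");
                return OptionalLong.of(Long.parseLong(parts[0]));
            }
        }
        return OptionalLong.empty();
    }

    public static void main(String[] args) throws IOException {
        Path status = Paths.get("/proc/self/status"); // Linux only
        if (!Files.isReadable(status)) {
            System.out.println("/proc/self/status is not available on this system");
            return;
        }
        OptionalLong rssKb = parseVmRssKb(Files.readAllLines(status));
        if (rssKb.isPresent()) {
            System.out.println("RSS: " + rssKb.getAsLong() / 1024 + " MB");
        } else {
            System.out.println("VmRSS line not found");
        }
    }
}
```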

Java applications usually take a lot of memory, even a hello-world application. For a Spring Boot hello-world program, you would need:

The JVM (JVM 11 alone is 260+ MB).

An application server, e.g., Tomcat, Vert.x, Netty, or Undertow, depending on your choice, which gives you the ability to run the server and servlets.

A smart framework that enables you to do smart Java programming.

Imagine how much of what is loaded is irrelevant to the hello-world logic.
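You can get a feel for this with the standard `java.lang.management` API, which reports how many classes the running JVM has loaded. The sketch below is a bare hello world (the class name `LoadedClassCount` is hypothetical); the number it prints depends on the JVM version and on what is on the classpath, so treat it as a way to compare setups rather than a fixed figure.

```java
import java.lang.management.ClassLoadingMXBean;
import java.lang.management.ManagementFactory;

/** Hypothetical hello world that reports how many classes the JVM has loaded. */
final class LoadedClassCount {

    /** Returns the number of classes currently loaded in this JVM. */
    static int loadedClasses() {
        ClassLoadingMXBean bean = ManagementFactory.getClassLoadingMXBean();
        return bean.getLoadedClassCount();
    }

    public static void main(String[] args) {
        System.out.println("Hello World");
        System.out.println("classes currently loaded: " + loadedClasses());
    }
}
```

Calling the same method from inside a framework-based application, after it has served a request, gives a comparable number for that stack.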

But why does it matter now and not before? Because before containers and microservices became popular, the cost was shared: multiple Java applications packaged as WARs, or a single big monolithic application, shared one JVM and application server.

Java AOT Compilation Eliminates Dead Code

The idea is not new. Oracle announced the GraalVM project in April 2018; it includes a native-image tool that turns a JVM-based application into a native executable binary.

It looks like this:

$ native-image -jar my-app.jar \
    -H:InitialCollectionPolicy='com.oracle.svm.core.genscavenge.CollectionPolicy$BySpaceAndTime' \
    -J-Djava.util.concurrent.ForkJoinPool.common.parallelism=1 \
    -H:FallbackThreshold=0 \
    -H:ReflectionConfigurationFiles=... \
    -H:+ReportExceptionStackTraces \
    -H:+PrintAnalysisCallTree \
    -H:-AddAllCharsets \
    -H:EnableURLProtocols=http \
    -H:-JNI \
    -H:-UseServiceLoaderFeature \
    -H:+StackTrace \
    --no-server \
    --initialize-at-build-time=... \
    -J-Djava.util.logging.manager=org.jboss.logmanager.LogManager \
    -J-Dio.netty.leakDetection.level=DISABLED \
    -J-Dvertx.logger-delegate-factory-class-name=io.quarkus.vertx.core.runtime.VertxLogDelegateFactory \
    -J-Dsun.nio.ch.maxUpdateArraySize=100 \
    -J-Dio.netty.allocator.maxOrder=1 \
    -J-Dvertx.disableDnsResolver=true

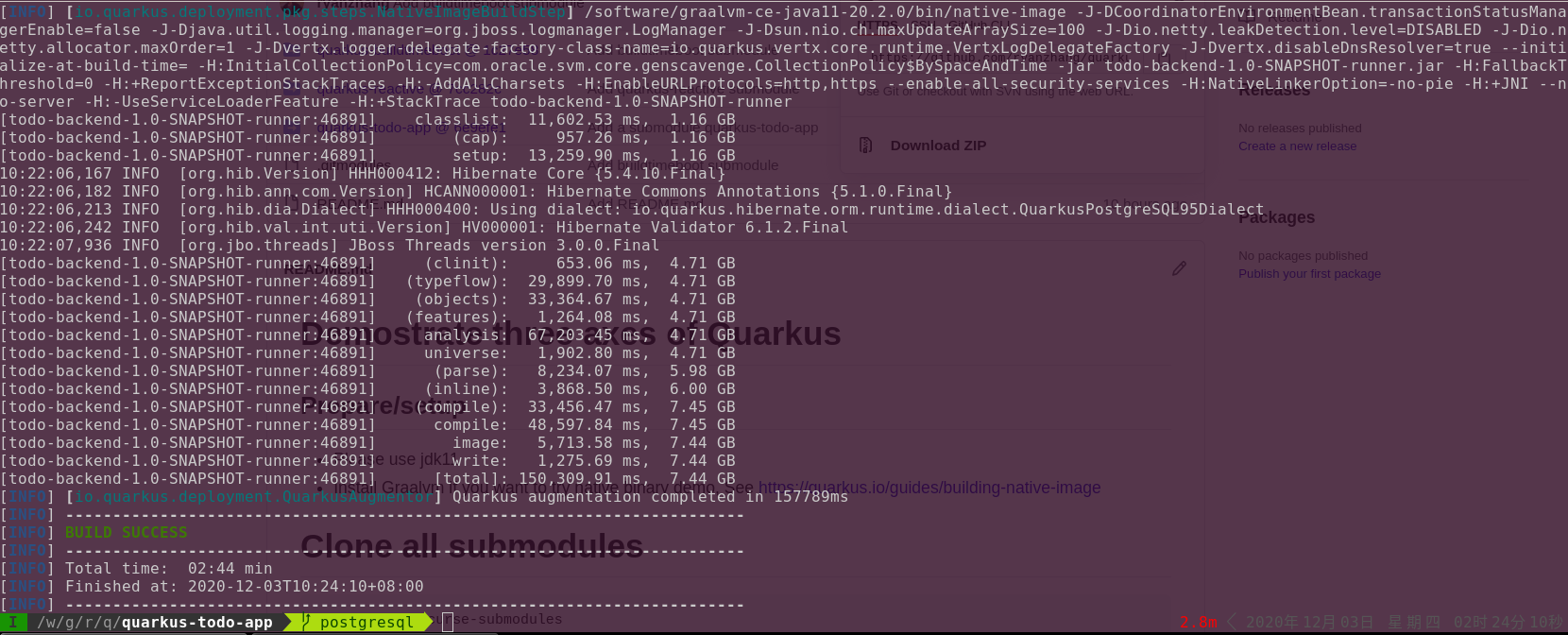

When you build the application with:

./mvnw package -Pnative

You will get:

The quarkus-maven-plugin does all the hard work and configures the native build options for us automatically.

The performance contrast is obvious; the following diagram is from quarkus.io:

Now let's focus on the contrast between Quarkus native mode (green) and Quarkus JVM mode (blue). (If you are interested in what Quarkus does differently in JVM mode, I explored how build-time boot improves performance in my previous article.)

I am going to test a real-world use case: a todo app that connects to PostgreSQL using Hibernate.

I have recorded a short video to show the performance impact:

In the video, I demonstrate that:

RSS is about 7x lower in native mode than in JVM mode.

I can easily start 250 instances of my application in native mode, whereas 50 instances in JVM mode already keep my CPU very busy.

The difference in time to first response is huge as well, even with 250 native instances running against 25 JVM instances.

So the runtime performance of Java native mode is very promising.

If you prefer to run the code and see for yourself, here is the code and guide.

How Does Java AOT Work

Judging from the diagram and the experiment, Java AOT compilation performs very well. Now let's see how it works.

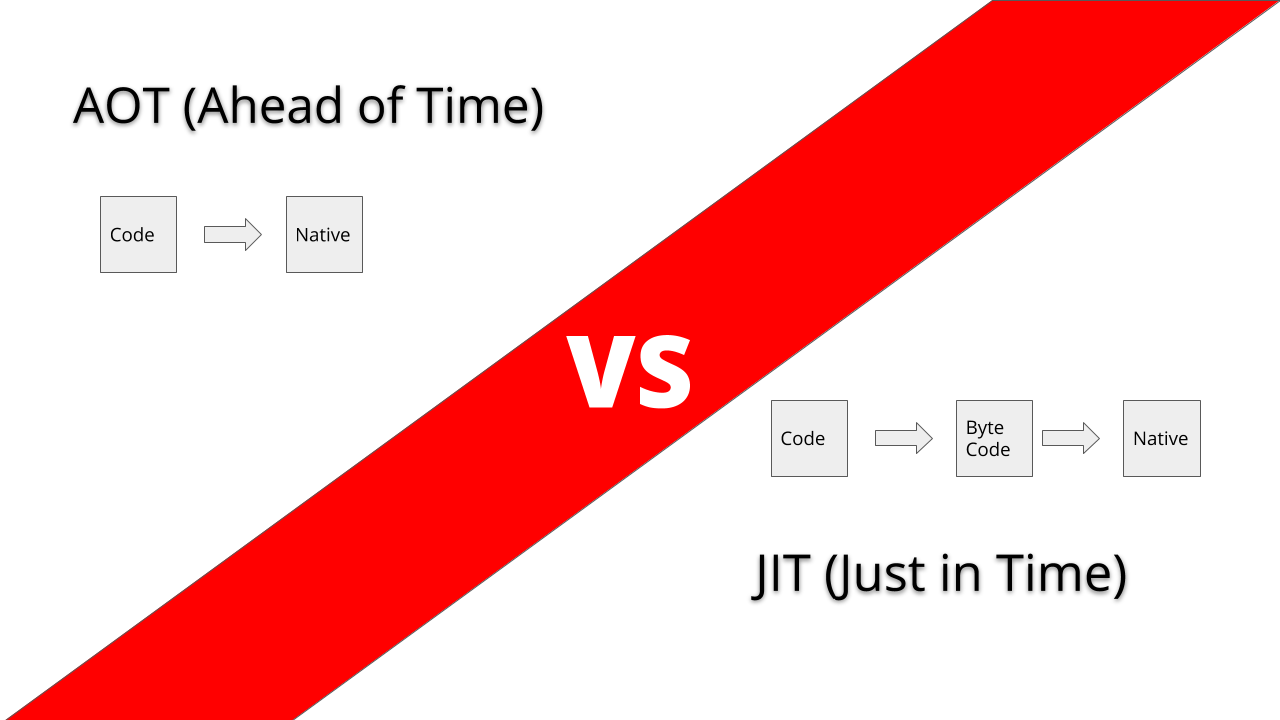

First, we need to understand that Java traditionally uses a JIT mechanism to run Java bytecode.

When the application starts, the java command launches the JVM, which interprets and compiles the bytecode at runtime. Many optimizations, including extensive use of the code cache, are applied to this on-the-fly compilation. One big driver for this design is cross-platform compatibility.

AOT works differently: it compiles the bytecode directly into a native binary before runtime (i.e., at build time). As a consequence, the binary is bound to a specific hardware architecture. Here it is compiled only for x86 and gives up compatibility with other architectures. The tradeoff is worthwhile because we target only Linux containers, which are already ubiquitous in the cloud.
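Compiling at build time also means the build must decide which code can ever run, and this is where dead code gets dropped. The sketch below is a toy model of that idea, not GraalVM's actual analysis: it walks a hand-written call graph from an entry point and keeps only what is reachable. The class `ReachabilitySketch` and all method names in the graph are made up for illustration.

```java
import java.util.ArrayDeque;
import java.util.Arrays;
import java.util.Collections;
import java.util.Deque;
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

/** Toy reachability analysis over a call graph; not GraalVM's real algorithm. */
final class ReachabilitySketch {

    /** Returns every method reachable from the entry point by following call edges. */
    static Set<String> reachable(Map<String, List<String>> callGraph, String entryPoint) {
        Set<String> seen = new LinkedHashSet<>();
        Deque<String> work = new ArrayDeque<>();
        work.push(entryPoint);
        while (!work.isEmpty()) {
            String method = work.pop();
            if (seen.add(method)) { // visit each method only once
                List<String> callees = callGraph.getOrDefault(method, Collections.<String>emptyList());
                for (String callee : callees) {
                    work.push(callee);
                }
            }
        }
        return seen;
    }

    public static void main(String[] args) {
        // Hypothetical call graph: caller -> methods it calls.
        Map<String, List<String>> callGraph = new HashMap<>();
        callGraph.put("App.main", Arrays.asList("TodoResource.list"));
        callGraph.put("TodoResource.list", Arrays.asList("TodoRepository.findAll"));
        callGraph.put("TodoRepository.findAll", Collections.<String>emptyList());
        callGraph.put("LegacyExporter.export", Arrays.asList("XmlWriter.write"));
        callGraph.put("XmlWriter.write", Collections.<String>emptyList());

        Set<String> live = reachable(callGraph, "App.main");
        Set<String> dead = new TreeSet<>(callGraph.keySet());
        dead.removeAll(live);

        System.out.println("kept:    " + live);
        System.out.println("dropped: " + dead);
    }
}
```

The sketch only works because every call edge is known up front, which is exactly the assumption that Java's dynamic features break.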

There are two major technical challenges for this solution.

Turning “Open World” Java into “Closed World” Java

The difficulty is proportional to the number of Java dependencies you use. After 25 years of development, the Java ecosystem is huge and complete.

Over those years, Java became what it is because its runtime is dynamic rather than static. It provides features such as reflection, runtime class loading, dynamic proxies, and runtime code generation. Many de facto standard features, such as annotation-driven (declarative) programming, CDI, and Java lambdas, depend on this dynamic nature. Transforming bytecode into a native binary from scratch is therefore never an easy path.
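A small reflection example shows why this is hard for a build-time compiler. In the sketch below (all names are hypothetical), the class to instantiate is chosen by a string at run time, so no direct call to `Greeter` appears anywhere in the code. A closed-world build cannot discover such a class by following calls, which is why options like `-H:ReflectionConfigurationFiles` in the command above exist.

```java
import java.lang.reflect.Method;

/** Hypothetical example of a class that is only reachable through reflection. */
final class ReflectionDemo {

    /** Never referenced by a direct call; it is looked up by name at run time. */
    static final class Greeter {
        public String greet() {
            return "hello";
        }
    }

    /** Loads a class by name, creates an instance, and invokes a no-arg method on it. */
    static Object invokeByName(String className, String methodName)
            throws ReflectiveOperationException {
        Class<?> type = Class.forName(className);
        Object instance = type.getDeclaredConstructor().newInstance();
        Method method = type.getMethod(methodName);
        return method.invoke(instance);
    }

    public static void main(String[] args) throws ReflectiveOperationException {
        // In a real application the name would typically come from configuration
        // or an annotation scan; here it is built from a string for the demo.
        String className = args.length > 0
                ? args[0]
                : ReflectionDemo.class.getName() + "$Greeter";
        System.out.println(invokeByName(className, "greet"));
    }
}
```

On the JVM this just works because classes are resolved on demand; in a native build the same lookup only succeeds if the class was registered for reflection at build time.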

In my demo, I used Hibernate and PostgreSQL to build a todo app that works perfectly well in native mode. This is because the Quarkus community has already optimized a large list of libraries and frameworks.

If your app can live within the existing list of Quarkus extensions (i.e., the optimized libraries; I generated the list with Quarkus 1.8.3.Final), then Java AOT for your application is highly feasible and not difficult at all.

Tuning the Configuration of the GraalVM native-image Command

Fortunately, the quarkus-maven-plugin already handles this smoothly. All you need to do is pass -Pnative when running mvn. I would never want to get my hands dirty by writing the native-image command myself.

In summary, Java ahead-of-time compilation looks very promising for improving Java performance. However, it also seems quite challenging for most developers.

Choosing an easy path is very important, and so is evaluating whether your application can be fully transformed into a native executable. I recommend reading https://quarkus.io/guides/building-native-image for more information on building native executables.