Telemetry Pipelines Workshop: Filtering Events with Fluent Bit

Are you ready to get started with cloud-native observability with telemetry pipelines?

This article is part of a series exploring a workshop that guides you through the open source project Fluent Bit: what it is, a basic installation, and setting up your first telemetry pipeline project. Learn how to manage your cloud-native data from source to destination using the telemetry pipeline phases covering collection, aggregation, transformation, and forwarding from any source to any destination.

In a previous article in this series, we explored a use case covering metric collection and processing. In this article, we step back and take a closer look at how to use the filtering phase to modify events, including modifying them conditionally based on values in those events.

You can find more details in the accompanying workshop lab.

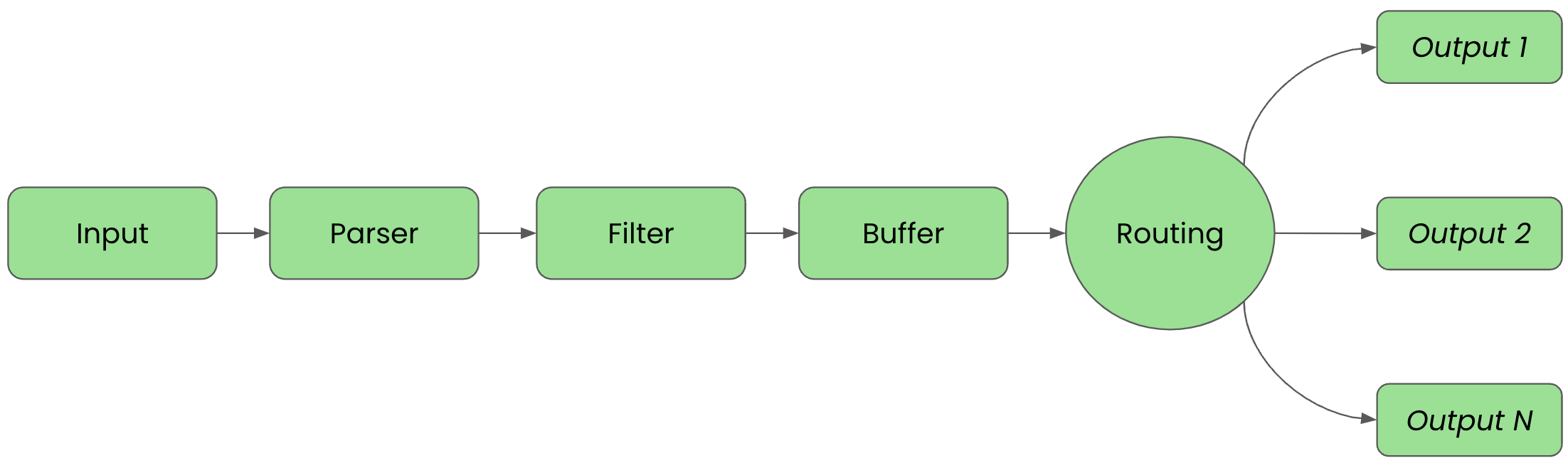

Before we get started, it's important to review the phases of a telemetry pipeline, which the pipeline diagram lays out again. Each incoming event goes from input to parser to filter to buffer to routing before it is sent to its final output destination(s).
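To make the order of these phases concrete, here is a minimal Python sketch that models them as plain functions. It is a conceptual illustration under our own assumptions, not Fluent Bit code: `run_pipeline`, the identity parser, and the route predicates are hypothetical names chosen for this example.

```python
# Conceptual model only (not Fluent Bit code): each event passes through
# parser -> filters -> buffer -> routing -> outputs, in that order.
from typing import Callable, Dict, List, Tuple

Event = Tuple[str, dict]  # (tag, record)


def run_pipeline(
    events: List[Event],
    parser: Callable[[Event], Event],
    filters: List[Callable[[Event], Event]],
    routes: Dict[str, Callable[[str], bool]],
) -> Dict[str, List[Event]]:
    buffer: List[Event] = []
    for event in events:  # input phase: events arrive one at a time
        event = parser(event)  # parser phase
        for apply_filter in filters:  # filter phase, applied in order
            event = apply_filter(event)
        buffer.append(event)  # buffer phase: hold processed events
    delivered: Dict[str, List[Event]] = {name: [] for name in routes}
    for tag, record in buffer:  # routing phase: an event may reach several outputs
        for name, accepts in routes.items():
            if accepts(tag):
                delivered[name].append((tag, record))
    return delivered


# Usage: identity parser, one filter that drops "color", one output accepting every tag.
result = run_pipeline(
    [("workshop.info", {"level": "INFO", "color": "yellow"})],
    parser=lambda e: e,
    filters=[lambda e: (e[0], {k: v for k, v in e[1].items() if k != "color"})],
    routes={"stdout": lambda tag: True},
)
print(result)  # {'stdout': [('workshop.info', {'level': 'INFO'})]}
```

The point of the model is only the ordering: filters see every event before it is buffered and routed, which is why a filter can change what every output receives.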

For clarity in this article, we'll split the configuration into files that are imported into a main Fluent Bit configuration file, which we'll name workshop-fb.conf.

Filtering Basics: Modifying Events

For this example, we're going to add a filter to a pipeline that ingests two generated input events, dropping a key from those incoming events. First, we need to define the generated input events for the INPUT phase, which is done in our configuration file inputs.conf:

```
# This entry generates a test INFO log level message and tags it for the workshop.
[INPUT]
    Name  dummy
    Tag   workshop.info
    Dummy {"message":"This is workshop INFO message", "level":"INFO", "color": "yellow"}

# This entry generates a test ERROR log level message and tags it for the workshop.
[INPUT]
    Name  dummy
    Tag   workshop.error
    Dummy {"message":"This is workshop ERROR message", "level":"ERROR", "color": "red"}
```

See the dummy input plugin documentation for all the details; in short, this plugin generates fake events at a set interval, one second by default. There are three keys used to set up our inputs:

- Name: The name of the plugin to be used.
- Tag: The tag we assign (it can be anything) to help find events of this type in the matching phase.
- Dummy: Where the exact event output can be defined; by default, it just sends `{"message": "dummy"}`.

Our configuration tags each INFO level event with workshop.info and each ERROR level event with workshop.error. It also overrides the default "dummy" message with custom event text.
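As a rough mental model, each dummy input parses its Dummy string as a JSON record and emits it under its tag once per interval. The Python sketch below assumes that behavior; `generate_tick` is a hypothetical helper, and the JSON strings are copied from inputs.conf.

```python
import json

# Dummy strings copied from inputs.conf, keyed by the tag each input assigns.
INPUTS = {
    "workshop.info": '{"message":"This is workshop INFO message", "level":"INFO", "color": "yellow"}',
    "workshop.error": '{"message":"This is workshop ERROR message", "level":"ERROR", "color": "red"}',
}


def generate_tick(inputs: dict) -> list:
    """Hypothetical helper: one (tag, record) pair per input, as if one interval elapsed."""
    return [(tag, json.loads(raw)) for tag, raw in inputs.items()]


events = generate_tick(INPUTS)
for tag, record in events:
    print(tag, record)
```

Every record still carries its color key at this point; removing it is the filter's job.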

Now we'll add a filter that drops a key from the existing generated input events. To do that, we create a new filter file (use your favorite editor) called filters.conf. We remove the color key from all events using the Modify filter plugin. This filter applies to all incoming events and removes the key color if it exists.

```
# This filter is applied to all events, removing the key 'color' if it exists.
[FILTER]
    Name   modify
    Match  *
    Remove color
```
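The effect of `Remove color` can be sketched in Python as dropping one key from each record. This is our own approximation of the documented behavior, not the plugin's source; `remove_key` is a hypothetical name, and the third record is an invented example of an event without the key.

```python
def remove_key(record: dict, key: str) -> dict:
    """Hypothetical helper: copy the record without `key`; records lacking it pass through."""
    return {k: v for k, v in record.items() if k != key}


events = [
    {"message": "This is workshop INFO message", "level": "INFO", "color": "yellow"},
    {"message": "This is workshop ERROR message", "level": "ERROR", "color": "red"},
    {"message": "no color here"},  # hypothetical record without the key
]
filtered = [remove_key(e, "color") for e in events]
for record in filtered:
    print(record)
```

The third record shows the "if it exists" part: an event without a color key is passed along untouched rather than rejected.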

We'll use a simple output configuration that just prints the events to our console over the standard output channel. To do this, we configure our outputs.conf file using our favorite editor:

```
# This entry directs all tags (it matches any we encounter) to print to standard output,
# which is our console.
[OUTPUT]
    Name  stdout
    Match *
```
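The `Match *` line is what makes this output accept every tag. A rough Python approximation of that wildcard check uses shell-style matching from the standard library; `output_accepts` and the narrower `workshop.e*` pattern are hypothetical, included only for contrast.

```python
from fnmatch import fnmatchcase


def output_accepts(match_pattern: str, tag: str) -> bool:
    """Approximate an output's Match check with shell-style wildcards.

    Caveat: fnmatch also understands ? and [...] classes; this sketch does not
    claim Fluent Bit supports those.
    """
    return fnmatchcase(tag, match_pattern)


tags = ["workshop.info", "workshop.error"]
everything = [t for t in tags if output_accepts("*", t)]
errors_only = [t for t in tags if output_accepts("workshop.e*", t)]
print(everything)   # ['workshop.info', 'workshop.error']
print(errors_only)  # ['workshop.error']
```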

With our inputs, outputs, and filtering configured, we set up our main configuration file, workshop-fb.conf, as follows:

```
# Fluent Bit main configuration file.
#
# Imports section.
@INCLUDE inputs.conf
@INCLUDE outputs.conf
@INCLUDE filters.conf
```

To see if our filtering configuration works, we can test run it with our Fluent Bit installation, first using the source installation and then the container version. Below, the source install is run from the directory we created to hold all our configuration files:

```
# source install.
#
$ [PATH_TO]/fluent-bit --config=workshop-fb.conf
```

The console output should look something like this, noting that we've cut out the ASCII logo at start-up:

```
...
[2024/03/05 16:04:15] [ info] [input:dummy:dummy.0] initializing
[2024/03/05 16:04:15] [ info] [input:dummy:dummy.0] storage_strategy='memory' (memory only)
[2024/03/05 16:04:15] [ info] [input:dummy:dummy.1] initializing
[2024/03/05 16:04:15] [ info] [input:dummy:dummy.1] storage_strategy='memory' (memory only)
[2024/03/05 16:04:15] [ info] [output:stdout:stdout.0] worker #0 started
[2024/03/05 16:04:15] [ info] [output:file:file.1] worker #0 started
[2024/03/05 16:04:15] [ info] [output:file:file.2] worker #0 started
[2024/03/05 16:04:15] [ info] [sp] stream processor started
[0] workshop.info: [[1709651056.709431000, {}], {"message"=>"This is workshop INFO message", "level"=>"INFO"}]
[0] workshop.error: [[1709651056.709919000, {}], {"message"=>"This is workshop ERROR message", "level"=>"ERROR"}]
[0] workshop.info: [[1709651057.710029000, {}], {"message"=>"This is workshop INFO message", "level"=>"INFO"}]
[0] workshop.error: [[1709651057.710178000, {}], {"message"=>"This is workshop ERROR message", "level"=>"ERROR"}]
[0] workshop.info: [[1709651058.710925000, {}], {"message"=>"This is workshop INFO message", "level"=>"INFO"}]
[0] workshop.error: [[1709651058.711017000, {}], {"message"=>"This is workshop ERROR message", "level"=>"ERROR"}]
[0] workshop.info: [[1709651059.709075000, {}], {"message"=>"This is workshop INFO message", "level"=>"INFO"}]
[0] workshop.error: [[1709651059.709165000, {}], {"message"=>"This is workshop ERROR message", "level"=>"ERROR"}]
[0] workshop.info: [[1709651060.708977000, {}], {"message"=>"This is workshop INFO message", "level"=>"INFO"}]
[0] workshop.error: [[1709651060.709316000, {}], {"message"=>"This is workshop ERROR message", "level"=>"ERROR"}]
...
```

The alternating INFO and ERROR event lines continue until you exit with CTRL+C. Note that the color key has been removed from every event.
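If you capture the console output, one quick way to double-check the result is to pull the printed keys out of each event line. The Python sketch below assumes the `"key"=>value` layout seen in the sample output; `keys_in_line` is a hypothetical helper, and its regular expression would need adjusting if a value itself contained `"=>`.

```python
import re

# Two lines copied from the console output above.
captured = [
    '[0] workshop.info: [[1709651056.709431000, {}], {"message"=>"This is workshop INFO message", "level"=>"INFO"}]',
    '[0] workshop.error: [[1709651056.709919000, {}], {"message"=>"This is workshop ERROR message", "level"=>"ERROR"}]',
]

# Matches a quoted key immediately followed by the => separator used by the stdout output.
KEY_PATTERN = re.compile(r'"([^"]+)"=>')


def keys_in_line(line: str) -> set:
    """Hypothetical helper: return the record keys printed on one stdout line."""
    return set(KEY_PATTERN.findall(line))


for line in captured:
    keys = keys_in_line(line)
    print(sorted(keys), "color" in keys)  # ['level', 'message'] False
```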

Let's now test our configuration by running it in a container image. We need to add our filter configuration file to the Buildfile we created earlier, so that building a new container image also copies in all of our configuration files:

```
FROM cr.fluentbit.io/fluent/fluent-bit:3.0.1

COPY ./workshop-fb.conf /fluent-bit/etc/fluent-bit.conf
COPY ./inputs.conf /fluent-bit/etc/inputs.conf
COPY ./outputs.conf /fluent-bit/etc/outputs.conf
COPY ./filters.conf /fluent-bit/etc/filters.conf
```

Using the adjusted Buildfile, build a new container image, giving it a new version tag, as follows:

```
$ podman build -t workshop-fb:v3 -f Buildfile
STEP 1/5: FROM cr.fluentbit.io/fluent/fluent-bit:3.0.1
STEP 2/5: COPY ./workshop-fb.conf /fluent-bit/etc/fluent-bit.conf
--> f996832fc565
STEP 3/5: COPY ./inputs.conf /fluent-bit/etc/inputs.conf
--> a027909c37d3
STEP 4/5: COPY ./outputs.conf /fluent-bit/etc/outputs.conf
--> 4e60000963dd
STEP 5/5: COPY ./filters.conf /fluent-bit/etc/filters.conf
COMMIT workshop-fb:v3
--> 335c52958686
Successfully tagged localhost/workshop-fb:v3
335c52958686cccabaf4807341607f6e9c287d3c613f1f2cc1d0c8401254c92b
```

Now we'll run our new container image. This pipeline only prints events to the console over standard output, so there are no log files to inspect and no need to mount a local directory into the container:

```
$ podman run workshop-fb:v3
```

The output looks exactly like the source output above, just with different timestamps, and the color key has been removed. Again, you can stop the container using CTRL+C.

Filtering Basics: Conditionally Modifying Events

For this section, we'll take the above configuration and conditionally modify selected events, removing one key and adding a new one. This is again done in the filtering phase using the existing generated input events.

To do that, we modify our previous filter file, filters.conf (use your favorite editor). Still using the Modify filter plugin, we add a second filter with a condition that, when met, removes one key and adds another:

```
# This filter is applied to all events and removes the key 'color' if it exists.
[FILTER]
    Name   modify
    Match  *
    Remove color

# This filter conditionally modifies events that match.
[FILTER]
    Name      modify
    Match     *
    Condition Key_Value_Equals level ERROR
    Remove    level
    Add       workshop_status BROKEN
```
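To see why the output changes the way it does, the two filters can be sketched in Python as functions applied in configuration order. This is our own model of the Modify rules, not the plugin's code: we assume `Key_Value_Equals` is true only when the key exists with exactly that value, and that `Add` only sets a key that is not already present. The function names are hypothetical.

```python
def remove_color(record: dict) -> dict:
    # First [FILTER]: Match *, Remove color (no-op when the key is absent).
    return {k: v for k, v in record.items() if k != "color"}


def mark_errors(record: dict) -> dict:
    # Second [FILTER]: Condition Key_Value_Equals level ERROR.
    if record.get("level") != "ERROR":
        return record  # condition not met: the record passes through unchanged
    # Condition met: Remove level, then Add workshop_status BROKEN.
    updated = {k: v for k, v in record.items() if k != "level"}
    updated.setdefault("workshop_status", "BROKEN")  # Add only sets a key that is absent
    return updated


events = [
    {"message": "This is workshop INFO message", "level": "INFO", "color": "yellow"},
    {"message": "This is workshop ERROR message", "level": "ERROR", "color": "red"},
]

results = []
for record in events:
    for apply_filter in (remove_color, mark_errors):  # filters run in configuration order
        record = apply_filter(record)
    results.append(record)
    print(record)
```

The two printed records mirror the event lines in the console output: INFO events keep their level key, while ERROR events lose it and gain workshop_status.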

Using either the source or container version as shown above, we run our filtered pipeline, and the console output should look something like this, noting that we've cut out the ASCII logo at start-up:

```
...
[2024/04/15 20:01:49] [ info] [input:dummy:dummy.0] initializing
[2024/04/15 20:01:49] [ info] [input:dummy:dummy.0] storage_strategy='memory' (memory only)
[2024/04/15 20:01:49] [ info] [input:dummy:dummy.1] initializing
[2024/04/15 20:01:49] [ info] [input:dummy:dummy.1] storage_strategy='memory' (memory only)
[2024/04/15 20:01:49] [ info] [output:stdout:stdout.0] worker #0 started
[2024/04/15 20:01:49] [ info] [output:file:file.1] worker #0 started
[2024/04/15 20:01:49] [ info] [output:file:file.2] worker #0 started
[2024/04/15 20:01:49] [ info] [sp] stream processor started
[0] workshop.info: [[1713204110.187426000, {}], {"message"=>"This is workshop INFO message", "level"=>"INFO"}]
[0] workshop.error: [[1713204110.189145000, {}], {"message"=>"This is workshop ERROR message", "workshop_status"=>"BROKEN"}]
[0] workshop.info: [[1713204111.187644000, {}], {"message"=>"This is workshop INFO message", "level"=>"INFO"}]
[0] workshop.error: [[1713204111.187957000, {}], {"message"=>"This is workshop ERROR message", "workshop_status"=>"BROKEN"}]
[0] workshop.info: [[1713204112.183134000, {}], {"message"=>"This is workshop INFO message", "level"=>"INFO"}]
[0] workshop.error: [[1713204112.183361000, {}], {"message"=>"This is workshop ERROR message", "workshop_status"=>"BROKEN"}]
[0] workshop.info: [[1713204113.183987000, {}], {"message"=>"This is workshop INFO message", "level"=>"INFO"}]
[0] workshop.error: [[1713204113.184215000, {}], {"message"=>"This is workshop ERROR message", "workshop_status"=>"BROKEN"}]
...
```


Note that the color key has been removed from all events, and that events whose level key was set to ERROR have had that key removed and replaced with a new workshop_status key set to BROKEN.

This completes our use cases for this article; be sure to explore this hands-on experience with the accompanying workshop lab.

What's Next?

This article walked us through how to use filtering with Fluent Bit to modify events and, when needed, to base that filtering on conditions in the events. The series continues with the next step, where we'll explore a use case dealing with back-pressure in our telemetry pipelines.

Stay tuned for more hands-on material to help you with your cloud-native observability journey.