A Filebeat Tutorial: Getting Started

The ELK Stack is no longer the ELK Stack; it is being renamed the Elastic Stack. The reason is simple: it has incorporated a fourth component on top of Elasticsearch, Logstash, and Kibana. That component is Beats, a family of log shippers for different use cases and sets of data.

Filebeat is probably the most popular and commonly used member of this family, and this article seeks to give those getting started with it the tools and knowledge they need to install, configure, and run it to ship data into the other components in the stack.

What Is Filebeat?

Filebeat is a log shipper belonging to the Beats family: a group of lightweight shippers installed on hosts for shipping different kinds of data into the ELK Stack for analysis. Each beat is dedicated to shipping different types of information. Winlogbeat, for example, ships Windows event logs, Metricbeat ships host metrics, and so forth. Filebeat, as the name implies, ships log files.

In an ELK-based logging pipeline, Filebeat plays the role of the logging agent — installed on the machine generating the log files, tailing them, and forwarding the data to either Logstash for more advanced processing or directly into Elasticsearch for indexing. Filebeat is, therefore, not a replacement for Logstash, but it can (and should in most cases) be used in tandem. You can read more about the story behind the development of Beats and Filebeat in this article.

Written in Go and based on the Lumberjack protocol, Filebeat was designed to have a low memory footprint, handle large bulks of data, support encryption, and deal efficiently with back pressure. For example, Filebeat records in its registry the last line that was successfully indexed, so in case of network issues or interruptions in transmission, Filebeat will remember where it left off when re-establishing a connection. If there is an ingestion issue with the output (Logstash or Elasticsearch), Filebeat will slow down the reading of files.

Installing Filebeat

Filebeat can be downloaded and installed using various methods and on a variety of platforms. It only requires that you have a running ELK stack to be able to ship the data collected by Filebeat. I will outline two methods, using Apt and Docker, but you can refer to the official docs for more options.

Install Filebeat Using Apt

For an easier way of updating to a newer version, and depending on your Linux distro, you can use Apt or Yum to install Filebeat from Elastic's repositories.

First, you need to add Elastic's signing key so that the downloaded package can be verified (skip this step if you've already installed packages from Elastic):

wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

The next step is to add the repository definition to your system:

echo "deb https://artifacts.elastic.co/packages/6.x/apt stable main" | sudo tee -a /etc/apt/sources.list.d/elastic-6.x.list

All that's left to do is to update your repositories and install Filebeat:

sudo apt-get update && sudo apt-get install filebeat

Install Filebeat on Docker

If you're running Docker, you can install Filebeat as a container on your host and configure it to collect container logs or log files from your host.

Pull Elastic's Filebeat image with:

docker pull docker.elastic.co/beats/filebeat:6.2.3

Configuring Filebeat

Filebeat is pretty easy to configure, and the good news is that if you've configured one beat, you can be pretty sure you'll know how to configure the next — they all follow the same configuration setup.

Filebeat is configured using a YAML configuration file. On Linux, this file is located at /etc/filebeat/filebeat.yml. On Docker, you will find it at /usr/share/filebeat/filebeat.yml.

YAML is syntax-sensitive. You cannot, for example, use tabs for indentation. A number of additional best practices that will help you avoid mistakes are described in this article.

Filebeat offers rich configuration options. In most cases, you can make do with default or very basic configurations. It's good practice to refer to the example filebeat.reference.yml configuration file (located in the same directory as the filebeat.yml file), which contains all the available options.

Let's take a look at some of the main components that you will most likely use when configuring Filebeat.

Filebeat Prospectors

The main configuration units in Filebeat are the prospectors. Prospectors are responsible for locating specific files and applying basic processing to them.

The main configuration applied to prospectors is the path (or paths) to the file you want to track, but you can use additional configuration options such as defining the input type and the encoding to use for reading the file, excluding and including specific lines, adding custom fields, and more.

You can configure a prospector to track multiple files or define multiple prospectors in case you have prospector-specific configurations you want to apply.

filebeat.prospectors:
- type: log
  paths:
    - "/var/log/apache2/*"
  fields:
    apache: true

This example defines a single prospector for tracking Apache access and error logs, using the wildcard * character to include all files under the apache2 directory and adding a field called apache to the messages. Note that prospectors are a YAML array and so begin with a -.
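As a sketch of the additional options mentioned above, the configuration below defines two prospectors with prospector-specific settings. The option names (exclude_files, exclude_lines, include_lines, encoding) are standard prospector options in Filebeat 6.x, but the patterns and the log_kind field are hypothetical values chosen for illustration, so adapt them to your own logs.

```yaml
filebeat.prospectors:
# Prospector 1: Apache access logs.
- type: log
  paths:
    - "/var/log/apache2/access.log*"
  exclude_files: ['\.gz$']          # skip compressed, rotated files
  exclude_lines: ['healthcheck']    # hypothetical pattern: drop matching lines
  fields:
    apache: true
    log_kind: access                # hypothetical custom field

# Prospector 2: Apache error logs, with their own settings.
- type: log
  paths:
    - "/var/log/apache2/error.log*"
  exclude_files: ['\.gz$']
  include_lines: ['error', 'crit']  # hypothetical patterns: keep only matching lines
  encoding: utf-8
  fields:
    apache: true
    log_kind: error
```

Splitting the files across two prospectors is only worthwhile when the settings differ. If both sets of files need identical handling, a single prospector with two paths is simpler.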

Filebeat Processors

While not as powerful and robust as Logstash, Filebeat can apply basic processing and data enhancements to log data before forwarding it to the destination of your choice. You can decode JSON strings, drop specific fields, add various metadata (e.g. Docker, Kubernetes), and more.
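To make this more concrete, here is a minimal sketch of a processor chain that decodes JSON, drops events, and drops fields, with two of the processors guarded by a condition (a when clause). It is written as a top-level processors section, which is an assumption made for brevity. The "^DBG:" pattern and the "access" substring are hypothetical, and the source field (the path of the file the line was read from) is assumed to be present, as it is in Filebeat 6.x.

```yaml
processors:
  # Parse the JSON string held in the "message" field and merge
  # the decoded keys into the root of the event.
  - decode_json_fields:
      fields: ["message"]
      target: ""

  # Drop whole events whose message starts with a debug marker
  # (hypothetical pattern).
  - drop_event:
      when:
        regexp:
          message: "^DBG:"

  # Drop two fields, but only for events read from a file whose
  # path contains "access" (hypothetical condition).
  - drop_fields:
      when:
        contains:
          source: "access"
      fields: ["verb", "id"]
```

Processors run in the order in which they are listed, so the decoding step comes first here and the later steps can act on the decoded fields.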

Processors are defined in the Filebeat configuration file per prospector. You can define rules to apply your processing using conditional statements. Below is an example using the drop_fields processor for dropping some fields from Apache access logs:

filebeat.prospectors:
- type: log
  paths:
    - "/var/log/apache2/access.log"
  fields:
    apache: true
  processors:
    - drop_fields:
        fields: ["verb","id"]

Filebeat Output

This section in the Filebeat configuration file defines where you want to ship the data to. There is a wide range of supported output options, including console, file, cloud, Redis, and Kafka, but in most cases you will be using the Logstash or Elasticsearch output types.

Use the Logstash output when you need more advanced processing and data enhancement. When your data is already well structured (JSON documents, for example), you might make do with shipping directly to your Elasticsearch cluster.

You can define multiple hosts for an output and use the load balancing option to balance the forwarding of data between them.

For forwarding logs to Elasticsearch:

output.elasticsearch:
  hosts: ["localhost:9200"]

For forwarding logs to Logstash:

output.logstash:
  hosts: ["localhost:5044"]

For forwarding logs to two Logstash instances:

output.logstash:
  hosts: ["localhost:5044", "localhost:5045"]
  loadbalance: true

Logz.io Filebeat Wizard

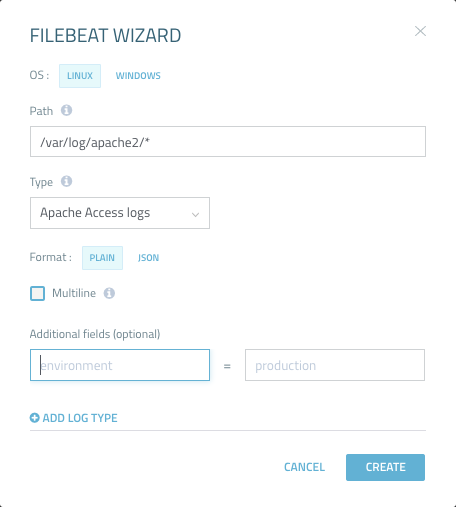

To allow users to easily define their Filebeat configuration file and avoid common syntax errors, Logz.io provides a Filebeat Wizard that produces an automatically formatted YAML file. The wizard can be accessed via the Log Shipping > Filebeat page.

In the wizard, users enter the path to the log file they want to ship and the log type. There are additional options that can be used, such as entering a REGEX pattern for multiline logs and adding custom fields.

Non-Logz.io users can make use of the wizard, as well. They simply need to remove the Logz.io specific fields from the generated YAML file.
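For readers writing the file by hand, the multiline and custom field options mentioned above might look roughly like the sketch below. The multiline.* and fields_under_root options are standard prospector settings, but the path, the date pattern, and the env field are hypothetical. The pattern assumes that every new log entry starts with a date such as 2018-04-01.

```yaml
filebeat.prospectors:
- type: log
  paths:
    - "/var/log/myapp/app.log"      # hypothetical path
  # Any line that does NOT start with a date is treated as a
  # continuation (a stack trace line, for example) and is appended
  # to the line before it.
  multiline.pattern: '^[0-9]{4}-[0-9]{2}-[0-9]{2}'
  multiline.negate: true
  multiline.match: after
  fields:
    env: staging                    # hypothetical custom field
  # Place custom fields at the top level of the event instead of
  # under a "fields" key.
  fields_under_root: true
```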

Configuring Filebeat on Docker

The most commonly used method of configuring Filebeat when running it as a Docker container is to bind-mount a configuration file when running the container.

Create the configuration file on the host, following the guidelines above. The example below provides a basic configuration for shipping the logs of Docker containers running on the same host to a locally running instance of Elasticsearch.

filebeat.prospectors:
- type: log
  paths:
    - '/var/lib/docker/containers/*/*.log'
  json.message_key: log
  json.keys_under_root: true
  processors:
    - add_docker_metadata: ~

output.elasticsearch:
  hosts: ["localhost:9200"]

Running Filebeat

Depending on how you installed Filebeat, enter the following commands to start Filebeat.

Apt

Start the Filebeat service with:

sudo service filebeat start

Docker

Run the Filebeat container with your configuration file bind-mounted into it (you can, of course, achieve the same thing by building your own image from a Dockerfile and running it). Be sure you have the correct permissions to connect to the Docker daemon:

sudo docker run -v /etc/filebeat/filebeat.yml:/usr/share/filebeat/filebeat.yml docker.elastic.co/beats/filebeat:6.2.3

Filebeat Modules

Filebeat modules are ready-made configurations for common log types such as Apache, Nginx, and MySQL logs that can be used to simplify the process of configuring Filebeat, parsing the data, and analyzing it in Kibana with ready-made dashboards.

A list of the different configurations per module can be found in the /etc/filebeat/modules.d folder (on Linux). Modules are disabled by default and need to be enabled. There are various ways of enabling modules, one way being from your Filebeat configuration file:

filebeat.modules:
- module: apache2

Filebeat modules are currently a bit difficult to use since they require using Elasticsearch Ingest Node, and some specific modules have additional dependencies that need to be installed and configured.
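A slightly fuller module definition might look like the sketch below. It assumes Filebeat 6.x, where the Apache module is named apache2, and overrides the default log locations with var.paths. The paths shown are typical Debian or Ubuntu locations and are an assumption about your host. Because modules rely on Ingest Node pipelines, the sketch ships directly to Elasticsearch.

```yaml
filebeat.modules:
- module: apache2
  # Access log fileset.
  access:
    enabled: true
    var.paths: ["/var/log/apache2/access.log*"]   # assumed location
  # Error log fileset.
  error:
    enabled: true
    var.paths: ["/var/log/apache2/error.log*"]    # assumed location

output.elasticsearch:
  hosts: ["localhost:9200"]
```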

What's Next?

Filebeat is an efficient, reliable, and relatively easy-to-use log shipper, and complements the functionality supported in the other components in the stack. To make the best of Filebeat, be sure to read our other Elasticsearch, Logstash, and Kibana tutorials.

As with any piece of software, there are some pitfalls worth knowing about before starting out. These are listed in our Filebeat Pitfalls article. Tips on writing YAML configuration files can be found in the Musings in YAML article.

Enjoy!