Exploring the Sidecar Pattern in Cloud-Native Architecture

Distributed services have indeed revolutionized the design and deployment of applications in the modern world of cloud-native architecture: flexibility, scalability, and resilience are provided by these autonomous, loosely coupled services. This also means that services add complexity to our systems, especially with cross-cutting concerns such as logging, monitoring, security, and configuration. As a fundamental design concept, the sidecar pattern enhances the distributed architecture in a seamless and scalable manner.

Throughout this article, we explore what the sidecar pattern offers, its use cases, and why it has become so widely used in cloud-native environments.

What Is the Sidecar Pattern?

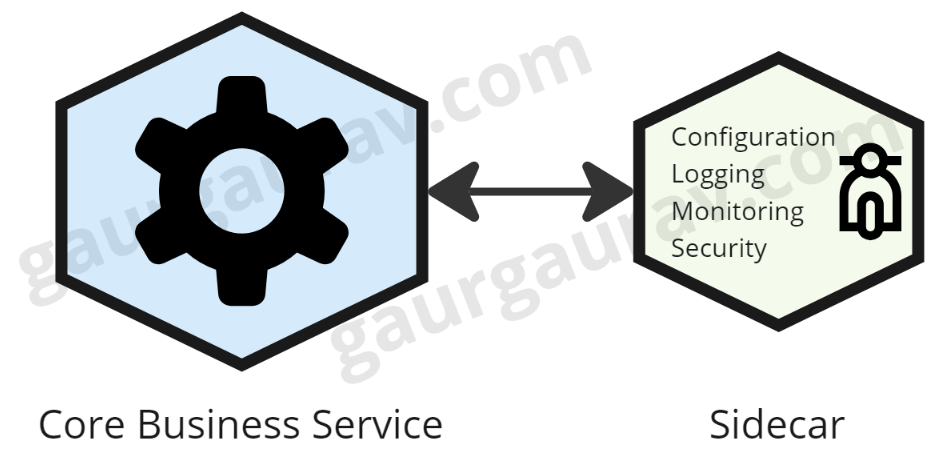

The sidecar pattern describes a design that deploys an auxiliary service — a sidecar — alongside the container of a primary application. It would run in its own container or process but would share the same context with the primary application, such as network and storage. The objective here is to offload non-core business logic functionality — security, logging, or configuration — to this auxiliary container and let the primary service focus on the core application logic.

Think of it as attaching a sidecar to a motorcycle. The motorcycle is your app, and the sidecar provides support without getting in the way of the motorcycle's operation.

Why Use the Sidecar Pattern?

The sidecar pattern offloads non-core functionalities such as authentication, logging, or configuration into a separate component. That will ensure that your main service has only one concern: business logic; thus, it will be easier to maintain and test.

Moreover, sidecars do not depend on the main application's language or technology stack. This allows one to standardize concerns across multiple services written in any language. Once a sidecar has been written, it can be reused across many services, which ensures its functionalities would remain consistent. For instance, a logging sidecar applied to multiple microservices would result in common log formatting and delivery.

Since these sidecars can take care of logging, tracing, or metrics gathering quite independently, they will indeed provide a clean way to inject observability into the services without touching their business logic. This grants much more visibility and better troubleshooting. Finally, this update in the logic of a sidecar-such as upgrading a security feature-doesn't need to make changes to the main application. This provides greater agility while reducing downtime, at least in large distributed systems. Thus, sidecars allow achieving:

- Separation of concerns

- Modular and reusable components

- Improved observability

- Allows easier service updates

Key Use Cases for the Sidecar Pattern

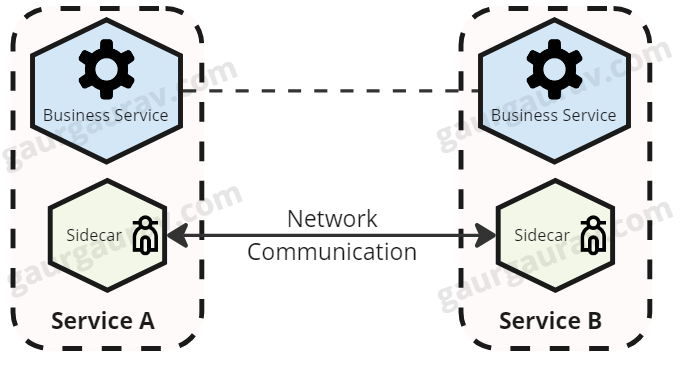

Service Meshes

One of the most well-known usages of the sidecar pattern is service meshes, such as Istio or Linkerd. The sidecar proxy (Envoy, for example) manages networking concerns such as routing, load balancing, retries, and even security between services — for example, mutual TLS. The sidecar provides a transparent layer of control without changing application code.

Security Enhancements

Various security policies could be implemented via sidecars, including secret management, certificate rotation, or data encryption. As a specific example, mutual authentication between services can be handled by a sidecar, keeping sensitive data transmissions secure.

Monitoring and Logging

Centralized logging can run in sidecar containers, such as Fluentd or Logstash, which collect and forward logs to a central server, abstracting log management from the application. Similarly, a monitoring sidecar exposes an application's metrics to a monitoring system like Prometheus.

Configuration Management

One of the use cases for sidecars is to dynamically load and inject configuration data into the main application. This is useful when configurations need to change at runtime and without restarting the main service.

Things To Consider When Using Sidecar Pattern

While the sidecar pattern enjoys several advantages, it's equally important to be aware of what trade-offs it makes:

- Resource overhead: Sidecars consume CPU, memory, and networking resources. Multiple sidecars performing different tasks, such as logging or monitoring, will increase resource consumption.

- Operational complexity: Running sidecars for many services is an operational task and a challenge. Much like the main services, sidecars need to be correctly deployed, updated, and monitored.

- Network latency: Since most of the sidecars interact over the network, proxy sidecars, for instance, could introduce additional network latency. Often negligible, but an important consideration where performance is sensitive.

Best Practices Applying the Sidecar Pattern

- Sidecars share the same resources as the main container, it is good practice to keep sidecar processes lightweight to reduce contention on resources.

- Establish sidecars for cross-cutting concerns like logging, security, and configuration. Core business logic should not go into the sidecar since this can cause tight coupling between the application and the sidecar.

- Like application services, monitor the resource consumption of your sidecars, for example failure rates and performance degradations.

- If using service mesh technologies that depend on sidecars, ensure that the benefits brought by sidecar injection, such as observability and security, justify the increased operational complexity.

Conclusion

Offloading cross-cutting concerns to sidecars creates more modular, reusable, and maintainable services. As with any pattern of architecture, one needs to balance benefits against potential overhead and the level of complexity it introduces. Used correctly, the sidecar can greatly simplify distributed architecture while retaining flexibility and scalability.

As the cloud-native architecture continues to evolve, the sidecar pattern will undoubtedly remain an important strategy for dealing with the increasing complexity of distributed systems.