How to Build a Serverless WebSockets Platform

Modern web applications increasingly need to handle real-time data, using an event-driven architecture that propagates messages instantly to all connected clients.

Several protocols can deliver real-time updates, but WebSocket is arguably the most widely used because it is designed for minimal overhead and low latency. The WebSocket protocol supports bidirectional, full-duplex communication between client and server over a single, persistent connection. With a WebSocket connection, you can eliminate polling and push updates to a client as soon as an event occurs.

How to Integrate WebSockets Into Your Stack

You could implement an event-driven architecture by setting up a dedicated WebSocket server that clients connect to and receive updates from. However, this architecture has several drawbacks, including the need to manage and scale the server yourself and the inherent latency of sending updates from that server to clients who may be distributed worldwide.

Rather than buying and scaling hardware across the globe yourself, you can avoid the responsibility and cost of ownership by renting capacity from a cloud service provider. For example, you could run your WebSocket server on a managed service such as Amazon ECS, or you could opt for a serverless WebSocket solution.

"A serverless application costs you nothing to run when nobody is using it, excluding data storage costs." Cloud 2.0: Code is no longer King - Serverless has dethroned it

Saving on operational costs is an excellent reason to consider a serverless framework: you reap the benefits of scale and elasticity, but you don't have to provision or manage the servers.

This article explains how to build a basic serverless WebSockets platform, ideal for simple applications such as chat, using AWS API Gateway to create a WebSocket endpoint and AWS Lambda for connection management and backend business logic.

The Basic Architecture of a Serverless WebSockets Platform

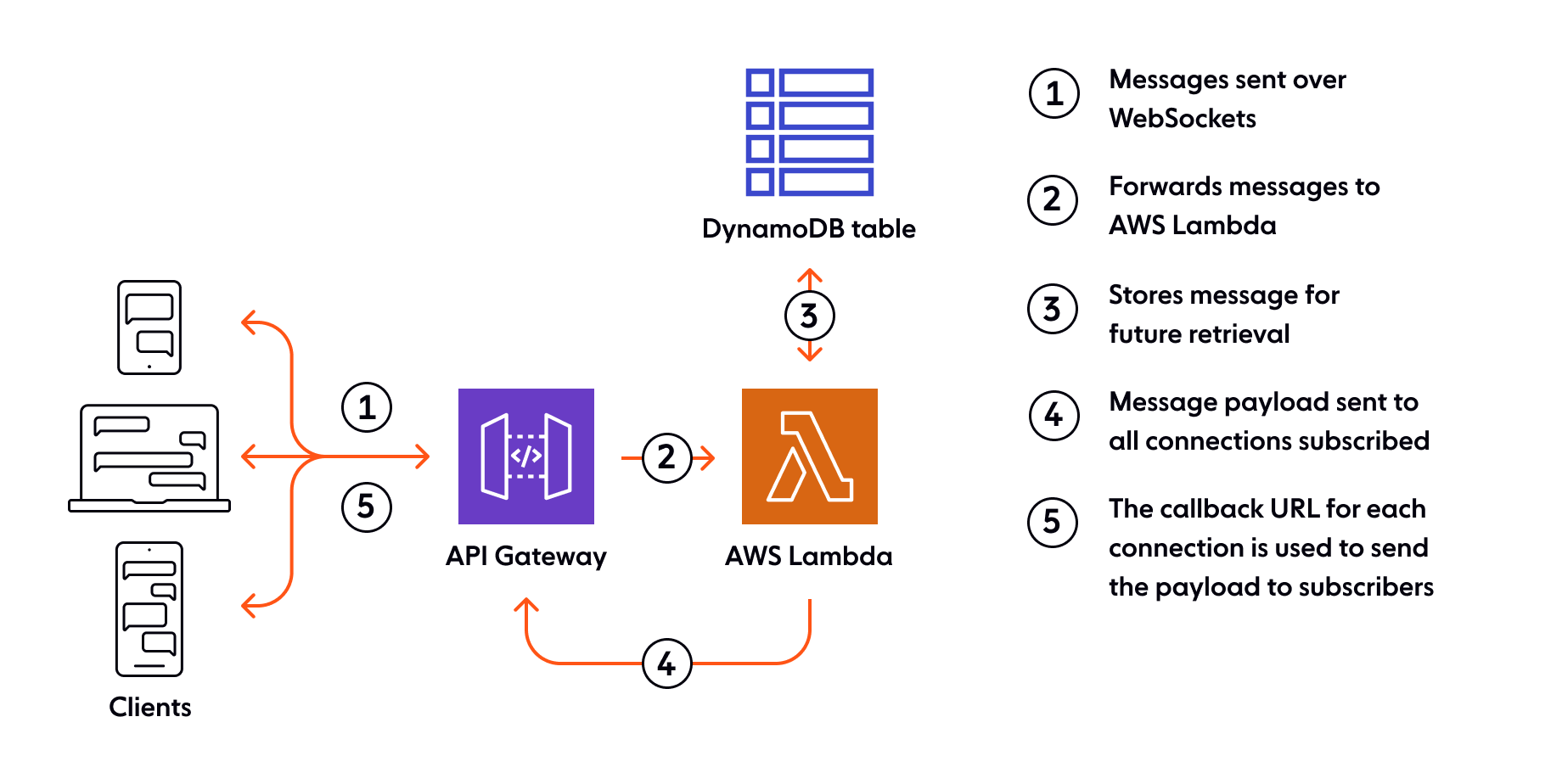

Building a simple serverless WebSockets platform is relatively straightforward: AWS API Gateway provides the WebSocket API, Lambda functions handle backend processing, and Amazon DynamoDB stores connection and message metadata.

AWS API Gateway

AWS API Gateway is a service to create and manage APIs. You can use it as a stateful proxy to terminate persistent WebSocket connections and transfer data between your clients and HTTP-based backends such as AWS Lambda, Amazon Kinesis, or any other HTTP endpoint.

AWS Lambda

AWS Lambda is a serverless computing service that allows you to run code without provisioning or managing servers.

A WebSocket API can invoke Lambda functions, for example to pass a message payload on for further processing and then return the result to the client. Each Lambda function runs only as long as necessary to handle an individual task. To authorize clients, the connection request must include credentials, which a Lambda authorizer function verifies before returning the appropriate AWS IAM policy for access rights.
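
A Lambda authorizer for a WebSocket API is a REQUEST authorizer attached to the `$connect` route, and it returns an IAM policy that allows or denies `execute-api:Invoke` on the route's `methodArn`. The sketch below is a minimal illustration, assuming the client passes a `token` query-string parameter; the `TOKENS` table and its contents are hypothetical stand-ins for a real credential check, such as verifying a signed token.

```python
from typing import Any, Dict

# Hypothetical credential store; a real authorizer would verify a signed token instead.
TOKENS: Dict[str, Dict[str, str]] = {
    "demo-token": {"user": "alice", "channels": "general,support"},
}


def authorizer_handler(event: Dict[str, Any], context: Any = None) -> Dict[str, Any]:
    """REQUEST authorizer: allow or deny the connection with an IAM policy."""
    params = event.get("queryStringParameters") or {}
    grant = TOKENS.get(params.get("token", ""))
    effect = "Allow" if grant else "Deny"
    response: Dict[str, Any] = {
        "principalId": grant["user"] if grant else "anonymous",
        "policyDocument": {
            "Version": "2012-10-17",
            "Statement": [
                {
                    "Action": "execute-api:Invoke",
                    "Effect": effect,
                    # methodArn identifies the $connect route being invoked.
                    "Resource": event["methodArn"],
                }
            ],
        },
    }
    if grant:
        # Context values must be strings, numbers, or booleans, so channels travel as CSV.
        response["context"] = {"channels": grant["channels"]}
    return response
```

Passing the permitted channels in the authorizer's `context` lets downstream handlers read them without repeating the credential lookup.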

Example: Chat App Powered By Serverless WebSockets

As is traditional, let's look at an example chat app to illustrate how the serverless WebSockets solution powers a basic real-time application. First, consider the sequence by which a client WebSocket connection is made and stored.

- A client device establishes a single WebSocket connection to AWS API Gateway. Each active WebSocket connection has an individual callback URL in API Gateway that is used to push messages back to the corresponding client.

- API Gateway passes the connection request to a Lambda authorizer function, which confirms that the device has the correct credentials to connect to the service.

- Once the request is authorized, API Gateway sends the connection message and its metadata to a separate Lambda function.

- The Lambda handler inspects the metadata and stores the relevant information in Amazon DynamoDB, a NoSQL key-value and document database.
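
The `$connect` handler in this sequence can be sketched as follows. It reads the connection ID from `requestContext.connectionId` and, assuming the client supplies a comma-separated `channels` query-string parameter (a convention chosen for this example), records which channels the connection subscribes to. Two in-memory dictionaries are hypothetical stand-ins for DynamoDB tables so the sketch runs locally; a real handler would write equivalent items with the DynamoDB SDK, for example boto3's `put_item`.

```python
from typing import Any, Dict, Set

# In-memory stand-ins for two DynamoDB tables (hypothetical layout for this sketch).
CONNECTIONS: Dict[str, Set[str]] = {}  # connection ID -> subscribed channels
SUBSCRIBERS: Dict[str, Set[str]] = {}  # channel -> connection IDs


def connect_handler(event: Dict[str, Any], context: Any = None) -> Dict[str, Any]:
    """Handle the $connect route by storing the connection's channel subscriptions."""
    connection_id = event["requestContext"]["connectionId"]
    params = event.get("queryStringParameters") or {}
    channels = {c.strip() for c in params.get("channels", "").split(",") if c.strip()}
    if not channels:
        # A non-2xx response from the $connect route rejects the connection.
        return {"statusCode": 400, "body": "no channels requested"}
    CONNECTIONS[connection_id] = channels
    for channel in channels:
        SUBSCRIBERS.setdefault(channel, set()).add(connection_id)
    return {"statusCode": 200}
```

Keeping a channel-to-connections index alongside the per-connection record makes the later "who is subscribed to this channel?" lookup a single read instead of a scan.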

In a chat app, users can take part in many conversations, each with one or more participants. This is a typical use case for the publish/subscribe (pub/sub) pattern. When a user sends a message to a particular conversation or channel, the chat app publishes the message to all other users subscribed to that channel, and each subscriber receives the message in their app.

When the chat app initiates a WebSocket connection to AWS API Gateway, there needs to be a way to determine the channels for which that connection is authorized to publish and subscribe. The client code must send this information within the connection metadata.
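
The browser WebSocket API does not let client code set custom headers on the opening handshake, so one common approach is to carry credentials and channel names as query-string parameters. This sketch builds such a URL; the `token` and `channels` parameter names are assumptions for this example, and the host follows API Gateway's `wss://{api-id}.execute-api.{region}.amazonaws.com/{stage}` pattern with placeholder values.

```python
from typing import Iterable
from urllib.parse import urlencode


def build_connect_url(api_id: str, region: str, stage: str,
                      token: str, channels: Iterable[str]) -> str:
    """Build the WebSocket URL a client opens, carrying its connection metadata."""
    query = urlencode({"token": token, "channels": ",".join(channels)})
    return f"wss://{api_id}.execute-api.{region}.amazonaws.com/{stage}?{query}"


# Placeholder values; substitute your own API ID, region, and stage.
url = build_connect_url("abc123", "eu-west-1", "prod", "demo-token", ["general", "support"])
print(url)
```

Because query strings can end up in access logs, a short-lived token is preferable to a long-lived secret here.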

Now let's consider the point at which the user sends a message and another user receives it:

- A client sends a chat message, including metadata that identifies the channel on which to publish.

- On receipt, API Gateway sends it to the appropriate Lambda function.

- When invoked, the Lambda function looks up the connections subscribed to that channel and, for each subscriber, sends API Gateway the connection ID and the message payload.

- API Gateway uses the callback URLs for each subscriber connection to send the payload to the client on the established WebSocket connection.

- When a client disconnects, API Gateway invokes a Lambda function that removes the connection's records from the datastore.
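
The message-routing and disconnect steps can be sketched like this. The handler reads the target channel from the message body (the `channel` and `text` fields are an assumed message format), looks up that channel's subscribers, and pushes the payload to each one through its `@connections` callback URL, built from `requestContext.domainName` and `requestContext.stage`. Sending is injected as a `post` function so the sketch runs without AWS; in a deployed Lambda it might be a signed HTTP POST to the callback URL, or a call to `post_to_connection(ConnectionId=..., Data=...)` on a boto3 `apigatewaymanagementapi` client, with that client's `GoneException` translated into the hypothetical `StaleConnection` error used here.

```python
import json
from typing import Any, Callable, Dict, Set

Subscriptions = Dict[str, Set[str]]  # channel -> connection IDs


class StaleConnection(Exception):
    """Raised by `post` when the target connection no longer exists."""


def callback_url(event: Dict[str, Any], connection_id: str) -> str:
    """API Gateway callback URL for pushing data to one connection."""
    ctx = event["requestContext"]
    return f"https://{ctx['domainName']}/{ctx['stage']}/@connections/{connection_id}"


def remove_connection(subs: Subscriptions, connection_id: str) -> None:
    """Drop a connection from every channel it was subscribed to."""
    for members in subs.values():
        members.discard(connection_id)


def send_message_handler(event: Dict[str, Any], subs: Subscriptions,
                         post: Callable[[str, bytes], None]) -> Dict[str, Any]:
    """Fan a chat message out to every other subscriber of its channel."""
    sender = event["requestContext"]["connectionId"]
    body = json.loads(event["body"])
    payload = json.dumps({"channel": body["channel"], "text": body["text"]}).encode()
    delivered = 0
    # Iterate over a sorted copy, because remove_connection mutates the sets.
    for connection_id in sorted(subs.get(body["channel"], set())):
        if connection_id == sender:
            continue
        try:
            post(callback_url(event, connection_id), payload)
            delivered += 1
        except StaleConnection:
            remove_connection(subs, connection_id)
    return {"statusCode": 200, "body": json.dumps({"delivered": delivered})}


def disconnect_handler(event: Dict[str, Any], subs: Subscriptions) -> Dict[str, Any]:
    """Handle the $disconnect route by cleaning up the connection's records."""
    remove_connection(subs, event["requestContext"]["connectionId"])
    return {"statusCode": 200}
```

Removing connections that turn out to be gone during a send is a cheap safety net, since it catches records that the disconnect handler never got a chance to clean up.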

The Limitations of Basic Serverless WebSockets

The example above is a simple real-time, event-driven app built on serverless WebSockets. Some of its limitations include:

Connection state tracking doesn't scale: AWS API Gateway doesn't track connection metadata, so a Lambda function must store it in a database such as Amazon DynamoDB, updating the store on every connection and disconnection. With large numbers of clients connecting, you'd hit Lambda scaling limits. You could instead configure an AWS service integration in API Gateway to call DynamoDB directly, but you'll need to be mindful of the resulting high volume of database operations.

Abrupt disconnections are another issue we haven't addressed. When a client connection drops without warning, its records may not be cleaned up properly, so the database can end up storing identifiers for connections that no longer exist. These stale entries waste resources, for example on failed attempts to deliver messages to them.

One way to avoid such zombie connections is to periodically check whether each connection is still "alive" using a heartbeat mechanism, such as TCP keepalives or WebSocket ping/pong control frames. However, heartbeats put extra load on the system, which hurts its scalability and dependability, especially when it is handling millions of concurrent WebSocket connections.
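
Heartbeat-based cleanup can be sketched as a tracker that records when each connection was last heard from and periodically sweeps out any that have been silent longer than a timeout. The `HeartbeatTracker` class and the timeout value are illustrative assumptions; in the serverless design above, `seen` would run whenever a ping or message arrives and `sweep` from a scheduled function, with the timestamps persisted in the datastore rather than in memory. Wall-clock time is used because the readings come from separate invocations.

```python
import time
from typing import Dict, List, Optional


class HeartbeatTracker:
    """Track last-seen times and evict connections that stop sending heartbeats."""

    def __init__(self, timeout_s: float) -> None:
        self.timeout_s = timeout_s
        self.last_seen: Dict[str, float] = {}

    def seen(self, connection_id: str, now: Optional[float] = None) -> None:
        """Record a heartbeat (or any traffic) from a connection."""
        self.last_seen[connection_id] = time.time() if now is None else now

    def sweep(self, now: Optional[float] = None) -> List[str]:
        """Remove and return connections silent for longer than the timeout."""
        now = time.time() if now is None else now
        stale = sorted(cid for cid, t in self.last_seen.items() if now - t > self.timeout_s)
        for cid in stale:
            del self.last_seen[cid]
        return stale


tracker = HeartbeatTracker(timeout_s=60)
tracker.seen("a", now=0)
tracker.seen("b", now=0)
tracker.seen("a", now=50)
print(tracker.sweep(now=90))  # ['b']
```

Every call to `seen` is an extra write, which is exactly the added load that makes heartbeats costly at the scale of millions of connections.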

You cannot broadcast messages to all connected clients: In this simple design, there is no way to send a message to multiple connections at once, as you'd expect from a standard pub/sub channel or topic. To fan out an update to thousands of clients, you would need to fetch their connection IDs from the database and then make a separate API call for each one, which isn't a scalable approach. You could add Amazon SNS to provide pub/sub for your app, but it still has limitations and adds complexity.

In a chat application, you can assume that most channels have a small number of subscribers, unless they're used for interactive live stream sessions with thousands of users. Other scenarios that can tolerate the lack of broadcast include individual update notifications and interactive features that work on an item-by-item basis. However, live data fan-out use cases, such as delivering the latest sports score or news update to a channel with many subscribers, cannot work within this limitation.
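
A back-of-the-envelope estimate shows why per-connection fan-out becomes a bottleneck. The function below assumes one API call per connection, a fixed latency per call, and a fixed number of calls in flight at once; the figures in the example are illustrative assumptions, not measured AWS numbers.

```python
import math
from typing import Tuple


def fanout_cost(subscribers: int, call_latency_ms: float, concurrency: int) -> Tuple[int, float]:
    """Return (API calls, seconds) to deliver one message to every subscriber.

    Assumes one API call per connection, a fixed latency per call, and at most
    `concurrency` calls in flight at any moment.
    """
    calls = subscribers
    rounds = math.ceil(subscribers / concurrency)
    return calls, rounds * call_latency_ms / 1000


# Illustrative figures: 10,000 subscribers, 20 ms per call, 50 concurrent calls.
print(fanout_cost(10_000, 20, 50))  # (10000, 4.0)
```

Under these assumptions, a single message costs 10,000 API calls and about four seconds of sending, so a channel receiving more than one message every four seconds would build an ever-growing backlog.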

There is a limit on the number of WebSocket connections allowed per second: AWS API Gateway limits each account to 500 new connections per second per region, and to 10,000 simultaneous connections. This may be insufficient if you plan to scale your application.
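
The connection-rate limit bites hardest when many clients reconnect at once, for example after a network blip. This sketch estimates how long such a reconnection storm takes to drain at the 500 new connections per second quoted above, and shows exponential backoff with "full jitter", a common client-side technique for spreading retries out; the `base_s` and `cap_s` values are assumptions.

```python
import math
import random
from typing import Callable

NEW_CONNECTIONS_PER_SECOND = 500  # per-account, per-region figure quoted in the text


def seconds_to_admit(clients: int, rate: int = NEW_CONNECTIONS_PER_SECOND) -> int:
    """Minimum whole seconds needed to admit `clients` new connections at `rate` per second."""
    return math.ceil(clients / rate)


def backoff_delay(attempt: int, base_s: float = 0.5, cap_s: float = 30.0,
                  rng: Callable[[], float] = random.random) -> float:
    """Full-jitter backoff: a random delay between 0 and min(cap, base * 2**attempt)."""
    return rng() * min(cap_s, base_s * 2 ** attempt)


print(seconds_to_admit(10_000))  # 20
```

Randomizing each client's retry delay keeps thousands of clients from retrying in lockstep and repeatedly slamming into the same per-second limit.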

The solution is bound to a single AWS region: The WebSocket connections offered by AWS API Gateway are bound to a single region, so clients geographically distant from that region experience higher latency and poorer performance. To serve several regions, you could deploy the stack in each one and use Amazon EventBridge, a serverless event bus, for cross-region event routing to replicate events between them.

Challenges of Building a Production-Ready Serverless WebSockets Platform

The architecture above is workable for a simple real-time messaging platform, but as we've seen, it has limitations at scale. A production-ready system needs scale, and you must also consider how dependable your solution is in terms of performance, message delivery integrity, fault tolerance, and fallback support, all of which can be complex and time-consuming to solve.

Performance

In a distributed system, latency increases with the distance data has to travel, so data should be kept as close to users as possible via managed data centers and edge acceleration points. Minimizing latency isn't sufficient, however: latency variance must also be kept to a minimum so that the user experience is predictable.
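
To see why variance matters as much as the average, compare two sets of latency samples with the same mean. This sketch uses Python's `statistics` module to report the mean, population standard deviation, and 99th percentile; the sample values are made up for illustration.

```python
import statistics
from typing import Dict, List


def latency_profile(samples_ms: List[float]) -> Dict[str, float]:
    """Summarize latency samples: average, spread, and tail (p99)."""
    cuts = statistics.quantiles(samples_ms, n=100)  # 99 cut points: p1..p99
    return {
        "mean": statistics.fmean(samples_ms),
        "stdev": statistics.pstdev(samples_ms),
        "p99": cuts[98],
    }


steady = [50.0] * 100         # every message takes 50 ms
jittery = [10.0, 90.0] * 50   # same 50 ms mean, but unpredictable

print(latency_profile(steady))   # {'mean': 50.0, 'stdev': 0.0, 'p99': 50.0}
print(latency_profile(jittery))  # {'mean': 50.0, 'stdev': 40.0, 'p99': 90.0}
```

Both profiles have the same average, yet users of the second one regularly wait almost twice as long, which is why tail percentiles and spread are worth tracking alongside the mean.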

Message Integrity

When a user's connection drops and reconnects, the app needs to resume with minimal friction from the point at which it disconnected. Any missed messages must be delivered without duplicating those already processed, so that the whole experience is seamless.
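
One common way to meet these requirements is to tag each message on a channel with an increasing serial number. The client remembers the last serial it processed, replays history after that point when it reconnects, and drops anything it has already seen. This sketch assumes serials are contiguous per channel and that the server can return recent history; the `ResumableSubscriber` class and the `(serial, payload)` history format are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Iterable, List, Tuple


class GapDetected(Exception):
    """A live message skipped ahead; missed messages must be fetched first."""


@dataclass
class ResumableSubscriber:
    last_serial: int = 0
    received: List[str] = field(default_factory=list)

    def on_message(self, serial: int, payload: str) -> bool:
        """Process one message in order; return False if it is a duplicate."""
        if serial <= self.last_serial:
            return False  # already processed: drop the duplicate
        if serial > self.last_serial + 1:
            # Accepting this would silently skip the missed messages.
            raise GapDetected(f"expected {self.last_serial + 1}, got {serial}")
        self.last_serial = serial
        self.received.append(payload)
        return True

    def resume(self, history: Iterable[Tuple[int, str]]) -> int:
        """Replay history fetched after a reconnect; return how many messages were new."""
        return sum(self.on_message(serial, payload) for serial, payload in history)


sub = ResumableSubscriber()
sub.on_message(1, "hello")
sub.on_message(2, "how are you?")
# The connection drops; messages 3 and 4 are published in the meantime.
# On reconnect, the server returns history from serial 2 onward (overlapping by one).
print(sub.resume([(2, "how are you?"), (3, "still there?"), (4, "ok")]))  # 2
```

Raising on a gap, rather than accepting the newer message, forces the client to fetch history first, so a live message arriving before the replay cannot hide the ones that were missed.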

Fault Tolerance and Scalability

The demand for a service can be unpredictable, and it is challenging to plan and provision sufficient capacity. A real-time solution must be highly available at all times to support surges in data. The challenge is to scale horizontally and swiftly absorb millions of connections without the need for pre-provisioning.

Your solution must also be fault-tolerant so that it continues operating even if a component fails. There need to be multiple redundant components capable of keeping the system running if some are lost, with no single point of congestion and no single point of failure.

Fallback Transports

Despite widespread platform support, the WebSocket protocol is not supported by all proxies or browsers, and some corporate firewalls even block specific ports, which can affect WebSocket access. You may consider supporting fallback transports, such as XHR streaming, XHR polling, or long polling.
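
Client-side fallback usually means trying transports in order of preference until one connects. In this sketch, each transport is represented by a hypothetical connect function that raises `TransportError` on failure; a real client would wrap an actual WebSocket or HTTP library in these functions.

```python
from typing import Any, Callable, List, Sequence, Tuple

Transport = Tuple[str, Callable[[], Any]]


class TransportError(Exception):
    """Raised when a transport cannot establish a connection."""


def connect_with_fallback(transports: Sequence[Transport]) -> Tuple[str, Any]:
    """Try each transport in order and return (name, connection) for the first that works."""
    errors: List[str] = []
    for name, connect in transports:
        try:
            return name, connect()
        except TransportError as exc:
            errors.append(f"{name}: {exc}")
    raise TransportError("all transports failed; " + "; ".join(errors))


def websocket() -> Any:
    raise TransportError("blocked by proxy")  # simulate a restrictive corporate proxy


def xhr_streaming() -> Any:
    return "xhr-streaming-connection"  # placeholder connection object


print(connect_with_fallback([("websocket", websocket), ("xhr_streaming", xhr_streaming)]))
# ('xhr_streaming', 'xhr-streaming-connection')
```

Collecting each failure reason makes it easier to diagnose why a client ended up on a slower transport.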

Feature Creep

Another challenge of using raw serverless WebSockets in a production-ready system may only appear after you've used them successfully in the product's first iteration. Once you've added the essential ability to receive real-time updates, feature creep often brings additional requirements for shared live experiences and collaborative features.

Building and maintaining a proprietary WebSocket solution to support the future real-time needs of a product can be challenging. The infrastructure that underpins the system must be stable and dependable and requires experienced engineers to build and maintain it. A development team may find they have a long-term commitment to supporting the real-time solution rather than focusing on the features that augment the core product.

Adopting a Serverless WebSocket PaaS Built for Scale

For many organizations, adopting a serverless WebSocket solution from a third-party vendor makes more financial sense than building one. Building your own could take several months and a great deal of money, and you'd end up burdened with the high cost of infrastructure ownership, technical debt, and ongoing demands for engineering investment.

Look for a fault-tolerant, highly available, elastic global infrastructure that offers effortless scaling and low complexity. It should deliver messages at low latency over a secure, reliable, global edge network. A suitable platform takes on the burden of building a real-time solution so that you can prioritize your product's roadmap and develop features to stay competitive.

Also consider whether fallback protocols are available, since there is rarely a one-size-fits-all option: different protocols suit different purposes. Look for a vendor that offers multiple protocols, such as WebSocket, MQTT, SSE, and raw HTTP.

To support live experiences, you also need features such as device presence, stream history, channel rewind, and handling of abrupt disconnections, which make it easier to build rich real-time applications. Look for a range of webhook integrations for triggering business logic in real time, and consider whether the vendor offers a gateway to serverless functions from your preferred cloud service providers. That way, you can deploy best-in-class tooling across your entire stack and build event-driven apps using the ecosystems you're already invested in.

Using a PaaS enables you to deliver a live experience without delays in going to market, runaway costs, or unhappy users. Your engineering teams can focus on core product innovation without provisioning and maintaining real-time infrastructure.

In this article, we have reviewed the architecture of a simple serverless WebSockets platform using Amazon API Gateway and AWS Lambda. We have also considered some of the limitations of this design, such as lack of support for broadcasting, single-region deployment, and connection management.