Apache Kafka Introduction, Installation, and Implementation Using .NET Core 6

We will go over Apache Kafka basics, installation, and operation, as well as a step-by-step implementation using a .NET Core 6 web application.

Prerequisites

- Visual Studio 2022

- .NET Core 6 SDK

- SQL Server

- Java JDK 11

- Apache Kafka

Agenda

- Overview of Event Streaming

- Introduction to Apache Kafka

- Main concepts and foundation of Kafka

- Different Kafka APIs

- Use cases of Apache Kafka

- Installation of Kafka on Windows 10

- Step-by-step implementation

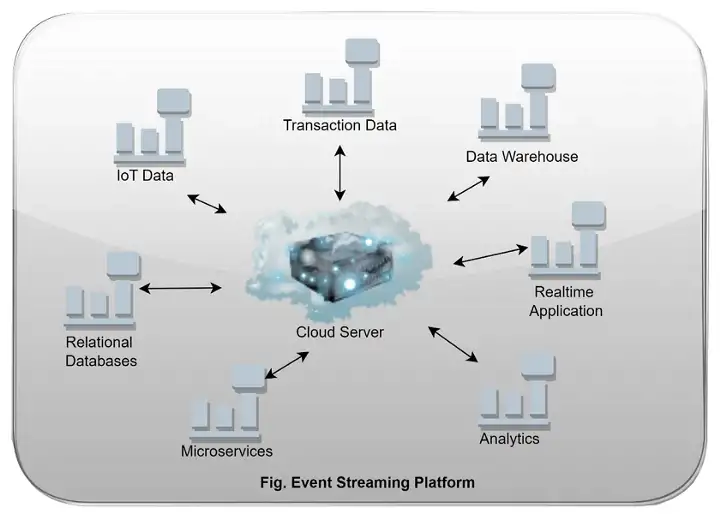

Overview of Event Streaming

Events are things that happen in an application as users interact with it. For example, signing up on a website and placing an order are both events.

An event streaming platform captures different kinds of data, such as transactional, historical, and real-time data, as streams of events. It also processes those events and lets different consumers react to the results as soon as they are available.

An event-driven platform lets us monitor our business using real-time data from many kinds of sources, such as IoT devices. Analyzing these events helps provide a better customer experience tailored to what users do and need.

Introduction to Apache Kafka

Below are a few points that describe Apache Kafka:

- Kafka is a distributed event store and stream-processing platform.

- Kafka is open source and is written in Java and Scala.

- Kafka was originally developed at LinkedIn and is now maintained by the Apache Software Foundation. Its primary purpose is to handle real-time data feeds with high throughput and low latency.

- Kafka is an event streaming platform that can publish (write) and subscribe to (read) streams of events from different systems.

- Kafka can also store events durably for as long as we need. By default, it retains events for seven days (the broker setting log.retention.hours defaults to 168), and this period can be changed as required.

- Kafka is a distributed system consisting of servers and clients that communicate over a TCP-based protocol.

- It can be deployed on virtual machines and containers, in on-premises and cloud environments, as required.

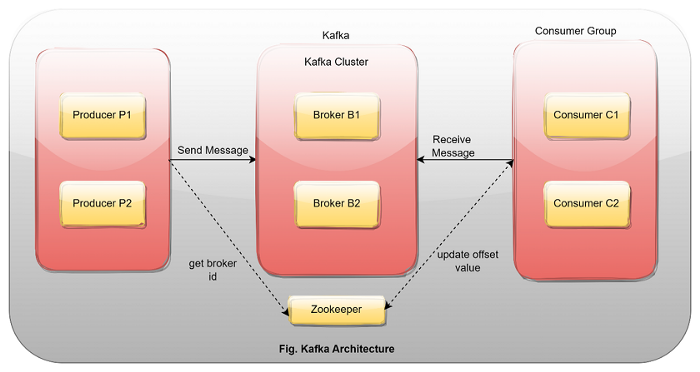

- In the Kafka world, a producer sends messages to the Kafka broker. The messages are stored inside topics, and a consumer subscribes to a topic to consume the messages sent by the producer.

- ZooKeeper manages Kafka's cluster metadata in this setup: it tracks which brokers are part of the cluster and which partitions belong to each topic. It also monitors the status of Kafka nodes and maintains the list of topics and their configuration. The messages themselves are stored on the brokers, not in ZooKeeper.

Main Concepts and Foundation of Kafka

1. Event

An event, or record, is the message that we write to and read from the Kafka server. It represents something that happened in our business domain and contains a key, a value, a timestamp, and optional metadata headers. For example:

- Key: “Jaydeep”

- Value: “Booked BMW”

- Event Timestamp: “Dec. 11, 2022, at 12:00 p.m.”
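As a rough illustration, the example event above could be represented with the Message type from the Confluent.Kafka NuGet package (the same package used later in this article). This is only a sketch: the "source" header and its value are hypothetical, and the timestamp is assumed to be in UTC. Nothing is sent to a broker here.

```csharp
using System;
using System.Text;
using Confluent.Kafka;

// Optional metadata headers; the "source" header is a made-up example.
var headers = new Headers();
headers.Add("source", Encoding.UTF8.GetBytes("booking-api"));

// The example event: key, value, timestamp, and headers.
var bookingEvent = new Message<string, string>
{
    Key = "Jaydeep",
    Value = "Booked BMW",
    // Dec. 11, 2022, 12:00 p.m. (UTC is an assumption for this sketch).
    Timestamp = new Timestamp(new DateTime(2022, 12, 11, 12, 0, 0, DateTimeKind.Utc)),
    Headers = headers
};

Console.WriteLine($"{bookingEvent.Key}: {bookingEvent.Value} at {bookingEvent.Timestamp.UtcDateTime:u}");
```

If no timestamp is set explicitly, the client or broker assigns one when the message is produced.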

2. Producer

The producer is a client application that sends messages to the Kafka node or broker.

3. Consumer

The consumer is an application that receives data from Kafka.

4. Kafka Cluster

A Kafka cluster is a group of brokers (servers) that work together and share the workload.

5. Broker

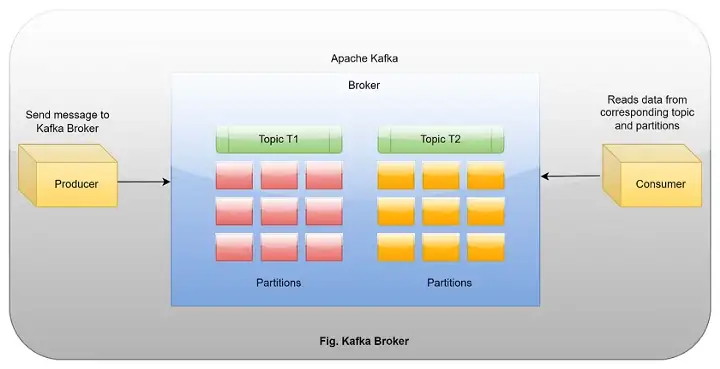

The broker is a Kafka server that sits between producers and consumers: producers write events to the broker, and consumers read events from it.

6. Topic

Events are stored inside a “topic.” A topic is similar to a folder, and the events are like the files inside it.

A topic can have zero, one, or many producers that write to it and consumers that read from it. Events in a topic can be read as often as needed, because Kafka persists them; unlike some messaging systems, it does not remove a message after it has been consumed.

7. Partitions

Topics are partitioned, meaning a topic is spread over one or more partitions that we define when creating it. When a producer sends an event to a topic, the event is appended to one particular partition, and consumers read the events of a partition in the order in which they were written. Events with the same key are normally written to the same partition.
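The idea of mapping a key to a partition can be sketched in a few lines of C#. This is a simplified illustration, not Kafka's actual partitioner: the PartitionSketch class is hypothetical, and FNV-1a is used only as a stand-in hash. Real clients use their own hashing and configurable partitioning strategies.

```csharp
using System;
using System.Text;

// The same key always maps to the same partition for a fixed partition count.
Console.WriteLine(PartitionSketch.PartitionFor("Jaydeep", 3));
Console.WriteLine(PartitionSketch.PartitionFor("Jaydeep", 3));

public static class PartitionSketch
{
    // FNV-1a: a stand-in hash chosen for brevity (NOT the hash Kafka clients use).
    public static uint Fnv1a(string key)
    {
        unchecked
        {
            uint hash = 2166136261;
            foreach (byte b in Encoding.UTF8.GetBytes(key))
            {
                hash ^= b;
                hash *= 16777619;
            }
            return hash;
        }
    }

    // Picks a partition index in the range [0, partitionCount).
    public static int PartitionFor(string key, int partitionCount)
    {
        if (partitionCount <= 0)
            throw new ArgumentOutOfRangeException(nameof(partitionCount));
        return (int)(Fnv1a(key) % (uint)partitionCount);
    }
}
```

Because the partition depends only on the key and the partition count, all events for one key keep their relative order within a single partition.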

8. Offset

Kafka assigns each message a sequential ID, called the offset, when the message arrives from the producer. The offset is unique within a topic partition and identifies the message's position in it.
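A toy in-memory model can make offsets more concrete. The PartitionLog class below is hypothetical and only mimics the behavior described above: records are appended in arrival order, each gets the next sequential offset, and reading a record does not remove it.

```csharp
using System;
using System.Collections.Generic;

var partition = new PartitionLog();
long first = partition.Append("Booked BMW");
long second = partition.Append("Booked Audi");

Console.WriteLine($"{first}, {second}");      // prints "0, 1"
Console.WriteLine(partition.Read(first));     // prints "Booked BMW"
Console.WriteLine(partition.Read(first));     // still readable: prints "Booked BMW"

// A toy stand-in for a single topic partition.
public sealed class PartitionLog
{
    private readonly List<string> _records = new();

    // Appends a record and returns its offset (its position in the log).
    public long Append(string value)
    {
        _records.Add(value);
        return _records.Count - 1;
    }

    // Reads the record at the given offset without removing it.
    public string Read(long offset) => _records[(int)offset];
}
```

A consumer keeps track of the offset it has reached in each partition, which is how it can resume where it left off or re-read earlier events.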

9. Consumer Groups

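In the Kafka world, a consumer group is a set of consumers that acts as a single logical subscriber. Each partition of a subscribed topic is read by exactly one consumer in the group, so the consumers share the work of processing the topic.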
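The sketch below shows one simple way partitions could be divided among the members of a group. It is a simplified round-robin illustration with hypothetical names (GroupSketch, consumer-a, consumer-b); Kafka's real assignment strategies are configurable and also handle consumers joining and leaving.

```csharp
using System;
using System.Collections.Generic;

var assignment = GroupSketch.Assign(4, new[] { "consumer-a", "consumer-b" });
foreach (var (consumer, partitions) in assignment)
{
    Console.WriteLine($"{consumer}: [{string.Join(", ", partitions)}]");
}

public static class GroupSketch
{
    // Gives each partition to exactly one consumer, cycling through the group members.
    public static Dictionary<string, List<int>> Assign(int partitionCount, IReadOnlyList<string> consumers)
    {
        if (consumers.Count == 0)
            throw new ArgumentException("At least one consumer is required.", nameof(consumers));

        var result = new Dictionary<string, List<int>>();
        foreach (var consumer in consumers)
        {
            result[consumer] = new List<int>();
        }

        for (int partition = 0; partition < partitionCount; partition++)
        {
            result[consumers[partition % consumers.Count]].Add(partition);
        }
        return result;
    }
}
```

With four partitions and two consumers, each consumer ends up with two partitions. If a group has more consumers than partitions, the extra consumers receive nothing and stay idle.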

10. Replica

To make data fault-tolerant and highly available, Kafka can replicate the partitions of a topic across different brokers, and even across regions. If the broker holding one copy fails, the same data is still available from another replica.

Different Kafka APIs

Kafka has five core APIs that serve different purposes:

- Admin API: This API is used to manage and inspect topics, brokers, and other Kafka objects.

- Producer API: This API is used to write (publish) events to one or more Kafka topics.

- Consumer API: This API is used to read the messages from the topics that the consumer has subscribed to.

- Kafka Streams API: This API is used to build stream-processing applications that transform input streams into output streams, with operations such as windowing, joins, and aggregations.

- Kafka Connect API: This API is used to build and run connectors that move data between Kafka and other systems. Many ready-to-use connectors are already available.

Use Cases of Apache Kafka

- Messaging

- User activity tracking

- Log aggregation

- Stream processing

- Real-time data analytics

Installation of Kafka on Windows 10

Step 1

Download the Java JDK, version 8 or later.

Note: I have Java 11 installed, so the commands in this article use its installation path.

Step 2

Run the downloaded EXE installer.

Step 3

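Set the environment variables for Java using a command prompt opened as administrator.

Commands:

setx -m JAVA_HOME "C:\Program Files\Java\jdk-11.0.16.1"

setx -m PATH "%JAVA_HOME%\bin;%PATH%"

Note: setx does not update the current command prompt, so run the second command in a new command prompt; otherwise, %JAVA_HOME% is not yet defined.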

Step 4

After that, download Apache Kafka.

Step 5

Extract the downloaded Kafka archive and rename the extracted folder to “Kafka.” This article assumes it is located at D:\Kafka.

Step 6

Open D:\Kafka\ and create the “zookeeper-data” and “kafka-logs” folders inside it. These are the paths used in the next two steps.

Step 7

Next, open the D:\Kafka\config\zookeeper.properties file and set the data directory to the folder created above:

dataDir=D:/Kafka/zookeeper-data

Step 8

After that, open the D:\Kafka\config\server.properties file and change the log path:

log.dirs=D:/Kafka/kafka-logs

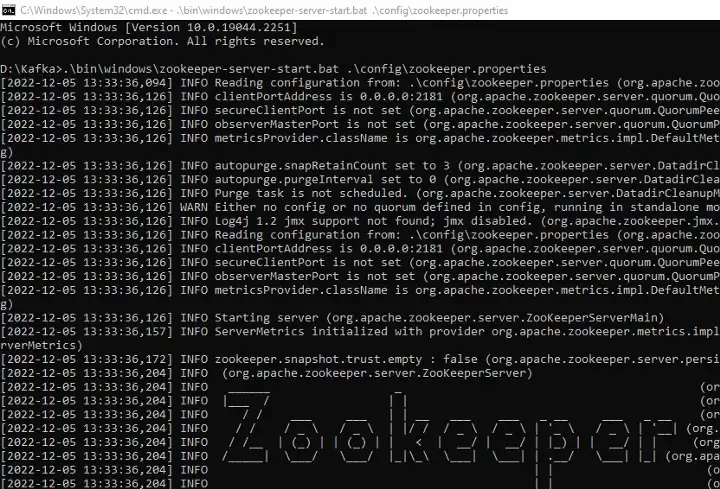

Step 9

Save and close both files.

Step 10

Run ZooKeeper:

D:\Kafka> .\bin\windows\zookeeper-server-start.bat .\config\zookeeper.properties

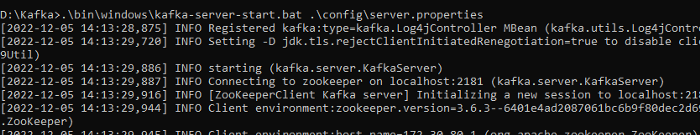

Step 11

Start Kafka:

D:\Kafka> .\bin\windows\kafka-server-start.bat .\config\server.properties

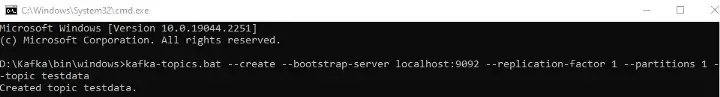

Step 12

Create Kafka topic:

D:\Kafka\bin\windows>kafka-topics.bat --create --bootstrap-server localhost:9092 --replication-factor 1 --partitions 1 --topic testdata

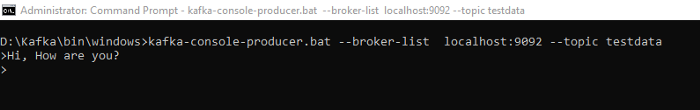
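The same topic could also be created from .NET through the Admin API. The sketch below assumes the Confluent.Kafka NuGet package and a broker listening on localhost:9092, and mirrors the command-line options above (one partition, replication factor 1). It is an alternative to the command, not a required step.

```csharp
using System;
using Confluent.Kafka;
using Confluent.Kafka.Admin;

// Assumes the local broker started in the previous step.
var adminConfig = new AdminClientConfig { BootstrapServers = "localhost:9092" };

using var adminClient = new AdminClientBuilder(adminConfig).Build();
try
{
    // Same settings as the kafka-topics.bat command: 1 partition, replication factor 1.
    await adminClient.CreateTopicsAsync(new[]
    {
        new TopicSpecification { Name = "testdata", NumPartitions = 1, ReplicationFactor = 1 }
    });
    Console.WriteLine("Topic created.");
}
catch (CreateTopicsException ex)
{
    // Raised, for example, when the topic already exists.
    Console.WriteLine($"Could not create topic: {ex.Results[0].Error.Reason}");
}
```

Creating topics in code can be convenient for automated setups, while the command-line tool is usually enough for local experiments like this one.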

Step 13

Start a console producer. Once the consumer from the next step is also running, type some messages here:

D:\Kafka\bin\windows>kafka-console-producer.bat --broker-list localhost:9092 --topic testdata

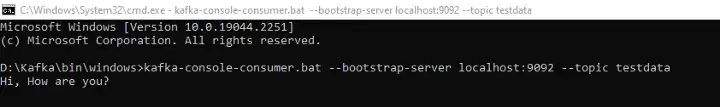

Step 14

Next, start a console consumer in another command prompt. The messages sent by the producer will appear here:

D:\Kafka\bin\windows>kafka-console-consumer.bat --bootstrap-server localhost:9092 --topic testdata

Step-by-Step Implementation

Let’s start with practical implementation.

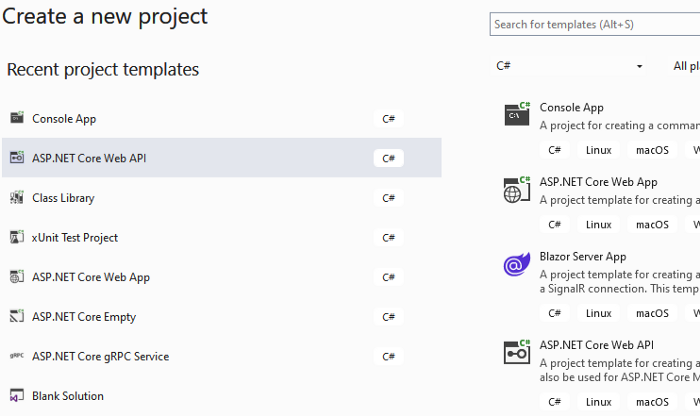

Step 1

Create a new .NET Core Producer Web API:

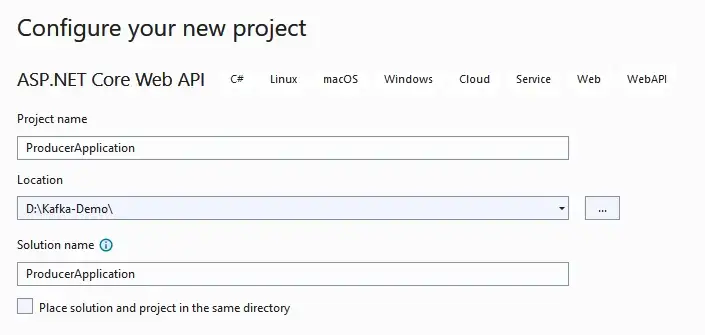

Step 2

Configure your application:

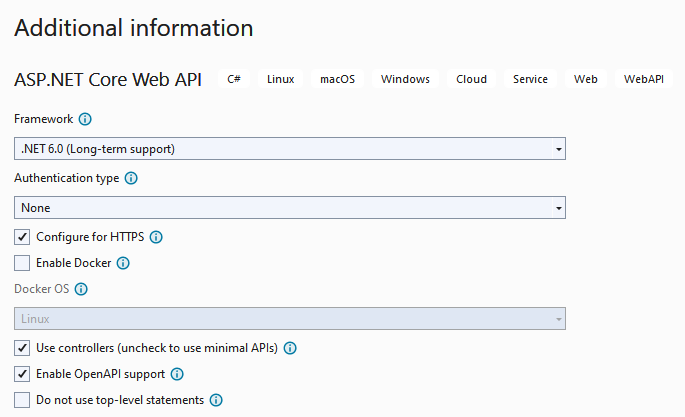

Step 3

Provide additional details:

Step 4

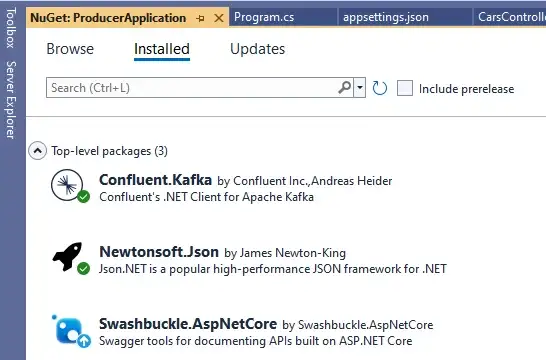

Install the following two NuGet packages:

Step 5

Add configuration details inside the appsettings.json file:

    {
      "Logging": {
        "LogLevel": {
          "Default": "Information",
          "Microsoft.AspNetCore": "Warning"
        }
      },
      "AllowedHosts": "*",
      "producerconfiguration": {
        "bootstrapservers": "localhost:9092"
      },
      "TopicName": "testdata"
    }

Step 6

Register a few services inside the “Program” class:

    using Confluent.Kafka;

    var builder = WebApplication.CreateBuilder(args);

    // Add services to the container.
    var producerConfiguration = new ProducerConfig();
    builder.Configuration.Bind("producerconfiguration", producerConfiguration);
    builder.Services.AddSingleton<ProducerConfig>(producerConfiguration);

    builder.Services.AddControllers();

    // Learn more about configuring Swagger/OpenAPI at https://aka.ms/aspnetcore/swashbuckle
    builder.Services.AddEndpointsApiExplorer();
    builder.Services.AddSwaggerGen();

    var app = builder.Build();

    // Configure the HTTP request pipeline.
    if (app.Environment.IsDevelopment())
    {
        app.UseSwagger();
        app.UseSwaggerUI();
    }

    app.UseHttpsRedirection();
    app.UseAuthorization();
    app.MapControllers();

    app.Run();

Step 7

Next, create the CarDetails model class:

    namespace ProducerApplication.Models
    {
        // Booking details that the producer sends to Kafka as JSON.
        public class CarDetails
        {
            public int CarId { get; set; }
            public string CarName { get; set; } = string.Empty;
            public string BookingStatus { get; set; } = string.Empty;
        }
    }

Step 8

Now, create the CarsController class:

    using Confluent.Kafka;
    using Microsoft.AspNetCore.Mvc;
    using Microsoft.Extensions.Configuration;
    using Newtonsoft.Json;
    using ProducerApplication.Models;

    namespace ProducerApplication.Controllers
    {
        [Route("api/[controller]")]
        [ApiController]
        public class CarsController : ControllerBase
        {
            private readonly ProducerConfig _configuration;
            private readonly IConfiguration _config;

            public CarsController(ProducerConfig configuration, IConfiguration config)
            {
                _configuration = configuration;
                _config = config;
            }

            // POST api/cars/sendBookingDetails
            [HttpPost("sendBookingDetails")]
            public async Task<ActionResult> SendBookingDetails([FromBody] CarDetails carDetails)
            {
                // Serialize the booking to JSON; it becomes the message value.
                string serializedData = JsonConvert.SerializeObject(carDetails);
                var topic = _config.GetSection("TopicName").Value;

                // A producer per request keeps the demo simple; long-running services usually reuse one instance.
                using (var producer = new ProducerBuilder<Null, string>(_configuration).Build())
                {
                    await producer.ProduceAsync(topic, new Message<Null, string> { Value = serializedData });
                    producer.Flush(TimeSpan.FromSeconds(10));
                    return Ok(true);
                }
            }
        }
    }

Step 9

Finally, run the application and send a message:

Step 10

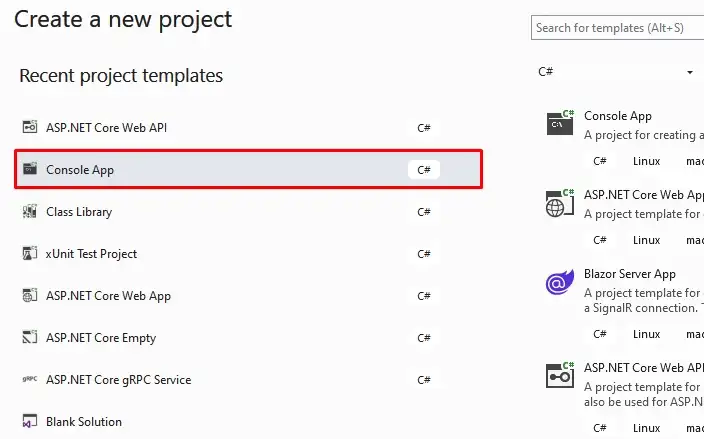

Now, create a “consumer” application:

For that, create a new .NET Core console application:

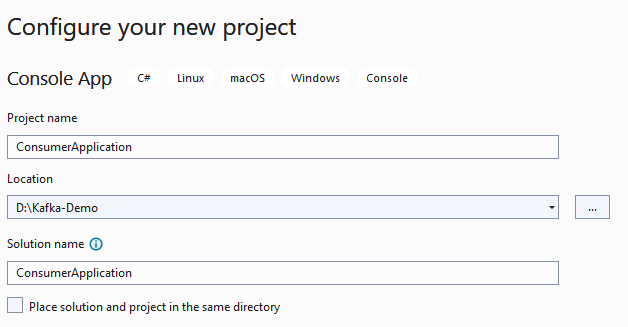

Step 11

Configure your application:

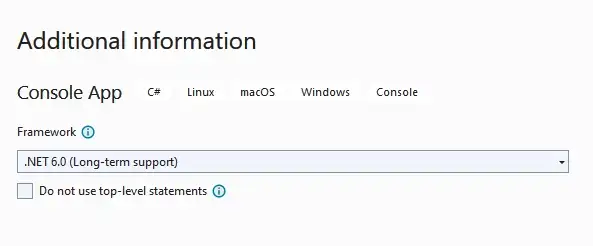

Step 12

Provide additional information:

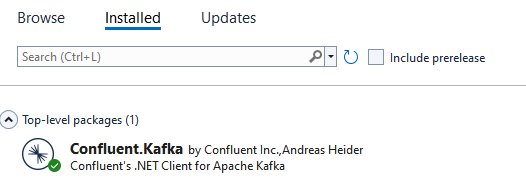

Step 13

Install the NuGet package below (the code in the next step uses Confluent.Kafka):

Step 14

Add the following code, which consumes messages sent by the consumer:

using Confluent.Kafka;

var config = new ConsumerConfig

{

GroupId = "gid-consumers",

BootstrapServers = "localhost:9092"

};

using (var consumer = new ConsumerBuilder<Null, string>(config).Build())

{

consumer.Subscribe("testdata");

while (true)

{

var bookingDetails = consumer.Consume();

Console.WriteLine(bookingDetails.Message.Value);

}

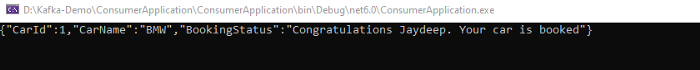

}Step 15

Finally, run the producer and consumer, send a message using the producer app, and you will see the message immediately inside the consumer console sent by the producer:

Here is the GitHub URL I used in this article.

Conclusion

In this article, we covered an introduction to Apache Kafka, how it works, its main concepts and use cases, and a step-by-step implementation using .NET Core 6.

Happy Coding!