How To Integrate ChatGPT (OpenAI) With Kubernetes

Kubernetes is a highly popular container orchestration platform designed to manage distributed applications at scale. With advanced capabilities for deploying, scaling, and managing containers, it allows software engineers to build highly flexible and resilient infrastructure.

It is also open-source software that provides a declarative approach to application deployment and enables seamless scaling and load balancing across multiple nodes. With built-in fault tolerance and self-healing capabilities, Kubernetes helps ensure high availability and resiliency for your applications.
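To make the declarative idea concrete, here is a minimal sketch of a Deployment written as a Python dictionary. Instead of issuing step-by-step commands, you state the desired result (three replicas of one container) and Kubernetes continuously works to make the cluster match it. The name `web` and the image `nginx:1.25` are hypothetical; a structure like this could be saved as JSON and applied with `kubectl apply -f`, or passed as the `body` argument of the Python client's `AppsV1Api.create_namespaced_deployment`.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Build a minimal apps/v1 Deployment that describes the desired state."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # Desired number of pods; Kubernetes reconciles the cluster toward it
            "replicas": replicas,
            # The selector must match the pod template's labels
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical application name and image, for illustration only
    print(json.dumps(deployment_manifest("web", "nginx:1.25", 3), indent=2))
```

Scaling declaratively then means changing `replicas` and re-applying the manifest, rather than starting or stopping containers by hand.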

One of the key advantages of Kubernetes is its ability to automate many operational tasks. By abstracting the underlying complexities of the infrastructure, it allows developers to focus on application logic and on optimizing the performance of their solutions.

What Is ChatGPT?

You've probably heard a lot about ChatGPT, a renowned language model that has revolutionized the field of natural language processing (NLP). Built by OpenAI, ChatGPT is powered by advanced artificial intelligence algorithms and trained on massive amounts of text data.

ChatGPT's versatility goes beyond virtual assistants and chatbots, as it can be applied to a wide range of natural language processing applications. Its ability to understand and generate human-like text makes it a valuable tool for automating tasks that involve understanding and processing written language.

The technology behind ChatGPT is based on deep learning, specifically transformer models. Its training process involves exposing the model to large amounts of text data from a variety of sources.

This extensive training helps it learn the intricacies of language, including grammar, semantics, and common patterns. Furthermore, the model can be fine-tuned on specific data, so it can be tailored to perform well in particular domains or specialized tasks.
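As an example of tailoring the model to a domain, OpenAI's fine-tuning for chat models expects training data as JSON Lines, where each line holds a `messages` list of system, user, and assistant turns. The sketch below builds such a file from a couple of hypothetical Kubernetes troubleshooting question/answer pairs; the examples and the system prompt are illustrative assumptions, and uploading the file and starting a fine-tuning job are not shown.

```python
import json

# Hypothetical Kubernetes-specific training examples: (question, expected answer)
EXAMPLES = [
    ("A pod is in CrashLoopBackOff. What should I check first?",
     "Inspect the previous container logs with `kubectl logs <pod> --previous` "
     "and review recent events with `kubectl describe pod <pod>`."),
    ("A node reports NotReady. What are common causes?",
     "Check the kubelet on the node, memory and disk pressure conditions, "
     "and network connectivity to the control plane."),
]

# Hypothetical system prompt shared by every training example
SYSTEM_PROMPT = "You are an assistant that troubleshoots Kubernetes clusters."


def to_finetune_jsonl(examples) -> str:
    """Serialize (question, answer) pairs into chat-format JSONL, one example per line."""
    lines = []
    for question, answer in examples:
        record = {
            "messages": [
                {"role": "system", "content": SYSTEM_PROMPT},
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        }
        lines.append(json.dumps(record))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(to_finetune_jsonl(EXAMPLES), end="")
```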

Integrating ChatGPT (OpenAI) With Kubernetes: Overview

Integrating Kubernetes with ChatGPT makes it possible to automate tasks related to operating and managing applications deployed in Kubernetes clusters. ChatGPT lets you interact with Kubernetes using text or voice commands, which in turn enables you to execute complex operations more efficiently.

In essence, this integration can streamline tasks such as:

- Deploying applications

- Scaling resources

- Monitoring cluster health

The integration empowers you to take advantage of ChatGPT's contextual language generation capabilities to communicate with Kubernetes in a natural and intuitive manner.
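One way to turn a natural-language request into a Kubernetes operation is to instruct ChatGPT (for example, in the system prompt) to reply with a small JSON object, then validate that object before acting on it. The sketch below assumes a hypothetical reply schema with `action`, `deployment`, `namespace`, and `replicas` fields and a hypothetical allowlist containing only `scale`. Validated commands are applied through `AppsV1Api.patch_namespaced_deployment_scale`, a real method of the official Kubernetes Python client; a small fake stands in for it so the sketch runs without a cluster, and the deployment name `web` is illustrative.

```python
import json

# Hypothetical allowlist: the only operations the agent may perform
ALLOWED_ACTIONS = ("scale",)


def parse_command(reply: str) -> dict:
    """Parse the model's JSON reply and reject anything outside the allowlist."""
    try:
        command = json.loads(reply)
    except json.JSONDecodeError as exc:
        raise ValueError(f"Reply is not valid JSON: {exc}") from exc
    if not isinstance(command, dict) or command.get("action") not in ALLOWED_ACTIONS:
        raise ValueError("Reply is not an allowed command")
    if not command.get("deployment"):
        raise ValueError("Missing deployment name")
    command.setdefault("namespace", "default")
    replicas = command.get("replicas")
    # bool is a subclass of int, so exclude it explicitly
    if not isinstance(replicas, int) or isinstance(replicas, bool) or replicas < 0:
        raise ValueError("'replicas' must be a non-negative integer")
    return command


def execute_command(apps_api, command: dict):
    """Apply a validated command; apps_api would normally be kubernetes.client.AppsV1Api()."""
    if command["action"] == "scale":
        return apps_api.patch_namespaced_deployment_scale(
            name=command["deployment"],
            namespace=command["namespace"],
            body={"spec": {"replicas": command["replicas"]}},
        )
    raise ValueError(f"No handler for action {command['action']!r}")


class FakeAppsApi:
    """Stand-in for AppsV1Api that records calls instead of contacting a cluster."""

    def __init__(self):
        self.calls = []

    def patch_namespaced_deployment_scale(self, name, namespace, body):
        self.calls.append((name, namespace, body))
        return body


if __name__ == "__main__":
    # A reply the model might return for "scale web to 5 replicas"
    reply = '{"action": "scale", "deployment": "web", "replicas": 5}'
    api = FakeAppsApi()
    execute_command(api, parse_command(reply))
    print(api.calls)
```

Treating the model's output as untrusted input means a malformed or unexpected reply is rejected instead of being executed against the cluster.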

Whether you are a developer, system administrator, or DevOps professional, this integration can revolutionize your operations and streamline your workflow, leaving more room to focus on higher-level strategic initiatives and to improve overall productivity.

Benefits of Integrating ChatGPT (OpenAI) With Kubernetes

- Automation: This integration simplifies and automates operational processes, reducing the need for manual intervention.

- Efficiency: Operations can be performed quickly and with greater accuracy, optimizing time and resources.

- Scalability: Kubernetes provides automatic scaling capabilities, so applications managed through ChatGPT can expand without additional effort.

- Monitoring: ChatGPT can provide real-time information about the state of Kubernetes clusters and applications, facilitating issue detection and resolution.
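To give ChatGPT up-to-date context about the cluster, the agent can condense API objects into a short text summary before including it in a prompt. The sketch below is a hypothetical helper that counts pods by phase and lists those that are neither `Running` nor `Succeeded`. It expects objects shaped like the items returned by the Python client's `CoreV1Api.list_pod_for_all_namespaces()`, and uses `SimpleNamespace` stand-ins so it runs without a cluster.

```python
from collections import Counter
from types import SimpleNamespace


def summarize_pods(pods) -> str:
    """Build a short, prompt-friendly summary of pod phases."""
    phases = Counter(pod.status.phase for pod in pods)
    lines = [f"{phase}: {count}" for phase, count in sorted(phases.items())]
    # Pods in any other phase (Pending, Failed, Unknown) are listed by name
    unhealthy = [
        f"{pod.metadata.namespace}/{pod.metadata.name} ({pod.status.phase})"
        for pod in pods
        if pod.status.phase not in ("Running", "Succeeded")
    ]
    if unhealthy:
        lines.append("Pods needing attention: " + ", ".join(unhealthy))
    return "\n".join(lines)


def fake_pod(namespace, name, phase):
    """Minimal stand-in for a V1Pod with only the fields used above."""
    return SimpleNamespace(
        metadata=SimpleNamespace(namespace=namespace, name=name),
        status=SimpleNamespace(phase=phase),
    )


if __name__ == "__main__":
    pods = [
        fake_pod("default", "web-1", "Running"),
        fake_pod("default", "web-2", "Pending"),
        fake_pod("jobs", "backup-1", "Succeeded"),
    ]
    print(summarize_pods(pods))
```

With a real cluster, you would pass `v1.list_pod_for_all_namespaces().items` instead of the fake pods.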

How To Integrate ChatGPT (OpenAI) With Kubernetes: A Step-By-Step Guide

At this point, we assume you already have a suitable environment for the integration, including a working Kubernetes installation and an OpenAI account for making ChatGPT API calls.

Next, we'll show you how to configure the credentials the ChatGPT agent needs and how to use the official Kubernetes Python client (the `kubernetes` package) in the automation script to interact with Kubernetes.

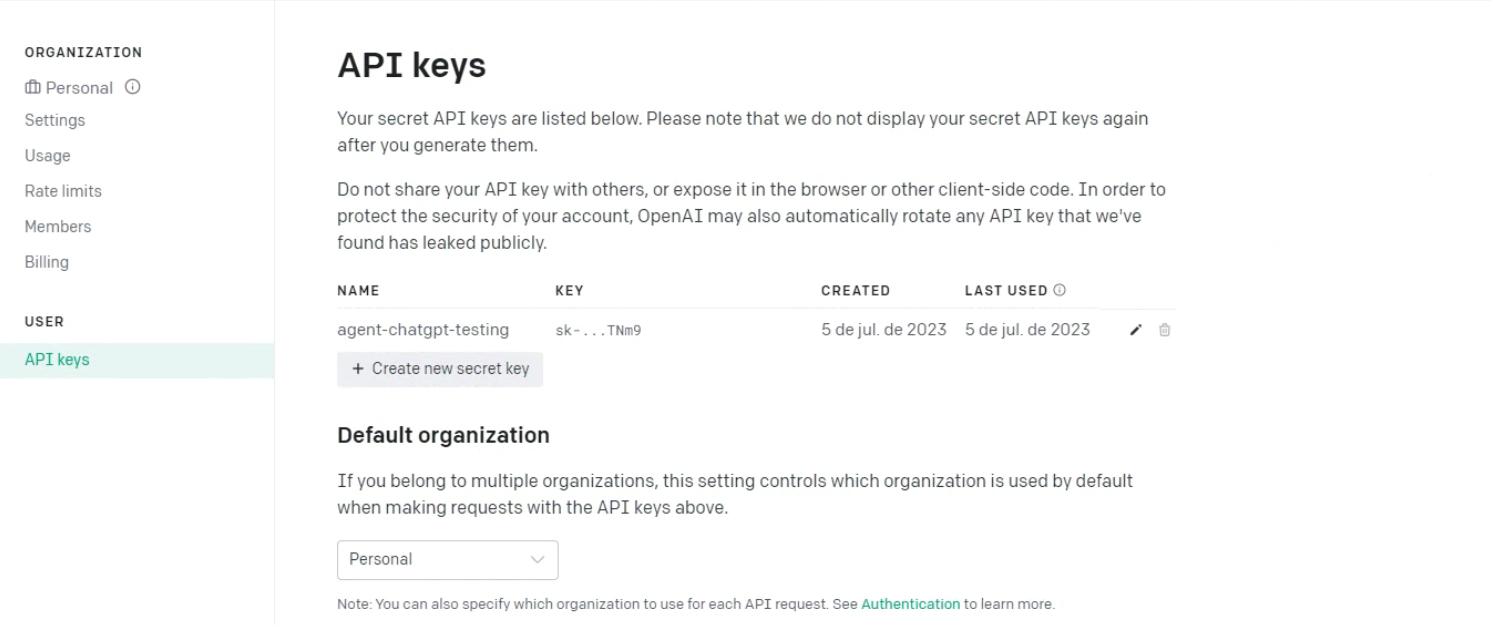

First, create your API key (token) on the OpenAI platform:

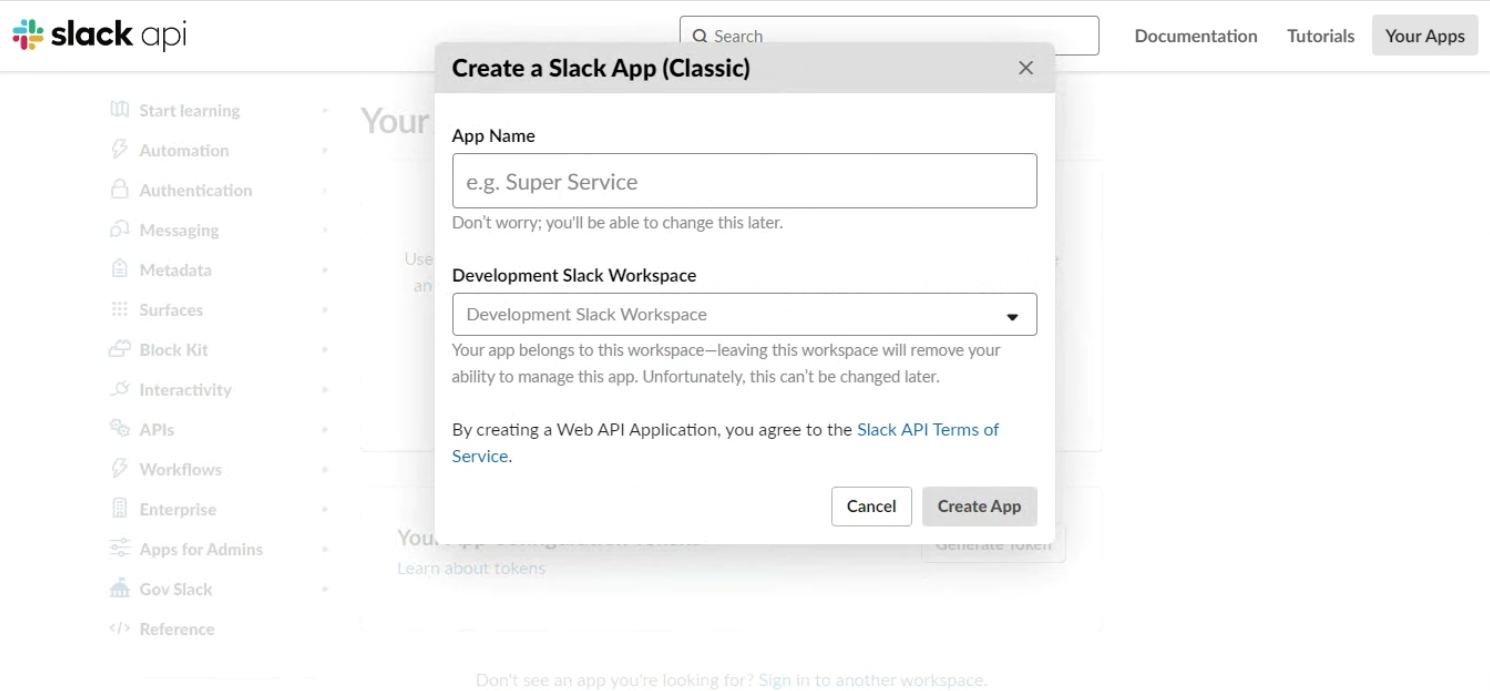

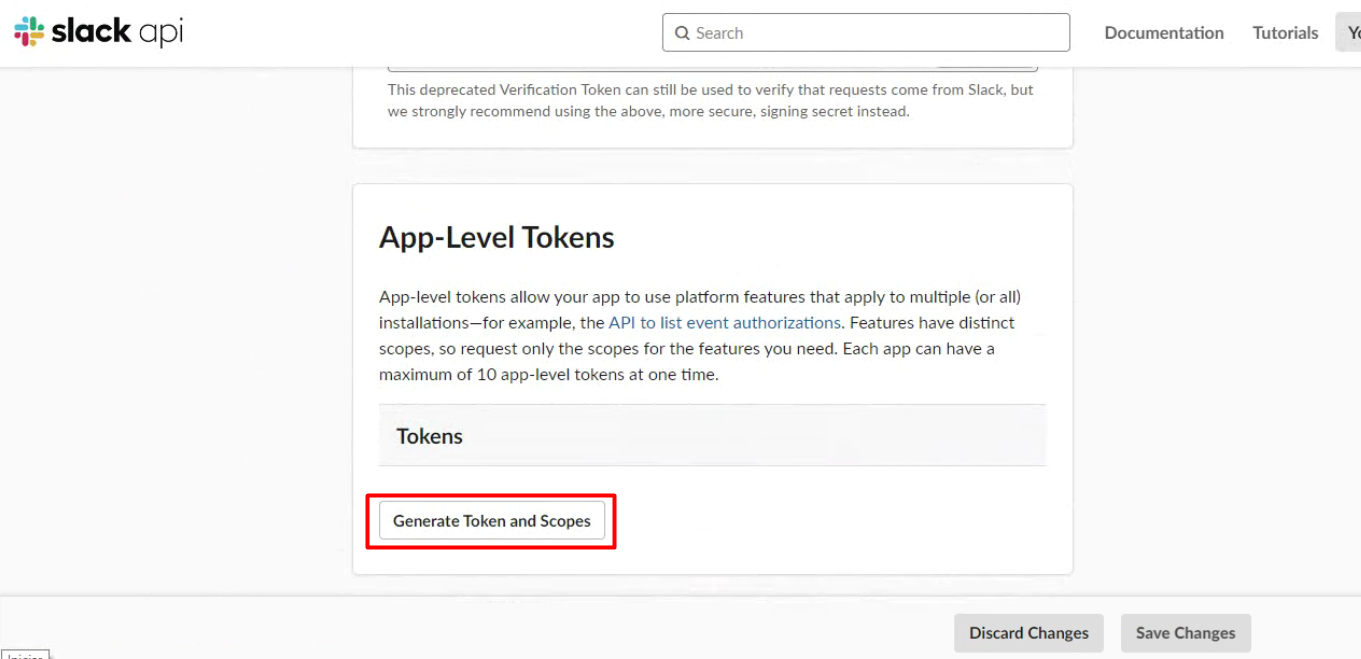

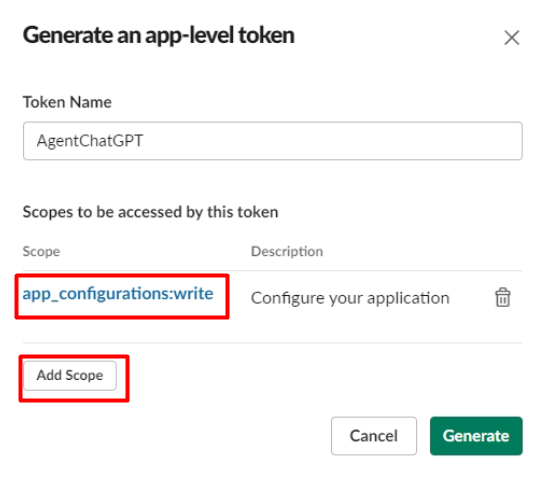

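Now let's configure the ChatGPT agent script (`agent-chatgpt.py`). Remember to replace these placeholders with your own values:

- `Bearer <your token>`: your OpenAI API key

- `client = WebClient(token="<your token>")`: your Slack bot token

- `channel_id = "<your channel id>"`: the ID of the Slack channel that receives the notifications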

```python
import time

import requests
from kubernetes import client as k8s_client, config
from slack_sdk import WebClient


# Function to interact with the GPT model through the Chat Completions API
def interact_chatgpt(message):
    endpoint = "https://api.openai.com/v1/chat/completions"
    response = requests.post(
        endpoint,
        headers={
            "Authorization": "Bearer <your token>",  # OpenAI API key
            "Content-Type": "application/json",
        },
        json={
            "model": "gpt-3.5-turbo",
            # The API expects a list named "messages"; the problem goes in a user message
            "messages": [{"role": "user", "content": message}],
        },
        timeout=60,
    )
    response.raise_for_status()  # Fail loudly on HTTP errors (bad key, rate limit, ...)
    response_data = response.json()
    return response_data["choices"][0]["message"]["content"]


# Function to send a notification to Slack
def send_notification_slack(message):
    client = WebClient(token="<your token>")  # Slack bot token
    channel_id = "<your channel id>"
    return client.chat_postMessage(channel=channel_id, text=message)


# Kubernetes configuration: reads the kubeconfig ($KUBECONFIG or ~/.kube/config)
config.load_kube_config()
v1 = k8s_client.CoreV1Api()


# Collect Kubernetes cluster node status, pod logs, and events
def get_information_cluster():
    # Node objects (with their status conditions) serve as basic health metrics
    metrics = v1.list_node()
    # Logs of one pod; replace POD_NAME and NAMESPACE with real values
    logs = v1.read_namespaced_pod_log("POD_NAME", "NAMESPACE")
    # Events from all namespaces
    events = v1.list_event_for_all_namespaces()
    return metrics, logs, events


# Identify problems based on the collected information
def identify_problems(metrics, logs, events):
    problems = []
    # A node is healthy only if its "Ready" condition has status "True"
    for node in metrics.items:
        conditions = node.status.conditions or []
        if not any(c.type == "Ready" and c.status == "True" for c in conditions):
            problems.append(f"The node {node.metadata.name} is not ready.")
    # Look for error messages in the pod logs
    if "ERROR" in logs:
        problems.append("Errors were found in pod logs.")
    # Report Warning events
    for event in events.items:
        if event.type == "Warning":
            problems.append(f"A warning event has been logged: {event.message}")
    return problems


# Kubernetes cluster monitoring loop
def monitoring_cluster_kubernetes():
    while True:
        metrics, logs, events = get_information_cluster()
        problems = identify_problems(metrics, logs, events)
        for problem in problems:
            print(f"Identified problem: {problem}")
            # Ask ChatGPT for troubleshooting recommendations
            response_chatgpt = interact_chatgpt(problem)
            # Send a Slack notification with the issue description and recommendation
            message_slack = f"Identified problem: {problem}\nRecommendation: {response_chatgpt}"
            send_notification_slack(message_slack)
        # Wait 1 minute before performing the next check
        time.sleep(60)


# Run the ChatGPT agent and monitor the Kubernetes cluster
if __name__ == "__main__":
    monitoring_cluster_kubernetes()
```
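As written, the monitoring loop re-reads all warning events every minute, so the same problem can be sent to ChatGPT and Slack over and over. A possible refinement, not part of the original script, is to report only problems that were absent from the previous check. The `ProblemTracker` class below is a hypothetical helper: in `monitoring_cluster_kubernetes()` you would create one tracker before the `while` loop and iterate over `tracker.new_problems(problems)` instead of `problems`.

```python
class ProblemTracker:
    """Remember which problems are currently active so each is reported once.

    A problem that disappears is forgotten, so it is reported again if it recurs.
    """

    def __init__(self):
        self._active = set()

    def new_problems(self, problems):
        """Return problems not present in the previous check, in their original order."""
        # dict.fromkeys removes duplicates while preserving order
        fresh = [p for p in dict.fromkeys(problems) if p not in self._active]
        self._active = set(problems)
        return fresh


if __name__ == "__main__":
    tracker = ProblemTracker()
    print(tracker.new_problems(["node-a not ready", "pod errors"]))
    print(tracker.new_problems(["node-a not ready", "pod errors"]))
```

This also reduces OpenAI API usage, since each distinct problem triggers only one ChatGPT request while it persists.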

Now use the following Dockerfile to build a container image for the ChatGPT agent. Remember that the agent needs your kubeconfig, so mount it into the container as a volume:

# Define the base image

FROM python:3.9-slim

# Copy the Python scripts to the working directory of the image

COPY agent-chatgpt.py /app/agent-chatgpt.py

# Define the working directory of the image

WORKDIR /app

# Install required dependencies

RUN pip install requests slack_sdk kubernetes

# Run the Python script when the image starts

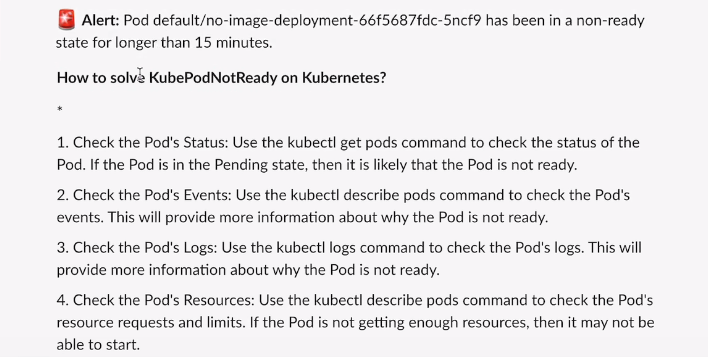

CMD ["python", "agent-chatgpt.py"]Congratulations, if everything is properly configured. Running the script at some point in the monitoring you may get messages similar to this:

Best Practices for Using Kubernetes With ChatGPT (OpenAI)

Security

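Implement appropriate security measures to protect the agent's access to Kubernetes. For example, give it a dedicated service account with only the RBAC permissions it needs (here, read access to nodes, pod logs, and events), and keep the OpenAI and Slack tokens out of the source code, for instance in Kubernetes Secrets.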
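One such measure is to have the agent read its tokens from environment variables, which Kubernetes can populate from a Secret through the Pod spec's `env` or `envFrom` fields, instead of hard-coding them. The sketch below is a hypothetical helper, and the variable names `OPENAI_API_KEY`, `SLACK_BOT_TOKEN`, and `SLACK_CHANNEL_ID` are assumptions. It fails fast at startup when a value is missing, rather than erroring on the first API call.

```python
import os
from typing import Mapping

# Hypothetical variable names; in Kubernetes they can be populated from a Secret
REQUIRED_SETTINGS = ("OPENAI_API_KEY", "SLACK_BOT_TOKEN", "SLACK_CHANNEL_ID")


def load_settings(env: Mapping[str, str] = os.environ) -> dict:
    """Read credentials from the environment and fail fast if any are missing or empty."""
    missing = [name for name in REQUIRED_SETTINGS if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {name: env[name] for name in REQUIRED_SETTINGS}
```

In the agent script, you would call `settings = load_settings()` once at startup and build the header as `f"Bearer {settings['OPENAI_API_KEY']}"`, with the Slack token and channel ID read the same way.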

Logging and Monitoring

Implement robust logging and monitoring practices within your Kubernetes cluster. Use tools like Prometheus, Grafana, or Elasticsearch to collect and analyze logs and metrics from both the Kubernetes cluster and the ChatGPT agent.

This will provide valuable insights into the performance, health, and usage patterns of your integrated system.
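As a starting point on the logging side, the agent can write structured logs, one JSON object per line, to standard output. Kubernetes captures container stdout (it is what `kubectl logs` shows), and log shippers that feed Elasticsearch typically parse JSON lines without extra configuration. The sketch below uses only Python's standard `logging` module; the logger name `chatgpt-agent` and the chosen fields are assumptions.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Format each log record as a single JSON line for log collectors."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        if record.exc_info:
            payload["exception"] = self.formatException(record.exc_info)
        return json.dumps(payload)


def setup_logging(level: int = logging.INFO) -> logging.Logger:
    """Send the agent's logs to stdout, where Kubernetes captures container output."""
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger("chatgpt-agent")
    logger.setLevel(level)
    logger.handlers.clear()  # avoid duplicate handlers if called more than once
    logger.addHandler(handler)
    logger.propagate = False
    return logger


if __name__ == "__main__":
    log = setup_logging()
    log.warning("Node %s is not ready", "worker-1")
```

The `print` calls in the agent script could then be replaced with calls such as `log.warning(...)`, so problems and ChatGPT errors show up in the same log pipeline as the rest of the cluster.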

Error Handling and Alerting

Establish a comprehensive error-handling and alerting system to promptly identify and respond to issues or failures in the integration. For example, set up alerts and notifications for critical events, such as failures in communication with the Kubernetes API or unexpected errors in the ChatGPT agent.

This will help you proactively address problems and ensure smooth operation.
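A common building block here is retrying transient failures, such as a timeout when calling the OpenAI API or the Kubernetes API, with exponential backoff, and re-raising once retries are exhausted so the failure can trigger an alert. The `retry` helper below is a generic sketch, not part of any library; the attempt count, base delay, and 10% jitter are arbitrary defaults.

```python
import random
import time


def retry(func, attempts=3, base_delay=1.0, exceptions=(Exception,), sleep=time.sleep):
    """Call func(), retrying on the given exceptions with exponential backoff.

    Re-raises the last exception once all attempts are used up.
    """
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except exceptions:
            if attempt == attempts:
                raise
            # Delay doubles each attempt (base, 2*base, 4*base, ...) plus up to 10% jitter
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay * 0.1))
```

In the agent, a call could be wrapped as `retry(lambda: interact_chatgpt(problem), exceptions=(requests.RequestException,))`; if every attempt fails, the exception propagates to whatever alerting you have in place instead of being silently swallowed.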

Scalability and Load Balancing

Plan for scalability and load balancing within your integrated setup. Consider utilizing Kubernetes features like horizontal pod autoscaling and load balancing to efficiently handle varying workloads and user demands.

This helps keep your ChatGPT agent performant and responsive as demand changes.
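To see how horizontal pod autoscaling picks a replica count, the Kubernetes documentation describes its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when the ratio is within a tolerance (0.1 by default). The function below is a simplified sketch of that rule for reasoning about how a Deployment would scale. It ignores details such as stabilization windows, pod readiness, and multiple metrics, and the min/max bounds are arbitrary example values.

```python
import math


def hpa_desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                         min_replicas: int = 1, max_replicas: int = 10,
                         tolerance: float = 0.1) -> int:
    """Approximate the HorizontalPodAutoscaler's core scaling rule.

    desired = ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within the tolerance, then clamped to [min, max].
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to the target: no change
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Two replicas averaging 90% CPU against a 50% target
    print(hpa_desired_replicas(2, 90, 50))
```

For example, two replicas averaging 90% CPU against a 50% target give ceil(2 × 1.8) = 4 replicas, while an average of 52% stays at the current count because the ratio falls within the tolerance.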

Backup and Disaster Recovery

Implement backup and disaster recovery mechanisms to protect your integrated environment. Regularly back up critical data, configurations, and models used by the ChatGPT agent.

Furthermore, create and test disaster recovery procedures to minimize downtime and data loss in the event of system failures or disasters.

Continuous Integration and Deployment

Implement a robust CI/CD (Continuous Integration/Continuous Deployment) pipeline to streamline the deployment and updates of your integrated system.

Additionally, automate the build, testing, and deployment processes for both the Kubernetes infrastructure and the ChatGPT agent to ensure a reliable and efficient release cycle.

Documentation and Collaboration

Maintain detailed documentation of your integration setup, including configurations, deployment steps, and troubleshooting guides. Also, encourage collaboration and knowledge sharing among team members working on the integration.

This will facilitate better collaboration, smoother onboarding, and effective troubleshooting in the future.

By incorporating these additional recommendations into your integration approach, you can further enhance the reliability, scalability, and maintainability of your Kubernetes and ChatGPT integration.

Conclusion

Integrating Kubernetes with ChatGPT (OpenAI) offers numerous benefits for managing operations and applications within Kubernetes clusters. By adhering to the best practices and following the step-by-step guide provided in this resource, you will be well-equipped to leverage the capabilities of ChatGPT for automating tasks and optimizing your Kubernetes environment.

The combination of Kubernetes' advanced container orchestration capabilities and ChatGPT's contextual language generation empowers you to streamline operations, enhance efficiency, enable scalability, and facilitate real-time monitoring.

Whether it's automating deployments, scaling applications, or troubleshooting issues, the integration of Kubernetes and ChatGPT can significantly improve the management and performance of your Kubernetes infrastructure.

As you embark on this integration journey, remember to prioritize security measures, ensure continuous monitoring, and consider customizing the ChatGPT model with Kubernetes-specific data for more precise results.

Maintaining version control and keeping track of Kubernetes configurations will also prove invaluable for troubleshooting and future updates.