Securing Your Containers—Top 3 Challenges

Containers, a cost-effective and simpler alternative to virtual machines, have revolutionized application delivery. They have dramatically reduced the IT labor and resources needed to manage application infrastructure. Yet software teams run into many roadblocks when securing containers and containerized ecosystems, especially enterprise teams accustomed to more traditional network security processes and strategies. We have often preached that containers offer better security because they isolate the application from the host system and from each other. Somewhere along the way, we made it sound as if they are inherently secure and almost impenetrable to threats. But how far-fetched is this idea? Let’s dive right into it.

Let’s get a high-level view of what the market looks like. According to Business Wire, the global container security market is projected to reach $3.9 billion by 2027, a 23.5% CAGR.

Much like any other software, containerized applications can fall prey to security vulnerabilities, including bugs, inadequate authentication and authorization, and misconfiguration.

Container Threat Model

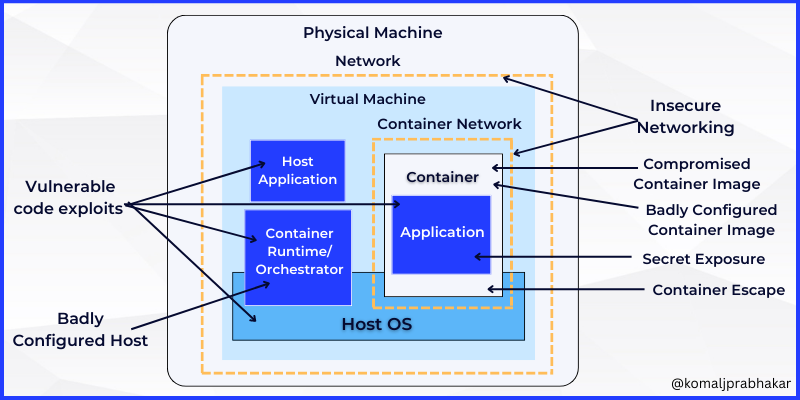

Let’s understand the container threat model below:

Possible Vulnerable Factors in a Container

- External attackers trying to access one of our deployments from outside (for example, the Tesla cryptojacking incident).

- Internal attackers who have some level of access to the prod environment (not necessarily admin).

- Malicious insiders: privileged internal users, such as developers and administrators, who have access to the deployment.

- Inadvertent internal actors who might accidentally cause problems, for example by carelessly storing a secret key or certificate in the container image.

- Ports opened in servers or firewalls when companies introduce a new service or cut waiting times to improve the customer experience. If not thoroughly guarded, these can become a passage for attackers.

There are several routes by which your container security can be compromised, and the image above summarizes them. Let’s discuss some of these factors in more detail.

Challenges

1. The Fault in Container Images

It’s not all about malicious software. Poorly configured container images can introduce vulnerabilities too. The problem begins when people assume they can build their own image, or download one from the cloud, and start using it right away. New vulnerabilities are disclosed every day, so every container image needs to be scanned individually before it can be considered secure.

Some Known Cases To Avoid

- What if the image launches an extraneous daemon or service that allows unauthorized network access?

- What if it’s configured to work with more user privileges than are necessary?

- What if a secret key or credential is stored within the image?

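Note: A related point to keep in mind with all of the above cases is that Docker manages its own network rules on the host, so a port published by a container can be reachable even when your local firewall rules appear to block it.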
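The cases listed above can be checked mechanically before an image is used. Here is a minimal sketch, assuming the input is the `Config` section of an image as printed by `docker inspect`. The function name, the `allowed_ports` parameter, and the secret-name pattern are hypothetical choices for illustration, and a real scanner would look far deeper, including into the image layers.

```python
import re

# Hypothetical pattern for variable names that often hold credentials.
SECRET_NAME = re.compile(r"(PASSWORD|SECRET|TOKEN|API_?KEY|PRIVATE_?KEY)", re.IGNORECASE)


def audit_image_config(config, allowed_ports=frozenset()):
    """Return a list of findings for one image's "Config" section."""
    findings = []

    # An empty User field means the container process runs as root by default.
    user = (config.get("User") or "").strip()
    if user in ("", "0", "root") or user.startswith(("0:", "root:")):
        findings.append("runs as root")

    # Ports the image exposes beyond what the service is expected to need.
    for port in sorted(config.get("ExposedPorts") or {}):
        if port not in allowed_ports:
            findings.append(f"unexpected exposed port {port}")

    # Environment variables whose names suggest a baked-in credential.
    for entry in config.get("Env") or []:
        name = entry.split("=", 1)[0]
        if SECRET_NAME.search(name):
            findings.append(f"possible secret in env var {name}")

    return findings


# Made-up example data, not taken from a real image.
example_config = {
    "User": "",
    "ExposedPorts": {"22/tcp": {}, "8080/tcp": {}},
    "Env": ["PATH=/usr/bin", "DB_PASSWORD=example"],
}
example_findings = audit_image_config(example_config, allowed_ports={"8080/tcp"})
```

For the example data, all three cases are flagged: the root user, the extra port 22, and the credential-like variable name. Matching on names alone will miss secrets stored in files, so treat this as a first filter only.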

Recommendations

- Pulling images from trusted container registries, meaning registries that are well configured. The term usually refers to private registries, but a private registry qualifies only if it uses encrypted, authenticated connections. Access should rely on credentials federated with existing network security controls.

- The container registry should undergo regular maintenance checks to keep it free of stale images with lingering vulnerabilities.

- Software teams need to agree on shared practices, such as blue-green deployments or rollbacks of container changes, before pushing changes into production.

2. Watch Out for Your Orchestration Security

Popular orchestration tools like Kubernetes cannot be ignored when addressing security issues. The orchestrator has become a prime attack surface.

According to Salt Security, approximately 34% of organizations have no API security strategy in place at all. A further 27% say they have only a basic strategy that involves minimal scanning and manual reviews of API security status, with no controls over them.

When Kubernetes handles multiple containers, it exposes a large attack surface. Following the industry-wide practice of field-level tokenization is not enough if we do not also secure the orchestrator’s ecosystem, because it is then only a matter of time before sensitive information is decoded and exposed.

Recommendations

- Ensuring the administrative interface of the orchestrator is properly secured, which can include two-factor authentication and at-rest encryption of data.

- Separating network traffic into discrete virtual networks, based on the sensitivity of the traffic being transmitted. (For example, public-facing web apps can be categorized as low-sensitivity workloads and something like tax reporting software as high-sensitivity workloads, and the two kept apart. The idea is to ensure each host runs containers of a single sensitivity level.)

- Encrypting all network traffic between cluster nodes end to end, with authenticated network connections between cluster members.

- Introducing nodes into clusters securely and maintaining a persistent identity for each node throughout its lifecycle, so that compromised nodes can be isolated or removed without affecting the cluster’s security.
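The idea that each host should run containers of only one sensitivity level can be verified against a placement list. The sketch below assumes placements are available as `(host, container, sensitivity)` tuples. That shape, the function name, and the host and workload names are all hypothetical; in practice the data would come from the orchestrator.

```python
from collections import defaultdict


def hosts_mixing_sensitivity(placements):
    """Map each host that runs more than one sensitivity level to those levels.

    `placements` is an iterable of (host, container, sensitivity) tuples.
    """
    levels_by_host = defaultdict(set)
    for host, _container, sensitivity in placements:
        levels_by_host[host].add(sensitivity)
    # Keep only hosts where workloads of different sensitivity share a node.
    return {
        host: sorted(levels)
        for host, levels in levels_by_host.items()
        if len(levels) > 1
    }


# Made-up placements for illustration.
placements = [
    ("node-a", "public-web", "low"),
    ("node-a", "marketing-site", "low"),
    ("node-b", "tax-reporting", "high"),
    ("node-b", "public-web-2", "low"),
]
violations = hosts_mixing_sensitivity(placements)
```

Here `node-b` is reported because it runs both a high-sensitivity and a low-sensitivity workload, while `node-a` passes.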

3. Prevent the “Container Escape Scenario”

Popular container runtimes such as containerd, CRI-O, and rkt may have hardened their security over time, but they can still contain bugs. This matters because such bugs can allow malicious code running inside a container to break out onto the host, the so-called “container escape”.

If you remember, back in 2019 a vulnerability was discovered in runC, nicknamed Runcescape.

This bug (CVE-2019-5736) had the potential to let attackers break out of the sandbox environment and gain root access to the host server, which could compromise an entire infrastructure. At first, the assumption was that a malicious Docker image was to blame, since the attack requires a malicious process inside the container. After testing, it turned out to be a bug in runC.
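A quick first check for this kind of runtime bug is to compare the installed version against the first fixed release. The sketch below relies on the CVE-2019-5736 description, which names Docker releases before 18.09.2 as shipping an affected runC. The function names are hypothetical, and a version string alone is not conclusive, because some distributions backport fixes without changing the upstream version number.

```python
import re

# Per the CVE-2019-5736 description, Docker before 18.09.2 used an affected runC.
FIRST_FIXED_DOCKER = (18, 9, 2)


def parse_version(text):
    """Extract the leading major.minor.patch numbers from a version string."""
    match = re.match(r"(\d+)\.(\d+)\.(\d+)", text.strip())
    if match is None:
        raise ValueError(f"unrecognized version string: {text!r}")
    return tuple(int(part) for part in match.groups())


def docker_predates_runc_fix(version_text):
    """True when the Docker version is older than the first fixed release."""
    return parse_version(version_text) < FIRST_FIXED_DOCKER
```

For instance, `docker_predates_runc_fix("18.09.1")` is true and `docker_predates_runc_fix("18.09.2")` is false. Suffixes such as `-ce` are ignored by the parser.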

Security Needs to Shift Left

In a microservices-based environment, best practice is to automate deployments at every step. We are not agile if we still deploy manually on a weekly or monthly cadence. To actually shift left in application delivery, we need a modern toolchain of security plugins and extensions throughout the pipeline.

This is how it works: if a vulnerability is present in the image, the process should stop right there in the build stage. RBAC policies should be audited periodically to monitor all access levels. All tools and processes should also align with the CIS Benchmarks.
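Stopping the process in the build stage can be as simple as a gate that turns scanner findings into a pass or fail decision. This is a minimal sketch, assuming the scanner output has already been parsed into dicts with `id` and `severity` keys. Those key names, the severity scale, and the `fail_at` threshold are hypothetical and would need to match whatever scanner is actually in use.

```python
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}


def build_gate(findings, fail_at="high"):
    """Return (passed, blocking) for a list of scanner findings.

    Each finding is a dict with hypothetical "id" and "severity" keys.
    """
    threshold = SEVERITY_RANK[fail_at]
    # Unknown severity labels default to the threshold, so they block the build.
    blocking = [
        finding for finding in findings
        if SEVERITY_RANK.get(finding["severity"].lower(), threshold) >= threshold
    ]
    return (not blocking, blocking)


# Made-up findings for illustration.
findings = [
    {"id": "EXAMPLE-0001", "severity": "low"},
    {"id": "EXAMPLE-0002", "severity": "CRITICAL"},
]
passed, blocking = build_gate(findings)
```

In a pipeline step, the script would exit with a non-zero status when `passed` is false, which is what makes the CI system stop the build.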

A good approach is to adopt security-as-code practices: write the security manifest as Kubernetes-native YAML files, using custom resource definitions. These are human-readable and declare the security state of the application at runtime. The manifest can then be pushed into the prod environment and protected with a zero-trust model, so that code never changes outside the pipeline.
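To make the idea of a declared security state concrete, here is a small sketch that compares a Pod manifest, already loaded into a Python dict, against a required policy. It is a simpler stand-in for the custom resource definition approach described above, not an implementation of it. The four `securityContext` fields are real Kubernetes fields, while the policy values, function name, and example manifest are assumptions for illustration. The check is deliberately strict: a field left unset counts as a violation, so the desired state has to be declared explicitly. It also looks only at container-level settings, not the pod-level `securityContext`.

```python
# A hypothetical declared policy: values every container's securityContext must carry.
REQUIRED_SECURITY_CONTEXT = {
    "runAsNonRoot": True,
    "privileged": False,
    "allowPrivilegeEscalation": False,
    "readOnlyRootFilesystem": True,
}


def policy_violations(pod_manifest, required=REQUIRED_SECURITY_CONTEXT):
    """List (container_name, field, actual_value) for every mismatch."""
    violations = []
    for container in pod_manifest.get("spec", {}).get("containers", []):
        context = container.get("securityContext") or {}
        for field, expected in required.items():
            actual = context.get(field)  # None when the field is not set
            if actual != expected:
                violations.append((container.get("name"), field, actual))
    return violations


# Made-up manifest for illustration.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "demo"},
    "spec": {
        "containers": [
            {
                "name": "app",
                "image": "example/app:1.0",
                "securityContext": dict(REQUIRED_SECURITY_CONTEXT),
            },
            {
                "name": "sidecar",
                "image": "example/sidecar:1.0",
                "securityContext": {"privileged": True},
            },
        ]
    },
}
pod_findings = policy_violations(pod)
```

The `app` container passes, while `sidecar` is reported for running privileged and for leaving the other three fields undeclared. Run in the pipeline, a check like this keeps the declared state and the deployed state from drifting apart.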

Wrapping Up

It’s time to wrap up our thoughts on containerization and container security. My goal was to highlight some easily fixable yet highly neglected areas in the practice of containerization. Today, automated security processes across CI/CD pipelines and declarative zero-trust security policies are the need of the hour. They enhance developer productivity and are part of DevOps best practices.