Everything You Need to Know and Do With Load Balancers

Hey there, I'm Roman, a Cloud Architect at Gart with over 15 years of experience. Today, I want to delve into the world of Load Balancers with you. In simple terms, a Load Balancer is like the traffic cop of the internet.

Its main gig? Distributing incoming web traffic among multiple servers. But let's not try to swallow this elephant in one go; we'll munch on it one piece at a time, step by step. I'll break down this complex topic into digestible chunks.

Ready to dive in?

How Load Balancers Work

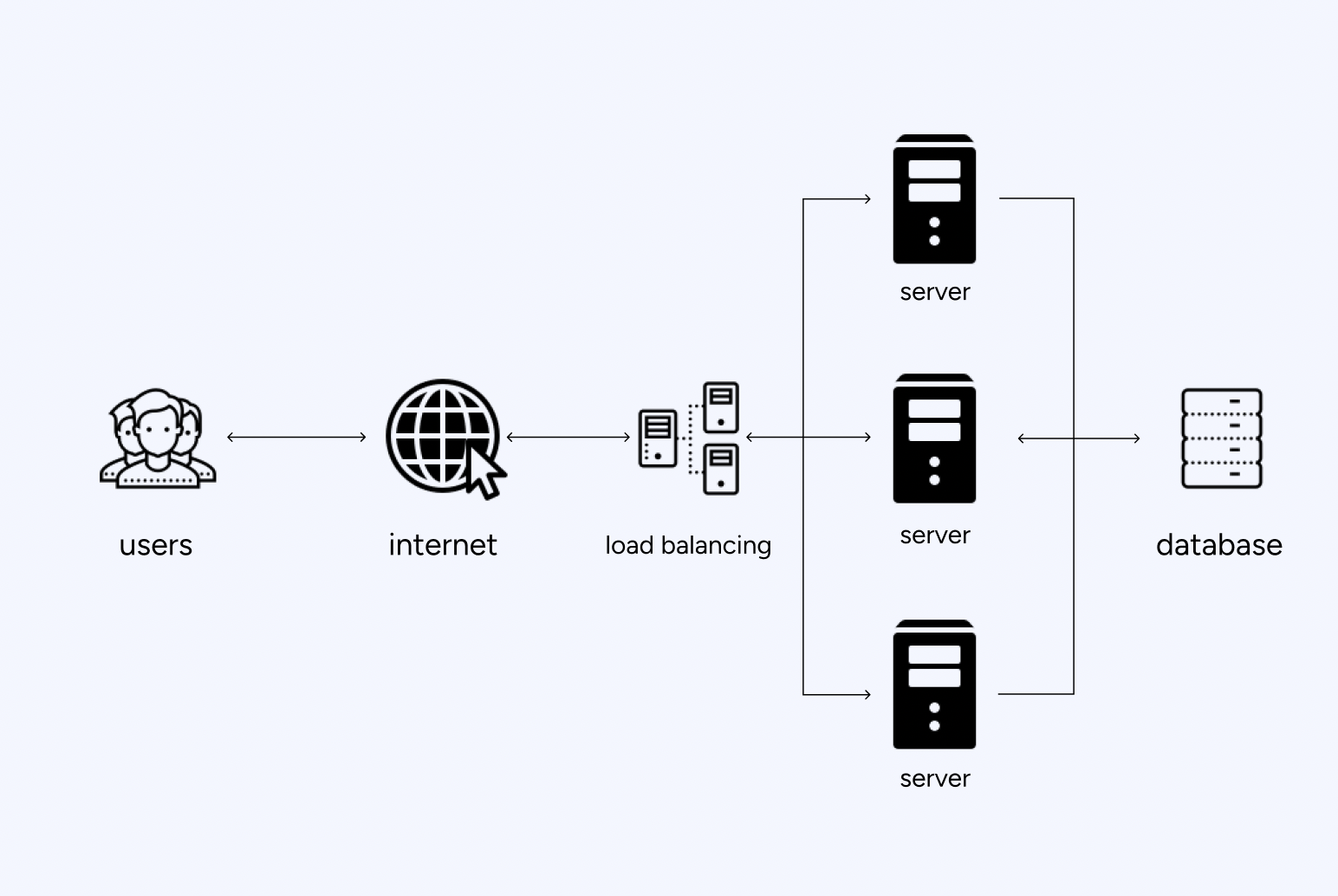

Alright, let's demystify how Load Balancers work. Think of them as the maestros of web traffic. When you've got multiple servers hanging out, the Load Balancer steps in and decides who gets what. It's like playing traffic cop in a bustling city but for data.

When your website becomes the hottest spot in town, tons of visitors start pouring in. The Load Balancer takes a look at the incoming traffic and says, "You go to Server A, you to Server B, and you over there to Server C."

Load Balancers don't just randomly toss traffic around; they're smart about it. Depending on the algorithm in use, they can track each server's workload, making sure nobody's overwhelmed.

At its core, the load-balancing process involves the efficient allocation of incoming requests among a group of servers, typically referred to as a server farm or cluster. This ensures that no single server is overwhelmed by traffic while others remain underutilized. Load balancing can be implemented at various layers of the OSI model, including the application layer, transport layer, and network layer, depending on the specific requirements and architecture of the system.

Load balancers employ various algorithms to distribute incoming traffic among the servers in a way that optimizes performance and resource utilization. One common method is the Round Robin algorithm, where each new request is directed to the next server in line, creating a cycle. This simplistic approach ensures a relatively even distribution of traffic but may not consider each server's actual load or capacity.

More sophisticated algorithms, such as Least Connections or Weighted Round Robin, take into account factors like server load, response times, and server capacity. Least Connections directs traffic to the server with the fewest active connections, while Weighted Round Robin assigns a weight to each server based on its capacity, enabling a more nuanced distribution of the workload.

Additionally, some load balancers can perform content-based routing, considering specific characteristics of the incoming requests, such as URL patterns or request types. This allows for a more intelligent distribution of traffic based on the nature of the requests, optimizing the utilization of specialized server resources.
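To make content-based routing a bit more concrete, here is a minimal sketch that picks a server pool from the URL path. The pool names, the prefixes, and the `choose_pool` function are all made up for illustration; real load balancers express these rules in their own configuration syntax.

```python
from typing import List, Tuple

# Hypothetical routing table: URL path prefix -> name of a server pool.
ROUTES: List[Tuple[str, str]] = [
    ("/api/", "api-pool"),
    ("/static/", "static-pool"),
]
DEFAULT_POOL = "web-pool"  # assumed fallback for everything else


def choose_pool(path: str) -> str:
    """Return the pool for the first prefix that matches the request path."""
    for prefix, pool in ROUTES:
        if path.startswith(prefix):
            return pool
    return DEFAULT_POOL
```

With this table, a request for `/api/users` would land on the API pool, while `/index.html` falls through to the default pool.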

Hardware Load Balancers

Hardware Load Balancers are the muscle cars of the load balancing world. They're physical devices, built to handle the heavy lifting. Picture a dedicated, robust machine sitting in your server room, orchestrating the traffic flow. These beasts are all about raw power and come with specialized hardware to optimize performance.

Pros

- Performance Beast: Hardware Load Balancers can handle massive traffic without breaking a sweat.

- Dedicated Hardware: Since they're standalone devices, they often come with dedicated processors and memory, ensuring top-notch performance.

- Reliability: Less susceptible to issues like software bugs or operating system quirks.

Cons

- Cost: Heavy-duty performance comes with a hefty price tag.

- Scalability Challenges: Scaling up might mean getting a new hardware balancer, and that's not always a walk in the park.

- Flexibility: Configuring these beasts can sometimes feel like steering an oil tanker; they're powerful, but maneuvering takes time.

Software Load Balancers

Software Load Balancers are the digital chameleons of the load balancing realm. Unlike their hardware counterparts, these are bits of code, often running on standard servers or virtual machines. They bring flexibility to the party, adapting to the digital landscape like seasoned shape-shifters.

Pros

- Cost-Effective: Software Load Balancers often play well with your existing infrastructure, saving you some serious cash.

- Scalability: Need to handle more traffic? No problem. Scale up by spinning up more instances in your virtual environment.

- Configurability: Tweak and tune settings without needing a hardware overhaul.

Cons

- Resource Utilization: When they share a server with other applications, they compete for the same CPU and memory, so performance can take a hit during peak times.

- Complexity: Setting up and fine-tuning software load balancers might require a bit more technical know-how than their hardware counterparts.

In the tug-of-war between hardware and software, it often boils down to your specific needs, budget, and the kind of digital traffic jam you're trying to navigate. Choose wisely, my friend.

Load Balancing Algorithms

Round Robin

Imagine a friendly game of pass-the-parcel. Round Robin distributes incoming traffic equally among servers in a circular order. Great for scenarios where all servers are pretty much equal in terms of processing power. It's like the "everybody gets a turn" strategy. While Round Robin is easy to implement and transparent, it may not consider variations in server load or capacity, potentially leading to suboptimal resource utilization.
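Here's a tiny sketch of the "everybody gets a turn" idea. The `RoundRobinBalancer` class and the server names are hypothetical, just enough to show the rotation.

```python
from itertools import cycle
from typing import Iterable


class RoundRobinBalancer:
    """Hands out servers in a fixed circular order (illustrative only)."""

    def __init__(self, servers: Iterable[str]) -> None:
        server_list = list(servers)
        if not server_list:
            raise ValueError("at least one server is required")
        # cycle() repeats the list forever: a, b, c, a, b, c, ...
        self._rotation = cycle(server_list)

    def next_server(self) -> str:
        return next(self._rotation)


balancer = RoundRobinBalancer(["server-a", "server-b", "server-c"])
picks = [balancer.next_server() for _ in range(4)]
# The fourth request wraps around to the first server again.
```

Notice that nothing here looks at how busy a server is, which is exactly the limitation described above.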

Least Connections

This algorithm sends new connections to the server with the fewest active connections. It's like picking the cashier with the shortest line at the grocery store. Perfect for situations where server loads vary, ensuring that each server has a similar number of active connections.
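The shortest-line idea can be sketched in a few lines. The class name and its `acquire`/`release` methods are assumptions for this example; a real balancer tracks connections at the network level rather than through explicit calls.

```python
from typing import Dict, Iterable


class LeastConnectionsBalancer:
    """Picks the server with the fewest active connections (illustrative only)."""

    def __init__(self, servers: Iterable[str]) -> None:
        self.active: Dict[str, int] = {server: 0 for server in servers}
        if not self.active:
            raise ValueError("at least one server is required")

    def acquire(self) -> str:
        # min() returns the first server with the lowest count on ties.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Call this when a connection closes so the count stays accurate.
        if self.active[server] > 0:
            self.active[server] -= 1
```

The important bit is `release`: if closed connections are never subtracted, the counts drift and the balancer ends up behaving like plain Round Robin.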

Least Response Time

This smart algorithm directs traffic to the server with the fastest response time. It's like choosing the express lane at the toll booth. Ideal for optimizing user experience by sending requests to the server that can handle them most quickly.
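One way to approximate "fastest response time" is to keep a moving average of observed latencies per server. The sketch below uses an exponentially weighted average with a smoothing factor `alpha`; both the class and the choice of averaging are assumptions for illustration, since products measure response time in different ways.

```python
from typing import Dict, Iterable, Optional


class LeastResponseTimeBalancer:
    """Picks the server with the lowest average latency (illustrative only)."""

    def __init__(self, servers: Iterable[str], alpha: float = 0.3) -> None:
        self.alpha = alpha  # weight given to the newest measurement
        self.avg_ms: Dict[str, Optional[float]] = {s: None for s in servers}
        if not self.avg_ms:
            raise ValueError("at least one server is required")

    def record(self, server: str, elapsed_ms: float) -> None:
        previous = self.avg_ms[server]
        if previous is None:
            self.avg_ms[server] = elapsed_ms
        else:
            # Blend the new sample into the running average.
            self.avg_ms[server] = (1 - self.alpha) * previous + self.alpha * elapsed_ms

    def pick(self) -> str:
        # Servers with no measurements yet count as 0 ms so they get tried first.
        return min(self.avg_ms, key=lambda s: self.avg_ms[s] or 0.0)
```

The smoothing keeps one slow request from immediately banishing a server, while a sustained slowdown still pushes traffic elsewhere.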

Weighted Round Robin

A leveled-up version of Round Robin, this assigns a "weight" to each server, determining its proportion of traffic. It's like giving more tickets to the servers that can handle a heavier load. Use it when servers have different capacities and you want to distribute traffic based on their capabilities.
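Here is a rough sketch of one common variant, often called smooth weighted round robin, which spreads the heavier server's turns out instead of sending them back to back. The class name and the weights are made up for the example.

```python
from typing import Dict


class WeightedRoundRobinBalancer:
    """Smooth weighted round robin over a fixed set of servers (illustrative only)."""

    def __init__(self, weights: Dict[str, int]) -> None:
        if not weights or any(w <= 0 for w in weights.values()):
            raise ValueError("weights must be a non-empty mapping of positive integers")
        self.weights = dict(weights)
        self.total = sum(self.weights.values())
        self.current = {server: 0 for server in self.weights}

    def next_server(self) -> str:
        # Every server earns credit equal to its weight on each request.
        for server, weight in self.weights.items():
            self.current[server] += weight
        # The server with the most credit wins and pays back the total.
        chosen = max(self.current, key=self.current.get)
        self.current[chosen] -= self.total
        return chosen


balancer = WeightedRoundRobinBalancer({"big": 3, "small": 1})
picks = [balancer.next_server() for _ in range(8)]
```

Over any eight consecutive requests in this setup, `big` gets six and `small` gets two, matching the 3:1 weights.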

Weighted Least Connections

Similar to Least Connections, but with a weight factor. It sends new connections to the server with the fewest active connections relative to its weight. Useful when servers have different capacities, and you want to balance the load based on their relative strengths.
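A simple way to picture this is to compare servers by active connections divided by weight. The sketch below does exactly that; the class and its methods are hypothetical, and real implementations may compute the score differently.

```python
from typing import Dict


class WeightedLeastConnectionsBalancer:
    """Picks the lowest connections-to-weight ratio (illustrative only)."""

    def __init__(self, weights: Dict[str, int]) -> None:
        if not weights or any(w <= 0 for w in weights.values()):
            raise ValueError("weights must be a non-empty mapping of positive integers")
        self.weights = dict(weights)
        self.active = {server: 0 for server in self.weights}

    def acquire(self) -> str:
        # A server with weight 2 may hold twice as many connections
        # as a server with weight 1 before it looks equally busy.
        server = min(self.active, key=lambda s: self.active[s] / self.weights[s])
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        if self.active[server] > 0:
            self.active[server] -= 1
```

With weights of 2 and 1, the first three connections go to the bigger server, the smaller one, and the bigger one again.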

Comparison and Use Cases for Each Algorithm

- Round Robin vs. Least Connections: If servers are similar, Round Robin is a simple choice. If not, go for Least Connections to distribute the load more intelligently.

- Least Response Time vs. Weighted Round Robin: If response time matters most, use Least Response Time. If you have different server capacities, opt for a Weighted Round Robin to balance the load effectively.

Choosing the right algorithm is like picking the right tool for the job. Each has its strengths, so it's all about understanding your servers' capabilities and the nature of your traffic.

Common Protocols Used With Load Balancers

| Protocol | Description |
|---|---|
| HTTP/HTTPS | Foundation of web communication; HTTPS adds encryption for secure data transfer. |
| TCP | Ensures reliable data transmission on the internet; vital for various applications and services. |
| UDP | Lightweight protocol for real-time applications like video streaming and online gaming. |
| SSL/TLS | SSL establishes a secure connection; TLS, its successor, ensures encrypted data transmission in transit. |

HTTP (Hypertext Transfer Protocol)

The foundation of data communication on the World Wide Web. Load balancers distributing web traffic often deal with HTTP requests. Think of it as the language your browser speaks when asking for a webpage.

HTTPS (Hypertext Transfer Protocol Secure)

The secure sibling of HTTP. It adds a layer of encryption (thanks to SSL/TLS) to protect data during transmission. Load balancers play traffic cop for secure web communication.

TCP (Transmission Control Protocol)

A fundamental protocol for communication on the internet. Load balancers handling TCP traffic ensure that data gets from one point to another reliably and without errors. It's like the load balancer making sure each piece of the puzzle arrives intact.

UDP (User Datagram Protocol)

A more lightweight protocol suitable for applications where speed matters more than ensuring every bit arrives perfectly. Load balancers managing UDP traffic are like the traffic directors for real-time applications, such as video streaming or online gaming.

SSL (Secure Sockets Layer)

The older brother of TLS, designed to create a secure connection between a client and a server. It is now deprecated, and TLS has taken its place. Think of it as the load balancer ensuring a secret handshake before allowing entry.

TLS (Transport Layer Security)

The newer and more secure version succeeding SSL. Load balancers ensuring TLS are like the guardians of the encrypted tunnel, making sure no unauthorized eyes get a peek.

Load Balancers in Cloud Environments

Load balancers and cloud services go together like peanut butter and jelly. Cloud providers offer load balancing services that seamlessly integrate with their ecosystems.

| Aspect | Providers and Technologies |
|---|---|
| Integration with Cloud Services | AWS: Elastic Load Balancing (ELB) |
| | Azure: Azure Load Balancer |
| | Google Cloud: Google Cloud Load Balancer |
| Load Balancing in Virtualized Environments | VMware: NSX Load Balancer |
| | Microsoft Hyper-V Load Balancing |
| Considerations for Cloud-Native Applications | Container Orchestration (e.g., Kubernetes) |
| | Microservices Architecture |
| | Auto-Scaling |

- Elastic Load Balancing (ELB) in AWS: Amazon's ELB automatically distributes incoming traffic across multiple targets, be they instances or containers. It scales with your application and plays well with other AWS services.

- Azure Load Balancer in Microsoft Azure: Azure's load balancer handles traffic distribution for virtual machines and services within the Azure environment. It's your go-to guy for ensuring availability and reliability.

Virtualization is like load balancing's best friend. Load balancers in virtual environments work with virtual machines (VMs) instead of physical servers.

- VMware NSX Load Balancer: VMware's solution operates within its software-defined data center (SDDC) framework. It's the traffic conductor for VMs, ensuring they're not stepping on each other's toes.

- Microsoft Hyper-V Load Balancing: For those in the Hyper-V world, load balancing helps distribute the load across VMs, optimizing resource usage and ensuring a smooth user experience.

Cloud-native applications are born and bred in the cloud. Load balancers play a crucial role in their performance, scalability, and overall health.

Load balancing becomes dynamic with container orchestration tools. Kubernetes, for example, has its own load balancing mechanisms to distribute traffic among containers.

In a cloud-native world, applications often consist of microservices. Load balancers must intelligently route traffic among these services, ensuring each plays its part in delivering a cohesive user experience.

Cloud-native applications thrive on scalability. Load balancers need to seamlessly adapt to fluctuating loads, scaling up or down as needed.
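To picture what "adapting to fluctuating loads" means for the balancer itself, here is a minimal sketch of a server pool that can grow and shrink while requests keep flowing. The `DynamicPool` class is hypothetical; in a real cloud setup, the provider's auto-scaling service registers and deregisters instances for you.

```python
from typing import List


class DynamicPool:
    """Round-robin pool whose membership can change at runtime (illustrative only)."""

    def __init__(self) -> None:
        self._servers: List[str] = []
        self._index = 0

    def add(self, server: str) -> None:
        # Called when a new instance is scaled up.
        if server not in self._servers:
            self._servers.append(server)

    def remove(self, server: str) -> None:
        # Called when an instance is scaled down or taken out of service.
        if server in self._servers:
            self._servers.remove(server)

    def next_server(self) -> str:
        if not self._servers:
            raise RuntimeError("no servers available")
        # The modulo keeps the rotation valid even after the pool size changes.
        server = self._servers[self._index % len(self._servers)]
        self._index += 1
        return server
```

The key property is that a removed server stops receiving traffic on the very next request, and a newly added one joins the rotation without a restart.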