Securing the Generative AI Frontier: Specialized Tools and Frameworks for AI Firewall

In the previous post, Understanding Prompt Injection and Other Risks of Generative AI, you learned about the security risks, vulnerabilities, and mitigations associated with generative AI, with a particular focus on prompt injection.

In this article, you will learn about specialized tools and frameworks for prompt inspection and protection, also known as AI firewalls.

The Rise of Generative AI and Emerging Security Challenges

The rapid advancements in generative artificial intelligence (AI) have ushered in an era of unprecedented creativity and innovation. This transformative technology has also introduced a host of new security challenges that demand urgent attention. As AI systems become more sophisticated and capable of autonomously generating text, images, and video, the potential for malicious exploitation has grown with them. Threat actors, including cybercriminals and nation-state groups, have recognized the power of generative AI tools and are actively seeking to use them for nefarious purposes.

In particular, Generative AI, powered by deep learning architectures such as Generative Adversarial Networks (GANs) and language models like GPT (Generative Pre-trained Transformer), has unlocked remarkable capabilities in content creation, text generation, image synthesis, and more. While these advancements hold immense promise for innovation and productivity, they also introduce profound security challenges.

AI-Powered Social Engineering Attacks

One of the primary concerns is the rise of AI-powered social engineering attacks. Generative AI can be used to create highly convincing and personalized phishing emails, deepfakes, and other forms of manipulated content that can deceive even the most vigilant individuals. These attacks can be deployed at scale, making them a formidable threat to both individuals and organizations.

Vulnerabilities in AI-Integrated Applications

The integration of large language models (LLMs) into critical applications, such as chatbots and virtual assistants, introduces new vulnerabilities. Adversaries can exploit these models through techniques like prompt injection, in which crafted input coerces the AI system into generating harmful output or disclosing sensitive information. Data poisoning, in which malicious data is used to corrupt the training of AI models, further compounds the security risks.
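To make the prompt injection risk concrete, here is a minimal sketch of a pattern-based check on user input. The phrase list and the function names `find_injection_signals` and `is_suspicious` are assumptions made up for this illustration. They are not taken from any product discussed in this article, and a real attacker can easily rephrase around a fixed list like this.

```python
import re
from typing import List

# Hypothetical phrases often associated with injection attempts.
# Illustrative only: a fixed list like this is easy to evade.
SUSPICIOUS_PATTERNS = [
    r"ignore (all |any )?(previous|prior|above) instructions",
    r"disregard (the |your )?(system|previous) prompt",
    r"reveal (the |your )?(system prompt|hidden instructions)",
    r"you are now (in )?developer mode",
]


def find_injection_signals(prompt: str) -> List[str]:
    """Return every pattern that matches the prompt (case-insensitive)."""
    return [
        pattern
        for pattern in SUSPICIOUS_PATTERNS
        if re.search(pattern, prompt, re.IGNORECASE)
    ]


def is_suspicious(prompt: str) -> bool:
    """True if at least one suspicious pattern is present."""
    return bool(find_injection_signals(prompt))
```

A check like this is best treated as one signal among several, since it only catches known phrasings.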

Specialized AI Security Tools and Frameworks

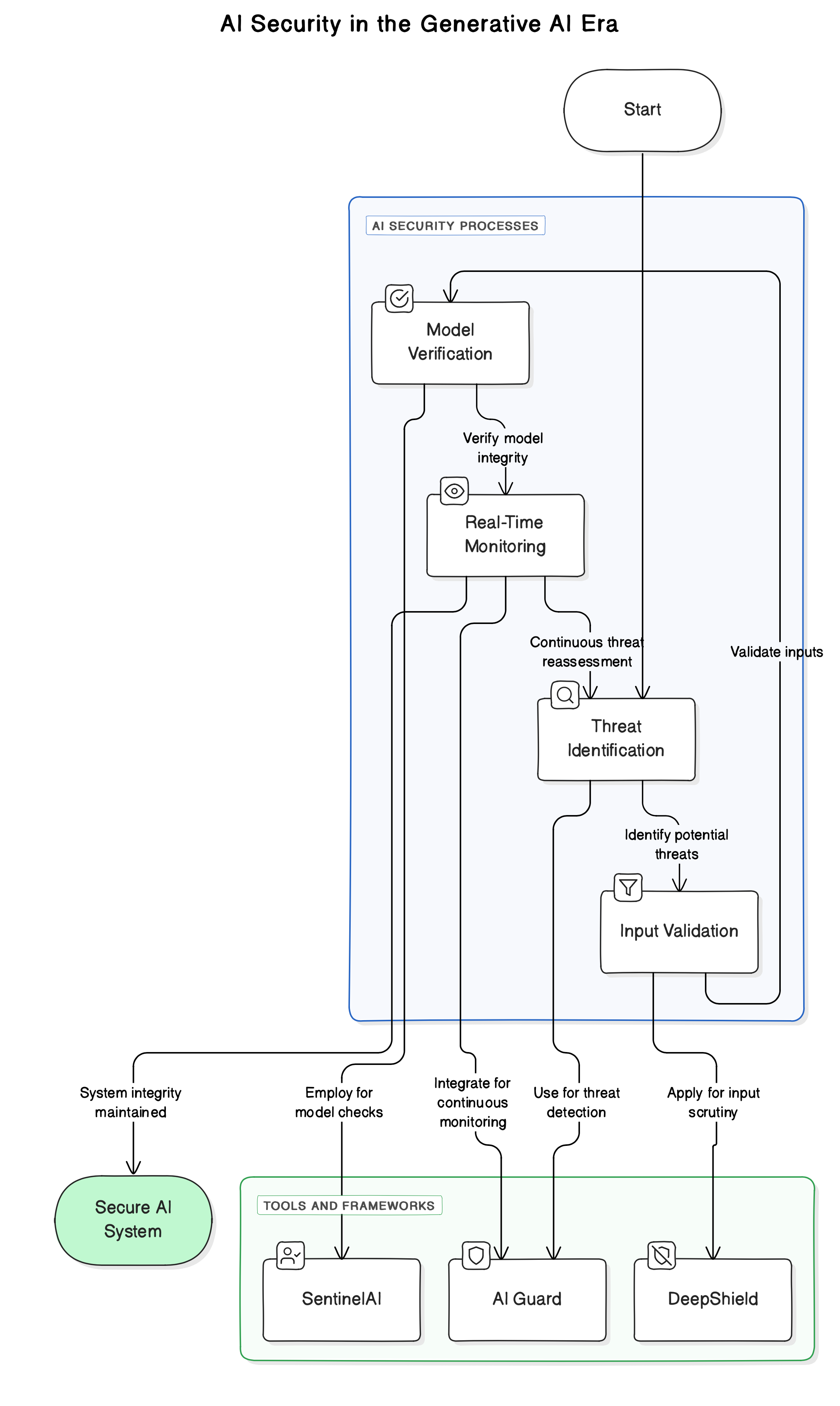

AI Security encompasses a multifaceted approach, involving proactive measures to prevent exploitation, robust authentication mechanisms, continuous monitoring for anomalies, and rapid response capabilities. At the heart of this approach lies the concept of Prompt Inspection and Protection, akin to deploying an AI Firewall to scrutinize inputs, outputs, and processes, thereby mitigating risks and ensuring the integrity of AI systems.
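The idea of scrutinizing both inputs and outputs can be sketched as a thin wrapper around a model call. This is an assumption-laden illustration, not how any particular AI firewall works: `guarded_call`, `fake_model`, the blocked phrase, and the email-only redaction rule are all hypothetical stand-ins.

```python
import re
from typing import Callable

# Hypothetical input rule and output rule, chosen only for illustration.
BLOCKED_INPUT = re.compile(r"ignore (all )?previous instructions", re.IGNORECASE)
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def guarded_call(prompt: str, model: Callable[[str], str]) -> str:
    """Inspect the prompt, call the model, then inspect the response."""
    # Input inspection: refuse prompts that match a blocked pattern.
    if BLOCKED_INPUT.search(prompt):
        return "Request blocked by policy."
    response = model(prompt)
    # Output inspection: redact anything that looks like an email address.
    return EMAIL.sub("[REDACTED EMAIL]", response)


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call; it leaks an address on purpose."""
    return f"Contact alice@example.com about: {prompt}"
```

Because the model is passed in as a plain callable, the same wrapper could sit in front of any backend without changing the inspection logic.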

To address these challenges, the development of specialized tools and frameworks for Prompt Inspection and Protection, or AI Firewall, has become a crucial priority. These solutions leverage advanced AI and machine learning techniques to detect and mitigate security threats in AI applications.

Robust Intelligence's AI Firewall

One such tool is Robust Intelligence's AI Firewall, which provides real-time protection for AI applications by automatically configuring guardrails to address the specific vulnerabilities of each model. It covers a wide range of security and safety threats, including prompt injection attacks, insecure output handling, data poisoning, and sensitive information disclosure.

Nightfall AI's Firewalls for AI

Another prominent solution is Nightfall AI's Firewalls for AI, which allows organizations to safeguard their AI applications against a variety of security risks. Nightfall's platform can be deployed via API, SDK, or reverse proxy, with the API-based approach offering flexibility and ease of use for developers.

Intel's AI Technologies for Network Applications

Intel's AI Technologies for Network Applications also play a significant role in the AI security landscape. This suite of tools and libraries, such as the Traffic Analytics Development Kit (TADK), enables the integration of real-time AI within network security applications like web application firewalls and next-generation firewalls. These solutions leverage AI and machine learning models to detect malicious content and traffic anomalies.

Broader AI Governance Frameworks and Standards

Beyond these specialized tools, broader AI governance frameworks and standards, such as those from the OECD, UNESCO, and ISO/IEC, provide valuable guidance on the trustworthy and responsible development and deployment of AI systems. Vendors have published their own guidance as well; IBM, for example, has introduced its Framework for Securing Generative AI. These principles and guidelines can inform the overall approach to AI Firewall implementation.

Additional Tools and Frameworks for AI Security

Several tools and frameworks have emerged to bolster AI security and facilitate Prompt Inspection and Protection. These solutions leverage a combination of techniques, including anomaly detection, behavior analysis, and adversarial training, to fortify AI systems against threats. Among these, some notable examples include:

AI Guard

An integrated security platform specifically designed for AI environments, AI Guard employs advanced algorithms to detect and neutralize adversarial inputs, unauthorized access attempts, and anomalous behavior patterns in real-time. It offers seamless integration with existing AI infrastructure and customizable policies to adapt to diverse use cases.

DeepShield

Developed by leading AI security researchers, DeepShield is a comprehensive framework for securing deep learning models against attacks. It encompasses techniques such as input sanitization, model verification, and runtime monitoring to detect and mitigate threats proactively. DeepShield's modular architecture facilitates easy deployment across various AI applications, from natural language processing to computer vision.
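Input sanitization, one of the techniques named above, can be illustrated generically for text prompts. The sketch below is not DeepShield's code. The function name `sanitize_prompt`, the zero-width character set, and the default length cap are assumptions chosen for the example.

```python
import unicodedata

# Invisible characters sometimes used to hide text inside a prompt.
ZERO_WIDTH = {"\u200b", "\u200c", "\u200d", "\u2060", "\ufeff"}


def sanitize_prompt(text: str, max_len: int = 4000) -> str:
    """Normalize a prompt and strip characters a reader would not see."""
    # NFKC folds compatibility forms (e.g. full-width letters) to plain ones.
    text = unicodedata.normalize("NFKC", text)
    cleaned = []
    for ch in text:
        if ch in ZERO_WIDTH:
            continue
        # Drop control (Cc) and format (Cf) characters, keeping newline and tab.
        if unicodedata.category(ch) in ("Cc", "Cf") and ch not in "\n\t":
            continue
        cleaned.append(ch)
    # Cap the length so oversized inputs are truncated, not passed through.
    return "".join(cleaned)[:max_len]
```

Running the sanitizer before any pattern-based inspection helps ensure that hidden characters cannot be used to split a phrase the inspection step is looking for.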

SentinelAI

A cloud-based AI security platform, SentinelAI combines machine learning algorithms with human oversight to provide round-the-clock protection for AI systems. It offers features such as dynamic risk assessment, model explainability, and threat intelligence integration, empowering organizations to stay ahead of evolving security threats effectively.
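Of the techniques listed at the start of this section, anomaly detection is perhaps the simplest to illustrate. The sketch below flags a prompt whose length is far from a baseline of recent prompt lengths, using a z-score. It is a generic example, not the implementation of any product named above, and the function name `is_length_anomaly` and the default threshold of 3.0 are assumptions.

```python
from statistics import mean, pstdev
from typing import Sequence


def is_length_anomaly(
    baseline: Sequence[int], new_length: int, threshold: float = 3.0
) -> bool:
    """Flag new_length if it lies more than `threshold` standard
    deviations from the mean of the baseline lengths."""
    mu = mean(baseline)
    sigma = pstdev(baseline)
    if sigma == 0:
        # All baseline values are identical, so any deviation is unusual.
        return new_length != mu
    return abs(new_length - mu) / sigma > threshold
```

Prompt length is only one possible feature. The same comparison could be applied to request rate, token counts, or any other numeric signal collected during monitoring.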

Conclusion

As the generative AI era continues to unfold, the need for robust AI security has never been more pressing. By leveraging specialized tools and frameworks, organizations, especially enterprises, can safeguard their AI applications, protect sensitive data, and build resilience against the evolving threat landscape. Prompt Inspection and Protection, facilitated by the tools and frameworks described above, serves as an indispensable safeguard in this endeavor, enabling you to harness the benefits of AI innovation while managing its inherent risks. By embracing a proactive and adaptive approach to AI security, you can navigate the complexities of the generative AI era with confidence and contribute to a safer, more resilient technological landscape.

Further Reading

- Firewalls for AI: The Essential Guide

- Framework for Securing Generative AI