Gatling Performance Testing Pros and Cons

Here at Abstracta, Gatling is part of our performance testing toolbox. Over the past few years, we have gained a lot of experience with this tool. In this blog post, we will share that experience with you by covering the main pros and cons we found when using it.

You may be thinking, “Why Gatling?” Well, let me summarize it for you. After using OpenSTA for a long time, my team switched to JMeter once it had gained our trust through several improvements, such as being able to simulate hundreds of users. It was not until early 2016, when we started a project for a client that used Gatling, that we got fully involved with it, and since then we have made it part of our performance engineering toolset.

As a performance tester, I have had the chance to try different load testing tools, but as a developer I also like to stay as close to the code as I can, and Gatling offers a powerful combination of both. After working with this tool for some time, there are some key points about it that I’d like to address, because I think they may help you when choosing a performance testing tool.

If you have never heard of Gatling, it is an open source load testing tool built with Scala. It allows you to simulate thousands of requests per second against your web application, using just a few load generators, and get a complete report of the execution. At the end of your test, Gatling automatically generates a very useful, dynamic and colorful report, where you can see the percentiles of your response time distribution and other interesting metrics that we are going to show you in this post.

Gatling provides a GUI (the Recorder) for recording traffic, which converts the recording into a Scala script that you can modify as you please. Gatling does not provide a GUI for running scripts, but once a script is recorded, you can easily run it from the console.

You can find all the documentation about the tool on their official page, so let's go straight to the pros and cons that we have encountered while working with it.

| Pros | Cons |
|---|---|
| Optimal connection handling | With the free version, load distribution (running a higher load from a farm of load generator machines) is not as intuitive as with other open source tools |
| Intuitive for developers | Poor level of information during execution |
| CI/CD friendly | Few protocols supported: HTTP, WebSockets, Server-sent events, JMS |
| Automatic reporting | No host monitoring integrations (third-party applications can be used) |
| Can be integrated with Taurus (allows live monitoring) | |

So, let’s go through these points:

Pros

Optimal Connection Handling

A remarkable difference between Gatling and JMeter is thread handling. While JMeter uses one thread per user (synchronous processing), Gatling uses a more advanced engine based on Akka. Akka is a toolkit for building concurrent and distributed applications based on the actor model, which allows fully asynchronous computing: actors are small entities that communicate with other actors by exchanging messages. This lets Gatling simulate multiple virtual users with a single thread, and it also makes use of Async HTTP Client, a non-blocking HTTP library. We ran a benchmark of our own with both JMeter and Gatling and saw that Gatling requires less memory than JMeter to generate an equivalent load.
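To make the idea concrete, here is a plain-Scala sketch (not Gatling or Akka code) of many virtual users sharing one thread. It assumes Scala 2.13+ and simulates each request with a timer instead of real HTTP; fakeRequest, run and SingleThreadUsers are hypothetical names. Because no virtual user blocks while waiting for its response, a single scheduler thread can drive a thousand of them.

```scala
import java.util.concurrent.{ConcurrentHashMap, Executors, ScheduledExecutorService, TimeUnit}
import scala.concurrent.{Await, ExecutionContext, Future, Promise}
import scala.concurrent.duration._
import scala.jdk.CollectionConverters._

object SingleThreadUsers {

  // Hypothetical non-blocking "request": the response arrives after latencyMs,
  // but no thread sleeps while waiting; the scheduler completes a Promise instead.
  def fakeRequest(scheduler: ScheduledExecutorService, latencyMs: Long): Future[Long] = {
    val response = Promise[Long]()
    scheduler.schedule(new Runnable { def run(): Unit = response.success(latencyMs) },
      latencyMs, TimeUnit.MILLISECONDS)
    response.future
  }

  // Runs `users` virtual users, each sending `steps` requests one after another.
  // Returns (completed requests, names of the threads that processed responses).
  def run(users: Int, steps: Int, latencyMs: Long): (Int, Set[String]) = {
    val scheduler = Executors.newSingleThreadScheduledExecutor()
    // Every callback runs on the same single thread that fires the timers.
    implicit val ec: ExecutionContext = ExecutionContext.fromExecutor(scheduler)
    val threadNames = ConcurrentHashMap.newKeySet[String]()

    // One virtual user: chain `steps` requests, each starting after the previous one.
    def virtualUser(): Future[Int] =
      (1 to steps).foldLeft(Future.successful(0)) { (previous, _) =>
        previous.flatMap { done =>
          fakeRequest(scheduler, latencyMs).map { _ =>
            threadNames.add(Thread.currentThread().getName)
            done + 1
          }
        }
      }

    try {
      val allUsers = Future.sequence(List.fill(users)(virtualUser()))
      val completed = Await.result(allUsers, 30.seconds).sum
      (completed, threadNames.asScala.toSet)
    } finally scheduler.shutdown()
  }
}
```

For example, SingleThreadUsers.run(1000, 3, 10L) completes 3,000 requests, and the set of thread names it returns has a single entry. A thread-per-user design would need 1,000 threads for the same load, which helps explain why that model tends to cost more memory.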

Intuitive for Developers

Gatling is Scala-based, so it only requires a little Java programming knowledge and a fair idea of functional programming.

Because Gatling scenarios are written as code, they are easy to maintain and to automate in your continuous delivery pipeline. That is also a great advantage over JMeter, because maintaining different versions of code in a repository is much easier than maintaining different .jmx files in GitHub.

Gatling also provides a straightforward way to define assertions, such as the following:

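```scala
// Inside a Simulation class (with import io.gatling.core.Predef._);
// `scn` stands for a scenario with its injection profile,
// e.g. myScenario.inject(atOnceUsers(10)).
setUp(scn).assertions(
  global.responseTime.max.lt(50),          // slowest response under 50 ms
  global.successfulRequests.percent.gt(95) // more than 95% of requests succeed
)
```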

Where:

- global sets the scope (all requests, in this case).

- The metric is what you want to measure, for example:

  - responseTime

  - successfulRequests

- The statistic is how that metric is aggregated, for example:

  - max (applicable to response time)

  - percent (applicable to the number of requests)

- Finally, the condition compares the statistic with a threshold, for example:

  - lt (less than the threshold)

  - gt (greater than the threshold)
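As a rough mental model, the two assertions above boil down to the checks below. This is a simplified plain-Scala sketch, not Gatling's implementation: RequestResult and the helper functions are hypothetical, and it assumes the maximum is taken over every recorded request.

```scala
object AssertionSketch {

  // Hypothetical per-request record: response time in ms and whether it succeeded.
  final case class RequestResult(responseTimeMs: Long, ok: Boolean)

  // Rough analogue of global.responseTime.max.lt(limitMs),
  // assuming the max is taken over every recorded request.
  def maxResponseTimeBelow(results: Seq[RequestResult], limitMs: Long): Boolean =
    results.nonEmpty && results.map(_.responseTimeMs).max < limitMs

  // Rough analogue of global.successfulRequests.percent.gt(thresholdPct).
  def successPercentAbove(results: Seq[RequestResult], thresholdPct: Double): Boolean =
    results.nonEmpty && 100.0 * results.count(_.ok) / results.size > thresholdPct

  // Evaluates both assertions from the example, keyed by their DSL spelling.
  def evaluate(results: Seq[RequestResult]): Map[String, Boolean] = Map(
    "global.responseTime.max.lt(50)" -> maxResponseTimeBelow(results, 50),
    "global.successfulRequests.percent.gt(95)" -> successPercentAbove(results, 95.0)
  )
}
```

In a real run, Gatling evaluates assertions from the statistics it collects and reports which ones failed; the sketch only shows the comparison each one performs.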

CI/CD Friendly

Related to the previous point, being able to store all of your tests in a repository simplifies maintaining the tests that are integrated into the pipeline. This is arguably one of Gatling's strongest points regarding CI/CD practices.

Gatling allows us to monitor performance regressions after each commit directly in Jenkins. We can also configure email notifications, ensuring we don’t miss anything throughout the entire development cycle.

You can access all your Gatling reports from Jenkins to investigate every single performance issue. The commercial version (Gatling FrontLine) also includes an enhanced Jenkins plugin, to aid further in your automation process.
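If you want to picture what monitoring regressions after each commit boils down to, the sketch below compares the current run against a baseline taken from a previous build. It is a hypothetical gate written in plain Scala, not part of Gatling or its Jenkins plugin; the tolerance and the exit-code convention are assumptions.

```scala
object RegressionGate {

  // Hypothetical gate: a run regresses when the current value exceeds the
  // baseline (e.g. the previous build's 95th percentile) by more than tolerancePct.
  def isRegression(baselineMs: Double, currentMs: Double, tolerancePct: Double): Boolean =
    currentMs > baselineMs * (1 + tolerancePct / 100)

  // Assumed convention for the CI job: 0 keeps the build green, 1 fails it.
  def exitCode(baselineMs: Double, currentMs: Double, tolerancePct: Double): Int =
    if (isRegression(baselineMs, currentMs, tolerancePct)) 1 else 0
}
```

With a 200 ms baseline and a 10% tolerance, a 215 ms run passes, while a 230 ms run would fail the build.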

Automatic Reporting

We can obtain graphs consolidated across all requests or broken down per request, which makes analyzing the results easy and clear. Gatling shows the status of the execution and a summary of the response times:

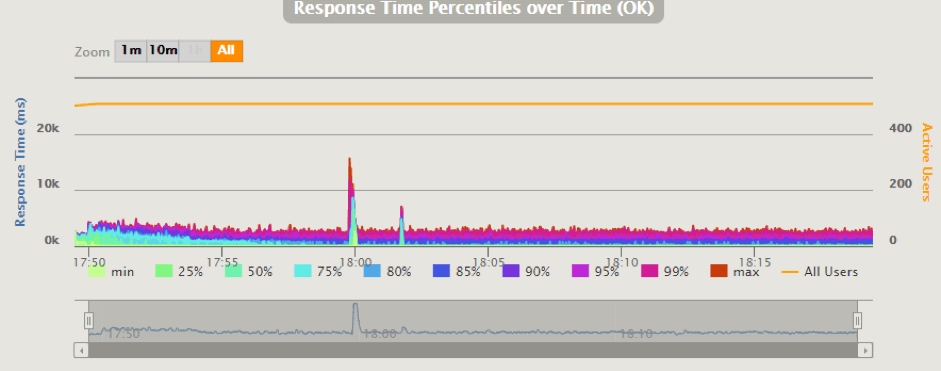

It also shows the number of active users during the test, the response time distribution, and a response time graph with the different percentiles, where you can zoom in on any part of the graph, as you can see in the following image:

Gatling also lets us visualize the load generated (requests per second and responses per second) throughout the test.
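These percentile and throughput charts are built from raw per-request data. The following plain-Scala sketch shows the idea under stated assumptions: it uses the nearest-rank percentile definition (Gatling's own computation may differ) and buckets request start times into whole seconds; ReportSketch and its functions are hypothetical names.

```scala
object ReportSketch {

  // Nearest-rank percentile over raw response times (ms).
  // Gatling's own percentile computation may differ; this is only illustrative.
  def percentile(responseTimesMs: Seq[Long], p: Double): Long = {
    require(responseTimesMs.nonEmpty && p > 0 && p <= 100, "need data and 0 < p <= 100")
    val sorted = responseTimesMs.sorted.toIndexedSeq
    val rank = math.ceil(p * sorted.size / 100.0).toInt // 1-based rank
    sorted(rank - 1)
  }

  // Requests per second: buckets request start times (ms) into whole seconds
  // since the first request and counts the requests in each bucket.
  def requestsPerSecond(startTimesMs: Seq[Long]): Map[Long, Int] = {
    val t0 = startTimesMs.min
    startTimesMs.groupBy(t => (t - t0) / 1000).view.mapValues(_.size).toMap
  }
}
```

For response times of 1 to 100 ms, the 95th percentile under this definition is 95 ms; requests starting at 0, 200, 999, 1000 and 2500 ms give 3, 1 and 1 requests in seconds 0, 1 and 2.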

Taurus Integration

Gatling can be easily integrated with Taurus, by creating a very simple Taurus script like the one below.

```yaml
---
execution:
- executor: gatling
  scenario: sample

scenarios:
  sample:
    script: .\Scaling_22_TPS.scala
    simulation: Scaling_22_TPS

reporting:
- module: console
```

Taurus enables us to do many things, such as live monitoring with the Taurus console and dumping statistics to XML or CSV files that the Jenkins Performance Plugin can use. One of the most interesting features Taurus provides is its integration with BlazeMeter, which gives us live monitoring through the BlazeMeter reporting service when we add the -report flag to the execution (e.g. bzt scriptName -report), and stores the resulting report for 7 days. If you have a BlazeMeter account, you can keep the reports for longer. For more information regarding BlazeMeter reporting with Taurus, you can take a look at this link.

If you want, you can go deeper into other Gatling benefits in this blog.

Cons

Distributing Gatling Tests

If you don’t want to pay for Gatling FrontLine but need to take your load test a little further, distributing the load is not as easy as it is with JMeter. Still, not all is lost: Gatling does provide a way to distribute the load with the free version of the tool.

The way to distribute load in Gatling can be found here, but the main idea is a bash script that runs the Gatling scripts on each slave machine, collects the logs generated by the simulations on the master machine, and builds the consolidated report there.

The script establishes an SSH connection with each remote machine, so you first need to generate a key pair on the master machine with the following command: “ssh-keygen -t rsa”. The keys are stored in /home/{user}/.ssh. Once this is done, copy the key to each remote machine with this command: “ssh-copy-id -i ~/.ssh/{key}”.
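Because the master builds the report from the logs of every slave, statistics such as percentiles are computed over the pooled data. The sketch below, in plain Scala with hypothetical names (it does not read real Gatling logs), illustrates why that matters: averaging each machine's own percentile is not the same as computing the percentile over all samples.

```scala
object ConsolidateSketch {

  // Nearest-rank percentile over raw response times (ms).
  def percentile(samplesMs: Seq[Long], p: Double): Long = {
    require(samplesMs.nonEmpty && p > 0 && p <= 100)
    val sorted = samplesMs.sorted.toIndexedSeq
    sorted(math.ceil(p * sorted.size / 100.0).toInt - 1)
  }

  // Master-side step (hypothetical): pool the raw samples sent back by every
  // load generator, then compute the statistic once over the pooled data.
  def consolidatedPercentile(perGenerator: Seq[Seq[Long]], p: Double): Long =
    percentile(perGenerator.flatten, p)

  // Shortcut to avoid: averaging the percentile each generator computed on its own.
  def averagedPercentile(perGenerator: Seq[Seq[Long]], p: Double): Double =
    perGenerator.map(samples => percentile(samples, p).toDouble).sum / perGenerator.size
}
```

With one generator recording 90 requests at 100 ms plus 10 at 1000 ms, and another recording 10 requests at 100 ms, the pooled 95th percentile is 1000 ms, while averaging the two per-machine values gives 550 ms.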

Poor Level of Information During Execution

When we began to use Gatling, we felt like we were blind when running simulations, because we couldn’t find any information about the response times until the test had finished, when Gatling delivers the report. That was what drove us to research the Taurus solution previously mentioned.

By default, the only information the Gatling engine shows during execution is the number of active users, the elapsed time (in seconds), the status of the requests, the total number of requests and, if the scenario is configured to scale by virtual users, the completion percentage of the test.

Protocols Support

Gatling is much more efficient at handling threads and connections, for the reasons we explained at the beginning of this post. On the other hand, Gatling supports only HTTP, WebSockets, Server-sent events and JMS, while JMeter supports many more protocols, because it is a much more mature tool with a bigger community behind it. For that very reason, JMeter is a very reliable option when choosing a load testing tool.

No Host Monitoring Integrations

When running a load test, it is very important to know that our own load generator is not causing a bottleneck during the test, so it is very useful to have OS metrics that give us a full picture during the execution. Gatling doesn’t provide live monitoring of the host, which would be “nice to have”, but it’s not an issue because we can use NMON, Perfmon or any other third-party tool we like for that purpose.
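If you also want a quick sanity check from inside the JVM that generates the load, the standard java.lang.management API exposes a few basic figures. This is a minimal sketch, not a Gatling feature and no replacement for NMON or Perfmon: GeneratorHealth, Sample and the 0.8-load-per-core threshold are hypothetical, and getSystemLoadAverage returns a negative value on platforms where it is not available (for example, Windows).

```scala
import java.lang.management.ManagementFactory

object GeneratorHealth {

  // Hypothetical snapshot of the load generator's own health.
  final case class Sample(loadAverage: Double, cpus: Int, heapUsedMb: Long, heapMaxMb: Long)

  def sample(): Sample = {
    val os = ManagementFactory.getOperatingSystemMXBean()
    val rt = Runtime.getRuntime()
    val mb = 1024L * 1024L
    Sample(
      loadAverage = os.getSystemLoadAverage(), // 1-minute average; negative if unavailable
      cpus = rt.availableProcessors(),
      heapUsedMb = (rt.totalMemory() - rt.freeMemory()) / mb,
      heapMaxMb = rt.maxMemory() / mb
    )
  }

  // Hypothetical rule of thumb: flag the generator when load per core exceeds a threshold.
  // Returns false when the platform does not report a load average.
  def looksSaturated(s: Sample, maxLoadPerCpu: Double = 0.8): Boolean =
    s.loadAverage >= 0 && s.loadAverage / s.cpus > maxLoadPerCpu
}
```

Sampling this periodically during a run (for instance, from a small helper started alongside the test) can warn you that the generator itself, rather than the system under test, is the bottleneck.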

Wrapping Up

To sum up, Gatling is a very efficient tool if the protocols you need to test fall within the reduced set it supports, where it excels. Additionally, if you follow CI/CD practices, Gatling is clearly a great choice, because its code-based scripts are easy to maintain, version and refactor. Although JMeter can be less efficient than Gatling at handling connections and threads, in some cases it’s the right option, mainly when Gatling does not support the protocols your application uses.

What are your experiences with Gatling? If you haven’t used it yet, I highly encourage you to give it a try!

You can also run your Gatling load test in BlazeMeter and enjoy cloud scaling and advanced reporting.