Best Runtime for AWS Lambda Functions

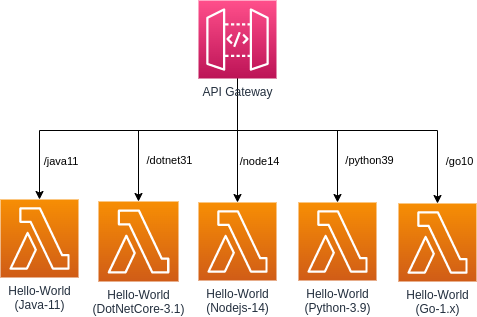

AWS Lambda is a compute service that lets you run code without managing any infrastructure. It natively supports the Java, Go, Node.js, .NET, Python, and Ruby runtimes. In this article, we will compare the performance of the same hello world Lambda function written for the Java, Go, Node.js, .NET, and Python runtimes. I hope it helps you decide which runtime to use in your own scenarios.

Structure of the Template

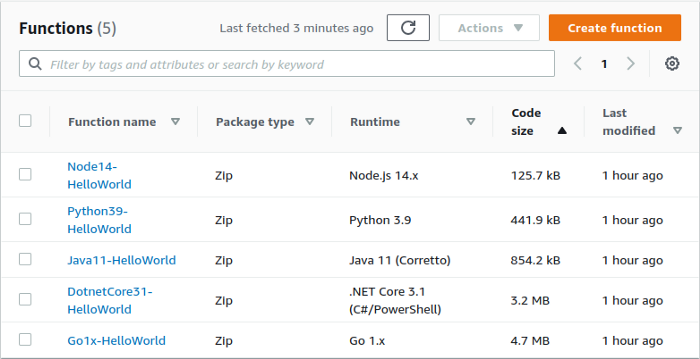

We use simple hello world functions, deployed with AWS SAM templates, to test Lambda invocation times. For the first comparison, we use the latest runtime versions that AWS SAM provides. You can find the complete deployment package in this GitHub repository.
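For reference, a hello world handler of the kind compared here might look like the following in Python. This is a minimal sketch, not the code from the repository: the handler name `lambda_handler` and the API Gateway style response shape are assumptions.

```python
import json


def lambda_handler(event, context):
    """Return a fixed greeting; the function does no other work."""
    # Assumed response shape: an API Gateway proxy-style dictionary.
    return {
        "statusCode": 200,
        "body": json.dumps({"message": "Hello World"}),
    }
```

Calling `lambda_handler({}, None)` locally returns the same dictionary that Lambda would serialize as the function's response, which makes it easy to check the handler before deploying it.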

Comparing Lambdas by Runtime

To compare these functions dynamically, the newly introduced custom dashboard widgets in Thundra APM are a good fit. The functions in the GitHub repository are already integrated with Thundra, so you can clone the repo and replace the Thundra API key in the SAM templates with your own. For more information, check the APM docs here or sign up for Thundra here.

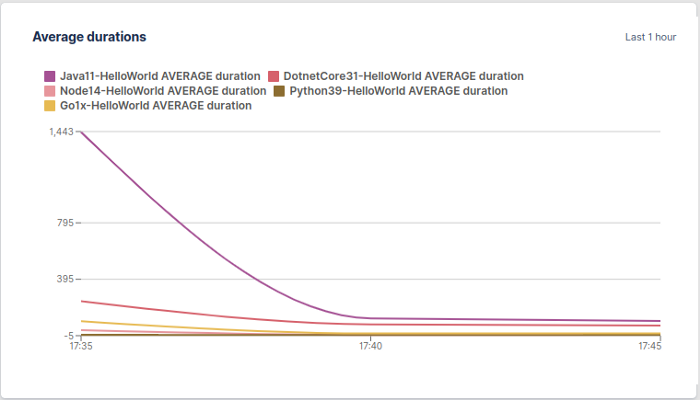

Let’s check the differences between runtimes by building widgets in a custom dashboard in Thundra APM. In this scenario, we only deployed the stack that contains the latest versions of the runtimes, so filtering the functions by runtime and average duration is enough to create the “Max durations” widget. The average invocation times of all the functions are shown below:

The cold start durations of the compiled languages are significantly greater than those of the others, but their max durations get closer to the others after warming up. Java has the highest cold start duration, at approximately 5,000 milliseconds. In contrast, the interpreted languages show almost no performance difference between a cold start and later invocations.

Also, we can clearly see that .NET has much better cold start performance than Java, even though both are compiled languages. Let’s look from a different perspective by excluding Java and taking a closer look at the other runtimes.

Go has a cold start duration of approximately 400 ms, but after the cold start its invocation time drops and invocations complete almost instantly. We see dazzling performance results in the Python and Node.js runtimes. They are barely affected by cold starts, and their invocations always complete almost instantly.
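The cold versus warm comparison described above can also be reproduced from raw invocation records. The sketch below is only an illustration: the record fields (`runtime`, `duration_ms`, `cold_start`) and the sample values are hypothetical placeholders, not Thundra's API and not measurements from this test.

```python
from collections import defaultdict
from statistics import mean


def summarize(records):
    """Group invocation durations by runtime and by cold/warm state.

    Each record is a dict with the hypothetical keys
    'runtime', 'duration_ms', and 'cold_start'.
    """
    buckets = defaultdict(lambda: {"cold": [], "warm": []})
    for rec in records:
        key = "cold" if rec["cold_start"] else "warm"
        buckets[rec["runtime"]][key].append(rec["duration_ms"])

    summary = {}
    for runtime, groups in buckets.items():
        all_durations = groups["cold"] + groups["warm"]
        summary[runtime] = {
            # None means no sample of that kind was recorded.
            "cold_avg_ms": mean(groups["cold"]) if groups["cold"] else None,
            "warm_avg_ms": mean(groups["warm"]) if groups["warm"] else None,
            "max_ms": max(all_durations),
        }
    return summary


# Placeholder records, not measurements from this article.
sample = [
    {"runtime": "runtime-a", "duration_ms": 900.0, "cold_start": True},
    {"runtime": "runtime-a", "duration_ms": 10.0, "cold_start": False},
    {"runtime": "runtime-a", "duration_ms": 20.0, "cold_start": False},
    {"runtime": "runtime-b", "duration_ms": 5.0, "cold_start": False},
]
print(summarize(sample))
```

Keeping cold and warm averages separate matters because a single cold start can dominate the max duration of a function whose warm invocations are fast.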

Conclusion

One of the biggest differences between compiled and interpreted languages is that interpreted languages need less time to analyze the source code, but their overall execution is much slower. This explains why languages like Node.js and Python perform so well on cold starts.

In my experimentation, I’ve observed that Java and .NET performed worse than the other runtimes when we compare invocation durations. I think this is because these Lambdas do not do enough work to offset the difference between interpreter and compiler. If we ran a heavier and more complex process instead of returning the “Hello World” message, the compiled languages would likely perform better after warming up. Here are some other graphs created with the Thundra APM Dashboard queries:

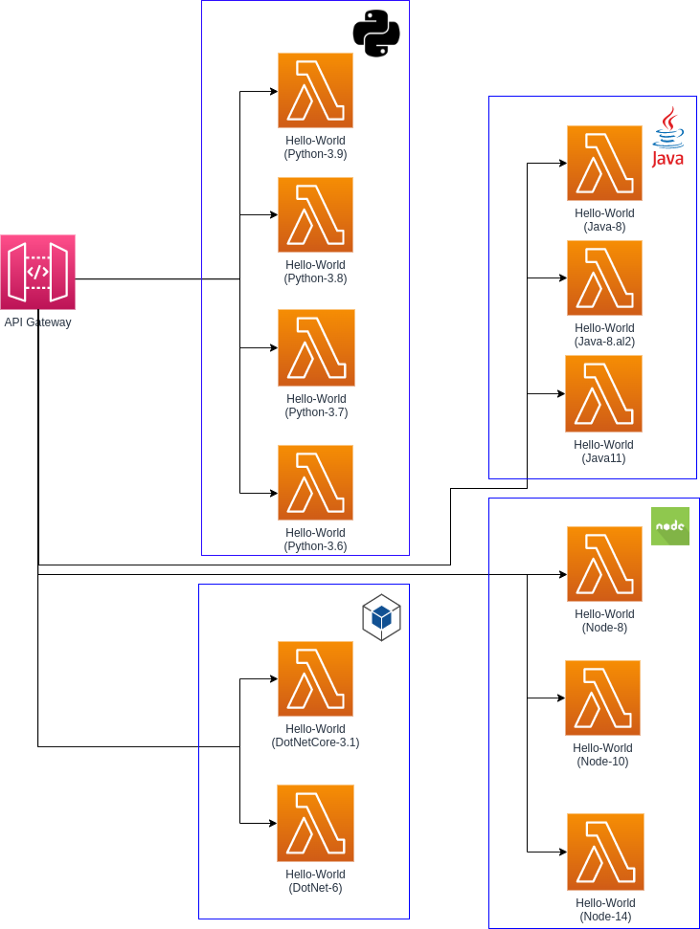

Bonus Section: Comparison Between Versions

I also used different versions of the runtimes in my experimentation. I compared three Java versions, three Node.js versions, four Python versions, and two .NET versions.

- The Java versions: Java8, Java8.al2, Java11

- The node versions: Node10, Node12, Node14

- The Python versions: Python3.6, Python3.7, Python3.8, Python3.9

- The DotNet versions: .netcore3.1, .net6

I did not expect to see much difference in the Lambda functions’ behavior when comparing versions of the same runtime, but it does not hurt to check them anyway.

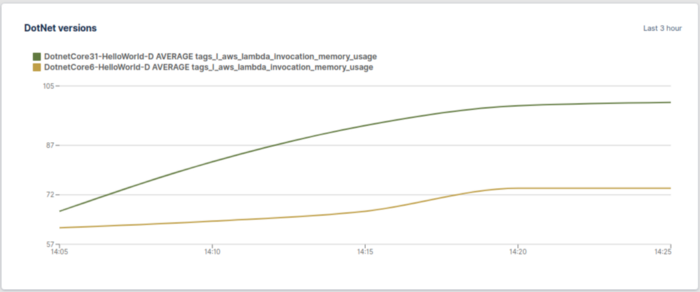

DotNet

.NET 6 was added to this comparison later than the others, because when I was writing this article AWS Lambda supported .NET Core 3.1 but not yet .NET 6. After AWS announced native support, I decided to add this version of the runtime to the article. This runtime was also specifically requested by some readers, because Microsoft makes strong claims about its performance.

According to Microsoft’s announcements, .NET 6 is 40% faster than .NET 5. Microsoft is confident in this runtime and claims that .NET 6 is the fastest full-stack web framework, which lowers compute costs if you're running in the cloud. In this post, we compared .NET Core 3.1 and .NET 6, and we can clearly see that both the cold start and the warm invocation times of .NET 6 are much better than those of .NET Core 3.1. The results are especially striking for cold starts: the cold start duration of .NET Core 3.1 is approximately 8 seconds, while the cold start duration of .NET 6 is 800 ms.

You might assume that the improvement comes from .NET 6 using less memory than .NET Core 3.1, but there is actually no difference in memory usage between the two runtimes. These example Lambda functions were created with AWS SAM, and SAM assigns different memory sizes to the two runtimes: 256 MB for the .NET 6 function and 128 MB for the .NET Core 3.1 function. So the only difference is that the memory size SAM assigns to .NET 6 is greater than the one it assigns to .NET Core 3.1.

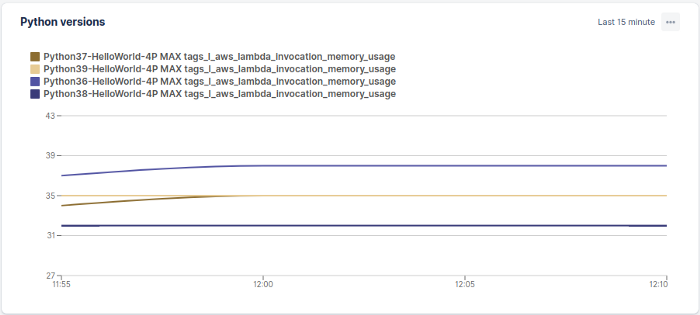

Python

There is no difference in invocation duration between the Python versions, but there are differences in memory usage. Python 3.8 has better memory usage results than the other three Python versions:

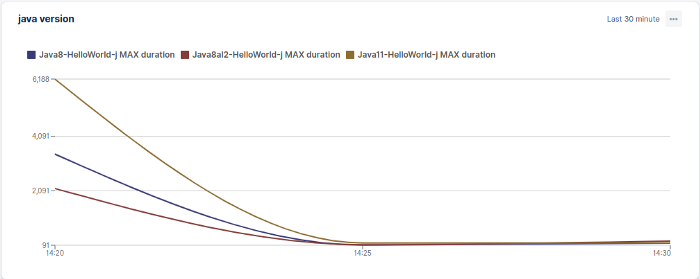

Java

You can see the difference in invocation duration between the Java versions below. Java, the slowest runtime in this comparison, performs differently across its own versions. The Java 8 runtime on Amazon Linux 2 does a pretty good job, even though it could not catch up with the others.

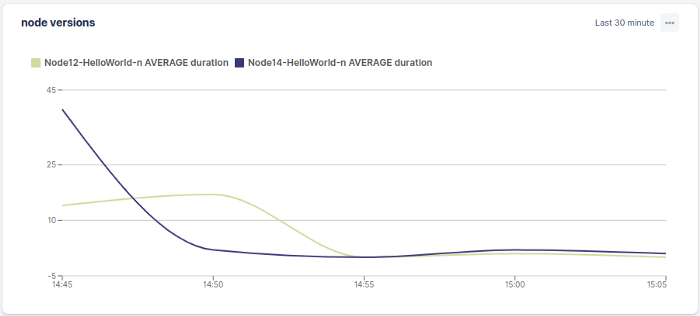

Node

Invocation durations differ only on a cold start. As with the other interpreted languages, there is not much difference between the Node versions. In my tests, Node 12 had a much better cold start duration, but after the cold start the invocation durations showed no difference.