How To Calculate CPU vs. RAM Costs For More Accurate K8s Cost Monitoring

Cloud providers like AWS and Azure do one thing that makes monitoring, reporting, and forecasting compute costs so hard: they usually charge a single instance price that blends CPU and RAM costs together.

Why does that matter?

If you can’t see this cost division, you stand no chance of understanding exactly how much of your hard-earned money you’re pouring into each of these cloud resources.

That’s especially annoying if you run memory-intensive applications.

We developed a formula for estimating how much you spend per vCPU and per GiB of RAM, and now we’re sharing it with you.

Don’t want to follow our process? Jump directly to the formula.

Why Divide Compute Costs Into CPU and Memory?

Of the major cloud providers, only Google Cloud Platform publishes compute prices split into CPU and memory.

If your application uses a lot of RAM, not having this type of cost breakdown will prevent you from calculating the full cost accurately.

You need to figure out how to expose the real costs of memory and CPU so that cost monitoring gives you accurate information and helps you save money.

This is definitely worth the trouble since you get:

- Better monitoring of your resources

- Improved understanding of what you’re paying for

- Cost breakdown per workload, namespace, and allocation group

- Easy cost allocation – you can instantly see which workload, namespace, or allocation group is eating most of your money with details on CPU and RAM

- Insights into CPU and RAM utilization that enable you to plan your cloud budget better

To provide more accurate cost monitoring to our users, we came up with a method to calculate CPU vs. memory costs for AWS and Azure machines. And now, we’re sharing this method with you to help boost your cost management efforts.

Estimating Costs Based on Price Differences Between Instance Families in AWS

Before implementing this feature in the CAST AI platform, we considered two approaches.

We started by comparing two AWS instance families, compute-optimized (C5) and memory-optimized (R5), to see how much the price rises when you add memory and when you add vCPUs.

Prices of Compute-Optimized and Memory-Optimized Instances

| Instance | Hourly price | vCPUs | Memory (GiB) |
| --- | --- | --- | --- |
| c5.12xlarge | $2.04000 | 48 | 96 |
| r5.12xlarge | $3.02400 | 48 | 384 |
| c5.2xlarge | $0.34000 | 8 | 16 |
| r5.large | $0.12600 | 2 | 16 |

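Let’s estimate the price distribution between memory and CPU. The c5.12xlarge and r5.12xlarge have the same number of vCPUs, so their price difference can be attributed to the extra memory. The c5.2xlarge and r5.large have the same amount of memory, so their price difference can be attributed to the extra vCPUs: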

| Metric | Value | Calculation |
| --- | --- | --- |
| Hourly delta per extra GiB | $0.003416667 | (r5.12xlarge price - c5.12xlarge price) / (r5.12xlarge memory - c5.12xlarge memory) |
| Hourly delta per extra CPU | $0.035666667 | (c5.2xlarge price - r5.large price) / (c5.2xlarge vCPUs - r5.large vCPUs) |
| Total | $0.039083333 | Hourly delta per extra GiB + Hourly delta per extra CPU |
| % GiB | 8.742% | Hourly delta per extra GiB / Total |
| % CPU | 91.258% | Hourly delta per extra CPU / Total |
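The arithmetic in this table can be reproduced with a short Python sketch. It uses the prices and specs listed above (assuming the memory column is in GiB, as AWS lists it); the `INSTANCES` dictionary and the `delta_per_unit` helper are hypothetical names used only for illustration.

```python
# Hourly on-demand prices and specs from the instance table (memory in GiB).
INSTANCES = {
    "c5.12xlarge": {"price": 2.04000, "vcpus": 48, "memory_gib": 96},
    "r5.12xlarge": {"price": 3.02400, "vcpus": 48, "memory_gib": 384},
    "c5.2xlarge": {"price": 0.34000, "vcpus": 8, "memory_gib": 16},
    "r5.large": {"price": 0.12600, "vcpus": 2, "memory_gib": 16},
}


def delta_per_unit(bigger: str, smaller: str, resource: str) -> float:
    """Price difference divided by the difference in one resource.

    Only meaningful when the two instances match on the other resource,
    so the whole price gap can be attributed to `resource`.
    """
    a, b = INSTANCES[bigger], INSTANCES[smaller]
    return (a["price"] - b["price"]) / (a[resource] - b[resource])


# Same vCPU count (48): the gap is attributed to the extra 288 GiB.
per_gib = delta_per_unit("r5.12xlarge", "c5.12xlarge", "memory_gib")
# Same memory (16 GiB): the gap is attributed to the extra 6 vCPUs.
per_cpu = delta_per_unit("c5.2xlarge", "r5.large", "vcpus")

total = per_gib + per_cpu
print(f"Hourly delta per extra GiB: ${per_gib:.9f}")
print(f"Hourly delta per extra CPU: ${per_cpu:.9f}")
print(f"% GiB: {per_gib / total:.3%}")
print(f"% CPU: {per_cpu / total:.3%}")
```

Swapping in a different pair of instances changes both deltas, which is a quick way to see how sensitive this method is to the families you pick.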

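While the numbers look interesting, this approach has a major downside: pricing ratios vary widely across instance families, so the split you get depends on which instances you compare. Here, each delta compares a C5 instance with an R5 instance, so any general price difference between the two families ends up attributed entirely to memory or to CPU.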

So, we tried another approach: extrapolating from the CPU and memory prices that Google Cloud Platform publishes. The GCP ratio is as follows:

- One vCPU accounts for 88% of the combined price of one vCPU and one GiB of RAM

- One GiB of RAM accounts for the remaining 12%

Now that you know the CPU and memory ratio, you’re ready to use the formula and save a buck on compute!

Formula for Calculating CPU vs. Memory Cost in Cloud Instances

We developed the following formula for calculating the costs of CPU and memory:

instancePrice / ((vCPU Count * CPURatio) + (RAM GiB * RAMRatio)) * <resource_ratio>

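Here, CPURatio is 0.88 (88%) and RAMRatio is 0.12 (12%). Set <resource_ratio> to CPURatio to get the hourly price of one vCPU, or to RAMRatio to get the hourly price of one GiB of RAM.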
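Here is a minimal Python sketch of the formula, assuming the 0.88/0.12 ratios and an instance without GPUs. `split_instance_price` is a hypothetical helper, not part of any CAST AI API. It returns the per-vCPU and per-GiB hourly prices, which add back up to the full instance price when multiplied by the instance's vCPU count and memory.

```python
# Per-unit price weights extrapolated from GCP's public pricing.
CPU_RATIO = 0.88
RAM_RATIO = 0.12


def split_instance_price(instance_price: float, vcpus: int, ram_gib: float) -> tuple[float, float]:
    """Return (hourly price per vCPU, hourly price per GiB of RAM).

    Hypothetical helper; assumes the instance has no GPUs.
    """
    # Denominator of the formula: vCPUs and GiB weighted by their ratios.
    weighted_units = vcpus * CPU_RATIO + ram_gib * RAM_RATIO
    price_per_unit = instance_price / weighted_units
    # Multiply by <resource_ratio> to get each resource's unit price.
    return price_per_unit * CPU_RATIO, price_per_unit * RAM_RATIO


# Example: r5.large ($0.126/h, 2 vCPUs, 16 GiB) from the AWS table.
per_vcpu, per_gib = split_instance_price(0.126, 2, 16)
cpu_total = per_vcpu * 2
ram_total = per_gib * 16
print(f"per vCPU: ${per_vcpu:.6f}/h, per GiB: ${per_gib:.6f}/h")
print(f"CPU share: {cpu_total / 0.126:.1%}, RAM share: {ram_total / 0.126:.1%}")
```

For r5.large, the sketch gives about $0.0301 per vCPU-hour and $0.0041 per GiB-hour. Because the ratios weight individual units rather than whole resources, CPU's share of a given instance's price depends on its shape: for r5.large it comes to roughly 48%.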

Note: Some cloud instances also include GPUs, and breaking down their price requires a GPU ratio as well. We’re currently investigating this and will share the results soon!

Try Automation Instead of Calculating Costs Manually

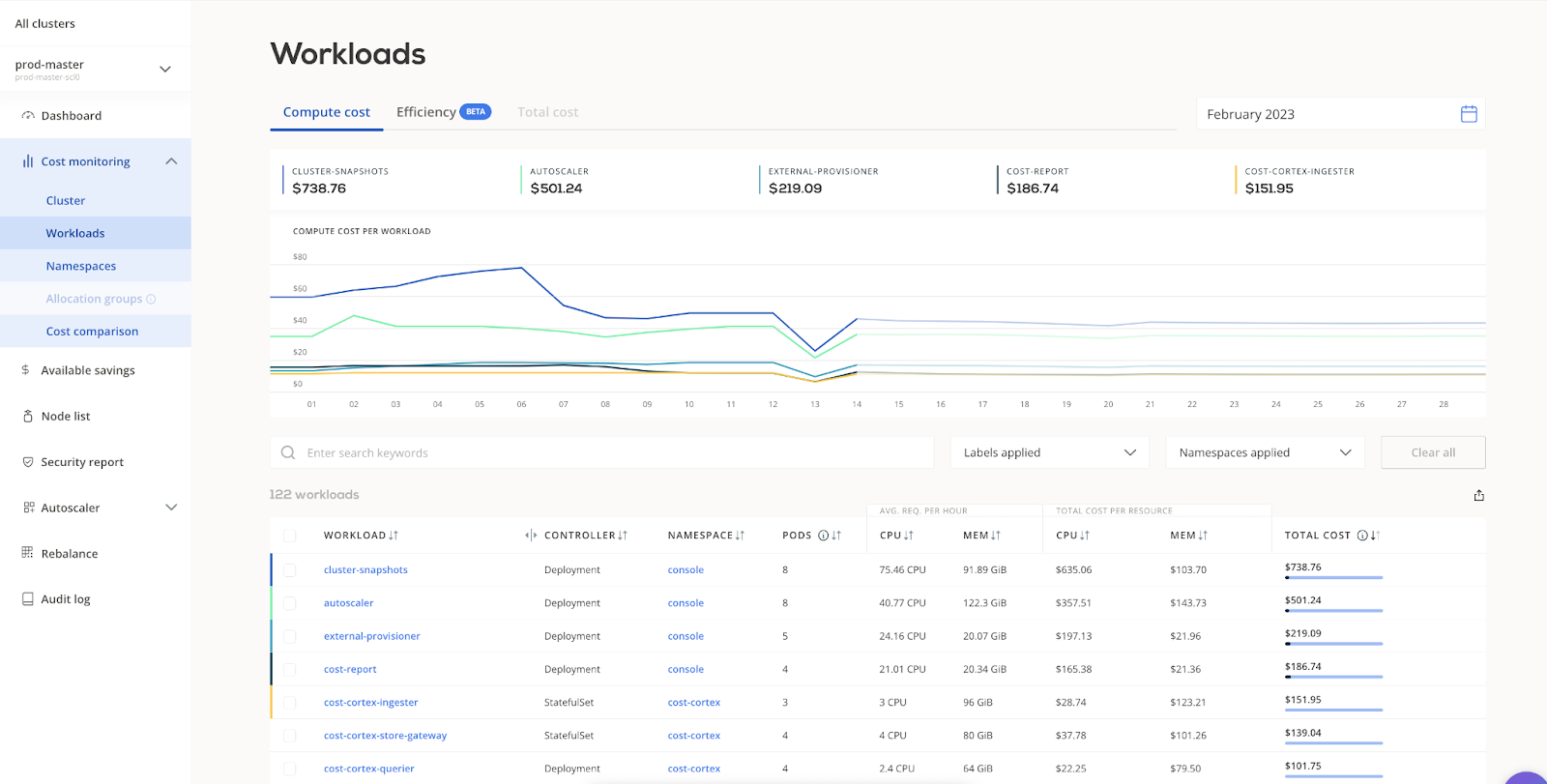

If you use Kubernetes, you can try out the free cost monitoring module to see how this aspect of cloud pricing plays out in your deployment. All of the calculations we talked about above are done automatically by the solution, and you get the results in seconds.

You can see the average requests and costs per resource in a table like this:

Connect your cluster and evaluate your cloud costs in real time, and check how much you’re really spending on CPUs and memory.