IBM MQ in ECS on AWS Fargate

AWS Fargate is a serverless compute resource specifically designed for running containers. With AWS Fargate the deployment and management of the container(s) are controlled by either Elastic Container Service (ECS) or Elastic Kubernetes Service (EKS).

Initially, users are often confused by the relationship between Fargate and the container management solution (EKS or ECS), so I want to labor the difference. When deploying a container within the context of an enterprise, any solution will include two components:

- Compute resources: the machines where the containers run. This is Fargate.

- Container management: software that ensures that the containers are deployed onto the compute based on the configuration. This is EKS or ECS.

Therefore, the offering is often referred to as either Fargate ECS or Fargate EKS, which are two different solutions for running and managing a container. One of the first decisions in any Fargate project is whether the target environment will be Fargate ECS or Fargate EKS. This paper focuses exclusively on Fargate ECS; Fargate EKS is left for another discussion.

The next conceptual leap concerns the term “serverless”. There are various definitions of “serverless”, ranging from Functions as a Service to Containers as a Service with the ability to scale to zero. As many people highlight, there is always a server in serverless; the question is instead how visible the server is from a development, deployment, maintenance, and, often most importantly, cost/billing standpoint. Measured against this definition, it becomes clear that Fargate qualifies for the term “serverless”:

- Development: developers are completely independent of the Fargate compute resources. They develop their application and build a container image for deployment.

- Deployment: as we will discuss more fully later, there is no concept of a machine, simply the resources associated with a task running on Fargate.

- Maintenance: there is no operating system patching, as there is no access to the running container or the Fargate compute resource.

- Billing: charges are based on the time and amount of Fargate compute resources used, on a second-by-second basis. If the deployment is stopped, charging stops immediately, as there are no underlying EC2 instances to continue paying for.

This leads us to the strength and the weakness of Fargate ECS or, to be more precise, of ECS itself. The focus is on the first-time experience and on a small number of concepts and artifacts to understand. This can be hugely powerful; however, experienced users of Kubernetes may miss certain features, such as enhanced security, scalability, and deployment features.

Usage Scenarios for IBM MQ and Fargate ECS

When thinking about Fargate and its serverless aspects, a normally stateful application such as IBM MQ may seem like an odd fit. There are certainly considerations, but there are many use cases where IBM MQ and Fargate ECS are a natural fit:

- Applications using an IBM MQ Client, connecting to an MQ Queue Manager (server) outside of the Fargate ECS environment. This may be the most common design approach for applications deployed in Fargate ECS, due to the restrictive runtime environment, especially around storage. Although this is a common approach, the remainder of the paper will consider the other options, as these provide more unique aspects to discuss, while this scenario follows the well-documented and understood IBM MQ client pattern.

- Applications built using a Microservices architecture that requires non-persistent messaging. IBM MQ can be used for both non-persistent and persistent messaging. In scenarios where only non-persistent messaging is required and the MQ instance is not part of a larger MQ network, there is no need for a persistent storage layer.

- IBM MQ deployments with EFS storage. Fargate ECS supports EFS storage, which can be used for the IBM MQ storage layer, supporting persistent messaging and MQ network scenarios. There are some characteristics of EFS that customers should consider. EFS limits the number of file locks an application can acquire, and IBM MQ becomes increasingly sensitive to this limit as it scales. These aspects have been documented here. The reference is often considered relevant only to IBM MQ running in Multi-Instance mode; however, it also applies to a single instance of IBM MQ.

Within this paper, we will demonstrate how to set up and configure IBM MQ on Fargate ECS with EFS providing the storage layer. Prior to jumping into the steps, let's understand the key building blocks of a Fargate ECS deployment:

- Task Definition: this is an immutable, versioned description of a deployment in Fargate ECS. It describes the container, resources, network, and storage requirements. I want to emphasize that it is only the description: when you create it in AWS, no container is created or running; it is simply the document describing what you could deploy. To run a task definition there are two options.

- Run Task: this does exactly what it says on the tin and runs a task definition. Minimal configuration is required, and it represents a one-off occurrence.

- Service: references a task definition and provides a reusable definition that can be started and stopped. There are also additional configuration options, such as the ability to scale the number of running tasks based on CPU or memory utilization.

There are additional aspects to consider around security permissions and network configuration. These will be covered in the step-by-step instructions, but the three concepts above are the most important to understand.
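To make the Run Task option more concrete, here is a minimal sketch that wraps a one-off aws ecs run-task call in a shell function. The function name, its argument order, and the assignPublicIp=ENABLED setting are illustrative assumptions rather than part of the walkthrough; the cluster, task definition, subnet, and security group it expects must already exist.

```shell
# Hypothetical helper: launch a single one-off task from a registered task definition.
# Arguments: cluster name, task definition (family or ARN), subnet ID, security group ID.
# Prints the ARN of the task that was started.
run_mq_task_once() {
  aws ecs run-task \
    --cluster "$1" \
    --task-definition "$2" \
    --launch-type FARGATE \
    --count 1 \
    --network-configuration "awsvpcConfiguration={subnets=[$3],securityGroups=[$4],assignPublicIp=ENABLED}" \
    --query 'tasks[0].taskArn' \
    --output text
}
```

Unlike a service, nothing restarts this task if it stops, which is why the remainder of this paper deploys a service.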

Deploy IBM MQ Advanced Developer Edition on Fargate ECS

In this section, we will guide you through the process of deploying the freely available IBM MQ Advanced Developer Edition on Fargate ECS.

Pre-requisites

Prior to creating any Fargate ECS resources, there are several prerequisites required. Depending on your setup on AWS these steps may differ, but these have been verified in a new AWS account.

- Creating EFS storage for IBM MQ

- Creation of EFS mount points

- Creation of EFS access point

- Creation of a Security Group to allow communication into the MQ deployment

Creating EFS storage for IBM MQ

As mentioned in the Usage Scenarios section, there are various approaches to deploying IBM MQ on Fargate. These instructions focus on the IBM MQ deployments with EFS scenario. This was deliberately chosen as it will be a common scenario and represents the more comprehensive setup. The storage layer will be EFS, and this needs to be created. Like all the instructions, this will be completed using the standard AWS command line to minimize the likelihood of changes (which are more likely to occur in the AWS Web UI).

    aws efs create-file-system \
        --performance-mode generalPurpose \
        --throughput-mode bursting \
        --encrypted \
        --tags Key=Name,Value=ibm-mq-fargate-storage

The command returns a large JSON structure. Review the output to identify the FileSystemId, and store it in a safe location, as it will be needed at a later stage.
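Rather than reading the FileSystemId out of the JSON by hand, the AWS CLI's --query and --output text options can print it directly. The sketch below is one possible way to capture it and to wait until the file system is usable; the function names, the 5-second polling interval, and the roughly two-minute limit are assumptions chosen for illustration.

```shell
# Create the file system with the same options as above and print only its ID.
create_mq_file_system() {
  aws efs create-file-system \
    --performance-mode generalPurpose \
    --throughput-mode bursting \
    --encrypted \
    --tags Key=Name,Value=ibm-mq-fargate-storage \
    --query 'FileSystemId' \
    --output text
}

# Poll until the file system reports the "available" state.
# Argument: file system ID. Returns non-zero after 24 unsuccessful checks.
wait_for_file_system() {
  fs_attempt=0
  while [ "$fs_attempt" -lt 24 ]; do
    fs_state=$(aws efs describe-file-systems --file-system-id "$1" \
      --query 'FileSystems[0].LifeCycleState' --output text)
    [ "$fs_state" = "available" ] && return 0
    fs_attempt=$((fs_attempt + 1))
    sleep 5
  done
  return 1
}
```

A typical use would be FileSystemId=$(create_mq_file_system) followed by wait_for_file_system "$FileSystemId".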

Creation of EFS Mount Points

The EFS storage has been created, but currently there are no mount points, which makes the storage not particularly useful, as nothing is able to connect. Mount points are created for each Availability Zone where containers may run. In the following case, we only have containers within a single Availability Zone, but the process is logically the same for multiple zones: you simply specify a subnet in each of the Availability Zones.

Prior to creating the mount point we need to create a new security group that allows NFS communication into the service. This involves two commands:

    aws ec2 create-security-group --description "IBM MQ EFS Security Group" --group-name ibmmqfargateEFSSG --vpc-id $vpcId

Where $vpcId is your AWS VPC identifier. If you are unsure of this value, you can retrieve it by running:

    aws ec2 describe-vpcs

The create-security-group request returns a JSON response that includes a GroupId value. Retain this for future use. An authorization rule must be added to the security group to allow inbound NFS communication on port 2049:

    aws ec2 authorize-security-group-ingress --group-id $GroupId --protocol tcp --port 2049 --cidr=0.0.0.0/0

Where $GroupId corresponds to the GroupId value from the create-security-group command. The final piece of information required is the subnet to be associated with the mount point. You may already know this ID, but if you do not, you can run the following command to view the available subnets:

    aws ec2 describe-subnets

With these pieces of information, you are ready to create the mount point by running the following command:

    aws efs create-mount-target \
        --file-system-id $FileSystemId \
        --subnet-id $subnetid \
        --security-groups $GroupId
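For the multiple Availability Zone case, the same steps can be scripted. The following is a sketch only: the three function names and their argument order are hypothetical, and it assumes you pass one subnet per Availability Zone.

```shell
# Create the NFS security group and print only its GroupId.
# Argument: VPC ID.
create_efs_security_group() {
  aws ec2 create-security-group \
    --description "IBM MQ EFS Security Group" \
    --group-name ibmmqfargateEFSSG \
    --vpc-id "$1" \
    --query 'GroupId' --output text
}

# List the subnets of a VPC together with their Availability Zones.
# Argument: VPC ID.
list_vpc_subnets() {
  aws ec2 describe-subnets \
    --filters "Name=vpc-id,Values=$1" \
    --query 'Subnets[].[SubnetId,AvailabilityZone]' --output text
}

# Create one mount target per subnet and print each MountTargetId.
# Arguments: file system ID, security group ID, then one or more subnet IDs.
create_mount_targets() {
  mt_fs=$1
  mt_sg=$2
  shift 2
  for mt_subnet in "$@"; do
    aws efs create-mount-target \
      --file-system-id "$mt_fs" \
      --subnet-id "$mt_subnet" \
      --security-groups "$mt_sg" \
      --query 'MountTargetId' --output text || return 1
  done
}
```

For example, create_mount_targets "$FileSystemId" "$GroupId" subnet-a subnet-b would create two mount targets, where subnet-a and subnet-b stand in for your own subnet IDs.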

Creation of EFS Access Point

An access point represents an application-specific entry point into an EFS file system that makes it easier to manage application access. In certain situations, such as running the container as the root user, it is not required; however, for completeness it can be created using the command below:

    aws efs create-access-point \
        --file-system-id $FileSystemId \
        --posix-user "Uid=1001,Gid=0" \
        --root-directory "Path=/mqm,CreationInfo={OwnerUid=1001,OwnerGid=0,Permissions=777}" \
        --tags Key=Name,Value=MQAccessPoint

The EFS ID ($FileSystemId) needs to be customized in the above command based on your environment, using the identifier from the Creating EFS storage for IBM MQ section. A new directory called mqm is created on the EFS storage with the specified ownership and permissions. Any connection via the access point will also use Uid=1001 and Gid=0 for access, which is the same user that the MQ container uses by default.
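The access point ID is needed later in the task definition, so it is convenient to capture it when the access point is created. A possible sketch, with hypothetical function names, that also prints the state, user, and root directory for a quick check:

```shell
# Create the access point with the settings described above and print only its ID.
# Argument: file system ID.
create_mq_access_point() {
  aws efs create-access-point \
    --file-system-id "$1" \
    --posix-user "Uid=1001,Gid=0" \
    --root-directory "Path=/mqm,CreationInfo={OwnerUid=1001,OwnerGid=0,Permissions=777}" \
    --tags Key=Name,Value=MQAccessPoint \
    --query 'AccessPointId' --output text
}

# Print the lifecycle state, POSIX user, and root directory of an access point.
# Argument: access point ID.
show_access_point() {
  aws efs describe-access-points \
    --access-point-id "$1" \
    --query 'AccessPoints[0].[LifeCycleState,PosixUser.Uid,PosixUser.Gid,RootDirectory.Path]' \
    --output text
}
```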

Assumed to have been created already

These instructions assume you have completed the following:

- Created an ECS Fargate Cluster. If this has not been completed, please consult the AWS documentation here.

- Within ECS it is common to create an ecsTaskExecutionRole to facilitate effective logging. These instructions assume that this has been created. If this is not the case, please consult the AWS documentation here.

Steps for Deployment

Now that all the prerequisites have been completed, we are ready to create the ECS Task Definition and deploy the ECS Service.

Register the MQ Fargate ECS Task Definition

Below is a complete task definition with three sections that require mandatory customization for your AWS environment:

- ExecutionRoleArn: this corresponds to the ARN of the ecsTaskExecutionRole role. If you are unsure, please consult the Pre-requisites section.

- AccessPointId: this is the access point ID created in the Pre-requisites section.

- FileSystemId: this is the file system ID created in the Pre-requisites section.

There are many other customizations that you may want to make. For instance, you may want to include additional environment variables (to change the behavior of the deployed Queue Manager), adjust the memory and CPU limits, change the ulimits associated with the compute resources, or expose different ports. All these options and many more are described in the AWS RegisterTaskDefinition documentation.

    {
      "executionRoleArn": "$ExecutionRoleArn",
      "containerDefinitions": [
        {
          "logConfiguration": {
            "logDriver": "awslogs",
            "options": {
              "awslogs-group": "ibmmqfargate",
              "awslogs-region": "eu-west-2",
              "awslogs-stream-prefix": "ibmmq/fargate"
            }
          },
          "portMappings": [
            {
              "hostPort": 9443,
              "protocol": "tcp",
              "containerPort": 9443
            },
            {
              "hostPort": 1414,
              "protocol": "tcp",
              "containerPort": 1414
            }
          ],
          "environment": [
            {
              "name": "LICENSE",
              "value": "accept"
            },
            {
              "name": "MQ_APP_PASSWORD",
              "value": "password"
            },
            {
              "name": "MQ_QMGR_NAME",
              "value": "QM1"
            }
          ],
          "ulimits": [
            {
              "name": "nofile",
              "softLimit": 10240,
              "hardLimit": 10240
            },
            {
              "name": "nproc",
              "softLimit": 4096,
              "hardLimit": 4096
            }
          ],
          "user": "1001:0",
          "mountPoints": [
            {
              "sourceVolume": "queuemanager",
              "containerPath": "/mnt/mqm",
              "readOnly": false
            }
          ],
          "image": "ibmcom/mq:9.2.3.0-r1-amd64",
          "healthCheck": {
            "command": [
              "CMD-SHELL",
              "chkmqhealthy || exit 1"
            ],
            "startPeriod": 300
          },
          "essential": true,
          "name": "ibmmq922"
        }
      ],
      "memory": "1024",
      "family": "ibmmq",
      "requiresCompatibilities": [
        "FARGATE"
      ],
      "networkMode": "awsvpc",
      "cpu": "512",
      "volumes": [
        {
          "efsVolumeConfiguration": {
            "transitEncryption": "ENABLED",
            "authorizationConfig": {
              "iam": "DISABLED",
              "accessPointId": "$AccessPointId"
            },
            "fileSystemId": "$FileSystemId"
          },
          "name": "queuemanager"
        }
      ],
      "tags": [
        {
          "key": "productId",
          "value": "f3beb980b6ca487ea6a3db33262afa3c"
        }
      ]
    }

Once you have completed the customizations and are ready to register the task definition, run the following command:

    aws ecs register-task-definition --cli-input-json file://fargate-task-definition-ibm-mq.json

Where fargate-task-definition-ibm-mq.json corresponds to the location of the JSON file describing the task definition.
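The three mandatory customizations can also be applied with a script instead of editing the JSON by hand. The sketch below assumes the task definition is saved as a template that still contains the literal $ExecutionRoleArn, $AccessPointId, and $FileSystemId placeholders. The function names are hypothetical, and the substitution assumes the values contain no |, & or backslash characters.

```shell
# Look up the ARN of the ecsTaskExecutionRole role.
get_execution_role_arn() {
  aws iam get-role --role-name ecsTaskExecutionRole \
    --query 'Role.Arn' --output text
}

# Replace the three placeholders in a template and write the result to a new file.
# Arguments: template path, output path, execution role ARN, access point ID, file system ID.
render_task_definition() {
  sed \
    -e "s|[\$]ExecutionRoleArn|$3|g" \
    -e "s|[\$]AccessPointId|$4|g" \
    -e "s|[\$]FileSystemId|$5|g" \
    "$1" > "$2"
}

# Register a rendered task definition and print only its ARN.
# Argument: path to the rendered JSON file.
register_task_definition() {
  aws ecs register-task-definition \
    --cli-input-json "file://$1" \
    --query 'taskDefinition.taskDefinitionArn' --output text
}
```

The ARN printed by register_task_definition is the value needed when creating the service.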

Create the MQ Fargate ECS Service on the Cluster

To allow communication to the MQ deployment, a new security group needs to be created. This will allow communication on the MQ data port (1414) and access to the Web Console (9443). If you don’t require access to both, customize as required. This involves three commands:

    aws ec2 create-security-group --description "IBM MQ Fargate ECS Security Group" --group-name ibmmqfargateSG --vpc-id $vpcId

Where $vpcId is your AWS VPC identifier. If you are unsure of this value, you can retrieve it by running:

    aws ec2 describe-vpcs

The create-security-group request returns a JSON response that includes a GroupId value. Retain this for future use. Two authorization rules must be added to allow inbound communication on ports 1414 and 9443:

    aws ec2 authorize-security-group-ingress --group-id $GroupId --protocol tcp --port 9443 --cidr=0.0.0.0/0
    aws ec2 authorize-security-group-ingress --group-id $GroupId --protocol tcp --port 1414 --cidr=0.0.0.0/0

Where $GroupId corresponds to the GroupId value from the create-security-group command.
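If you want to open a different set of ports, or restrict the source range, the authorization step can be written as a small loop. This is a sketch with a hypothetical function name; the CIDR argument is an addition for illustration, and passing 0.0.0.0/0 reproduces the rules above.

```shell
# Add one inbound TCP rule per port to a security group.
# Arguments: security group ID, source CIDR, then one or more ports.
open_mq_ports() {
  op_sg=$1
  op_cidr=$2
  shift 2
  for op_port in "$@"; do
    aws ec2 authorize-security-group-ingress \
      --group-id "$op_sg" --protocol tcp --port "$op_port" --cidr "$op_cidr" || return 1
  done
}
```

For example, open_mq_ports "$GroupId" 0.0.0.0/0 1414 9443 adds both rules in one call.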

The Fargate ECS service will be exposed on the subnets within your VPC. You need to identify the subnet IDs and specify these when creating the service. If you are unsure what these values are, you can run the following command to discover them:

    aws ec2 describe-subnets

You now have all the information required to run the create-service command:

    aws ecs create-service \
        --cluster $clusterName \
        --service-name ibmmqdev \
        --task-definition $taskarn \
        --desired-count 1 \
        --launch-type FARGATE \
        --platform-version LATEST \
        --deployment-configuration maximumPercent=100,minimumHealthyPercent=0 \
        --network-configuration "awsvpcConfiguration={subnets=[$subnetids],securityGroups=[$GroupId],assignPublicIp=ENABLED}" \
        --tags key=productId,value=208423bb063c43288328b1d788745b0c \
        --propagate-tags SERVICE

- $clusterName: corresponds to the name of the ECS Cluster where the service will be deployed.

- $taskarn: the Task ARN returned when registering the task definition in the Register the MQ Fargate ECS Task Definition section.

- $subnetids: the comma-separated list of subnet identifiers that the service should be exposed to.

- $GroupId: the security group identifier that you created in the previous step.
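After create-service returns, the task still needs time to start and pass its health check. One way to block until the service has settled is the CLI's built-in waiter, sketched below; the function name is hypothetical, and the service name defaults to the ibmmqdev name used above.

```shell
# Wait for the service to reach a steady state, then print its status,
# desired task count, and running task count.
# Arguments: cluster name, optional service name (defaults to ibmmqdev).
wait_for_mq_service() {
  aws ecs wait services-stable --cluster "$1" --services "${2:-ibmmqdev}" || return 1
  aws ecs describe-services --cluster "$1" --services "${2:-ibmmqdev}" \
    --query 'services[0].[status,desiredCount,runningCount]' --output text
}
```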

Once the task is running it will be exposed to a public IP address. Within the AWS web UI this can be seen on the running task details page, but from the command line several commands are required:

Retrieval of the running task ID:

aws ecs list-tasks --service-name ibmmqdev --cluster $clusterName

Retrieve the networkInterfaceId from the running task details

aws ecs describe-tasks --tasks $runningTaskARN --cluster $clusterName

Retrieve the public IP address from the network interface

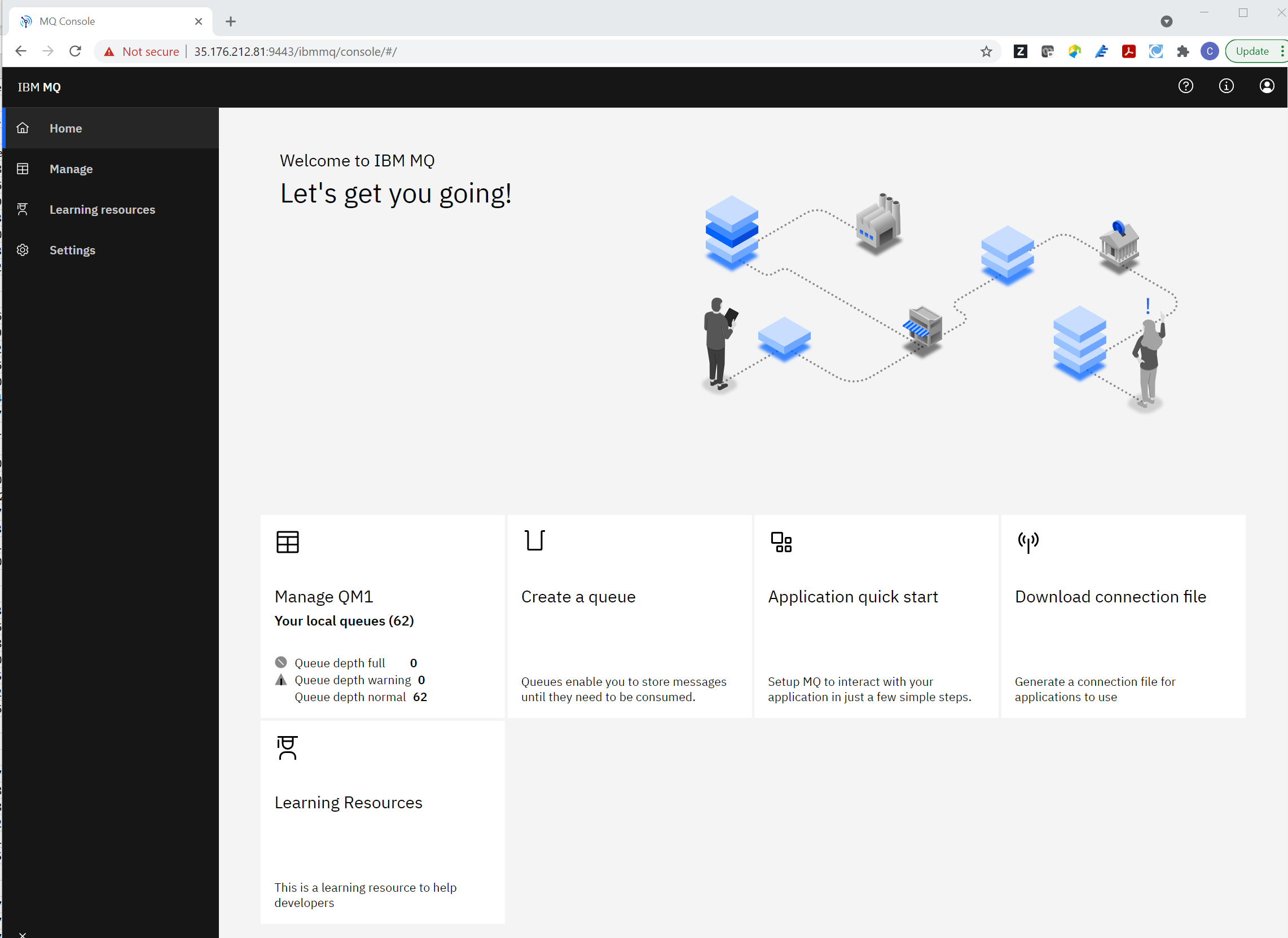

aws ec2 describe-network-interfaces --network-interface-ids $networkInterfaceId | grep PublicIpWith this information, you can use a standard web browser to access the MQ Web UI.

Assuming you have not changed the task definition, the username is “admin” and the password is “passw0rd”.
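The three lookups can be chained so that the public IP address is printed in one step. The sketch below uses the CLI's --query option in place of grep. The function names are hypothetical, it assumes the service runs a single task, and the /ibmmq/console path is the default MQ Web Console location, which may differ if you have customized the image.

```shell
# Print the public IP address of the first running task of a service.
# Arguments: cluster name, optional service name (defaults to ibmmqdev).
mq_public_ip() {
  ip_task=$(aws ecs list-tasks --cluster "$1" --service-name "${2:-ibmmqdev}" \
    --query 'taskArns[0]' --output text) || return 1
  ip_eni=$(aws ecs describe-tasks --cluster "$1" --tasks "$ip_task" \
    --query "tasks[0].attachments[0].details[?name=='networkInterfaceId'].value | [0]" \
    --output text) || return 1
  aws ec2 describe-network-interfaces --network-interface-ids "$ip_eni" \
    --query 'NetworkInterfaces[0].Association.PublicIp' --output text
}

# Build the Web Console URL for a given IP address (port 9443 as exposed above).
mq_console_url() {
  printf 'https://%s:9443/ibmmq/console\n' "$1"
}
```

For example, mq_console_url "$(mq_public_ip "$clusterName")" prints the address to open in the browser.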

Summary

AWS Fargate ECS is an easy-to-use container orchestration solution with the added advantage that resources can be quickly and easily scaled to 0. There are many scenarios where IBM MQ is a natural fit and we have demonstrated how you can get started in minutes.
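As a closing illustration of scaling to 0, the desired count of the service can be changed with a single update-service call. The function name below is hypothetical; the default service name matches the one used in this paper.

```shell
# Set the desired task count of a service and print the new value.
# Arguments: cluster name, desired count, optional service name (defaults to ibmmqdev).
scale_mq_service() {
  aws ecs update-service --cluster "$1" --service "${3:-ibmmqdev}" --desired-count "$2" \
    --query 'service.desiredCount' --output text
}
```

Running scale_mq_service "$clusterName" 0 stops the running task, and scale_mq_service "$clusterName" 1 starts it again.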