Taming the Virtual Threads: Embracing Concurrency With Pitfall Avoidance

In the previous article, you learned about the history of Java threads. You also saw how Quarkus lets developers run traditional blocking applications on virtual threads (Project Loom) with a single @RunOnVirtualThread annotation, applied at either the method or the class level.

Unfortunately, the Java ecosystem as a whole still has a long way to go before it fully supports virtual threads. For example, you are probably used to pulling Java libraries into your projects as Maven or Gradle dependencies. That means you might not know how those libraries behave on platform threads. In common development practice you don't need to know, because you simply use them. Given the vast ecosystem of Java libraries, you will probably hit the following challenges and pitfalls, which can end up breaking your application when it runs on virtual threads.

Pinning

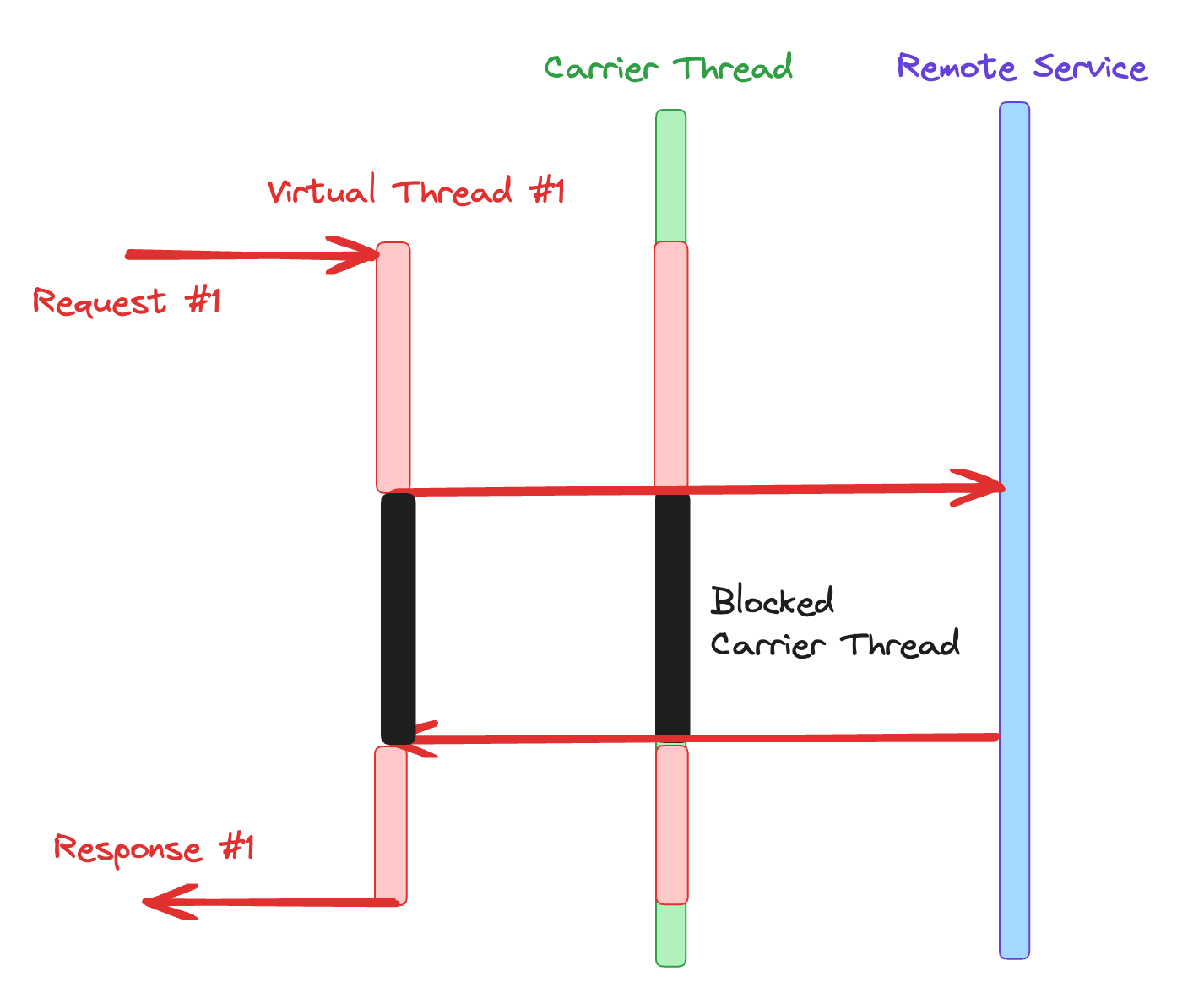

In Java virtual threads, pinning is the condition in which a virtual thread is stuck to its carrier thread, which is the platform thread it is mounted on. Normally a blocked virtual thread is unmounted and its state is moved to heap memory. A pinned virtual thread can't be unmounted this way, for one of several reasons, so its carrier thread blocks along with it.

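For example, when a virtual thread runs code inside a synchronized block or method, it is pinned to its carrier thread, as shown in Figure 1. In JDK 21, the JVM tracks monitor ownership by carrier thread rather than by virtual thread. A virtual thread therefore can't unmount while it holds a monitor, and if it blocks inside the synchronized block, its carrier thread is blocked too. (JDK 24 removes this limitation for synchronized through JEP 491.)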

Figure 1: Pinning carrier thread

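Not every blocking operation pins a virtual thread. Most blocking operations in the JDK unmount the virtual thread and free its carrier thread for other virtual threads. Examples include socket I/O, Thread.sleep(), and waiting for a java.util.concurrent lock. Some blocking operations, however, capture the carrier thread because of limitations in the operating system (for example, many file system operations) or in the JDK (for example, Object.wait()). Pinning also happens when a virtual thread calls native code through JNI or a foreign function, because the JVM can't move native stack frames to the heap.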
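For contrast with pinning, here is a minimal sketch of the normal case. It starts many virtual threads that sleep without holding a monitor. Because Thread.sleep() unmounts a virtual thread that isn't pinned, a small pool of carrier threads can serve thousands of sleeping tasks at the same time. The class and method names are illustrative, and the measured time depends on the machine.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

public class UnmountOnSleep {

    // Starts `tasks` virtual threads that each sleep for `sleep`.
    // Thread.sleep() unmounts a virtual thread that is not pinned, so the
    // carrier threads are free to run other virtual threads meanwhile.
    static int runSleepingTasks(int tasks, Duration sleep) {
        AtomicInteger completed = new AtomicInteger();
        try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(() -> {
                    Thread.sleep(sleep);
                    completed.incrementAndGet();
                    return null;
                });
            }
        } // close() waits for every submitted task to finish
        return completed.get();
    }

    public static void main(String[] args) {
        Instant start = Instant.now();
        int completed = runSleepingTasks(10_000, Duration.ofSeconds(1));
        Duration elapsed = Duration.between(start, Instant.now());
        // Typically close to one second in total, because the sleeping
        // virtual threads don't hold on to their carrier threads.
        System.out.println(completed + " tasks in " + elapsed.toMillis() + " ms");
    }
}
```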

Here is an example of code that pins the carrier thread:

```java
public class PinningExample {

    private final Object monitor = new Object();

    // On JDK 21, sleeping while holding a monitor pins the virtual thread
    // to its carrier thread: the carrier can't run any other virtual
    // thread until this one leaves the synchronized block.
    public void makePinTheCarrierThread() throws InterruptedException {
        synchronized (monitor) {
            Thread.sleep(1000);
        }
    }
}
```

Virtual threads promise better concurrency and performance, but many Java libraries still pin carrier threads, which can hurt application performance. Quarkus has addressed this proactively by patching several libraries, such as Narayana and Hibernate ORM, to eliminate pinning. Even so, as you adopt virtual threads, be careful with third-party libraries, because not all code has been adapted for virtual threads yet. The ecosystem is gradually moving toward virtual-thread-friendly code, and the benefits will keep growing as it adapts.
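A common way to avoid this kind of pinning on JDK 21 is to guard blocking code with java.util.concurrent.locks.ReentrantLock instead of synchronized. A virtual thread that waits for such a lock, or blocks while holding it, can still unmount from its carrier thread. The following is a minimal sketch, not code from Quarkus or any patched library. The class, the counter, and the runAll helper are hypothetical, and the sleep stands in for real blocking work.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.locks.ReentrantLock;

public class LockInsteadOfSynchronized {

    // Hypothetical shared state guarded by a ReentrantLock instead of a monitor.
    private final ReentrantLock lock = new ReentrantLock();
    private int counter = 0;

    // A virtual thread that blocks here (waiting for the lock, or sleeping
    // while holding it) can unmount from its carrier thread, unlike one
    // that sleeps inside a synchronized block on JDK 21.
    void incrementSlowly() throws InterruptedException {
        lock.lock();
        try {
            Thread.sleep(10); // simulated blocking work done under the lock
            counter++;
        } finally {
            lock.unlock();
        }
    }

    int counter() {
        lock.lock();
        try {
            return counter;
        } finally {
            lock.unlock();
        }
    }

    // Runs `tasks` virtual threads that each call incrementSlowly() once.
    static int runAll(int tasks) {
        LockInsteadOfSynchronized example = new LockInsteadOfSynchronized();
        try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(() -> {
                    example.incrementSlowly();
                    return null;
                });
            }
        } // close() waits for all submitted tasks to finish
        return example.counter();
    }

    public static void main(String[] args) {
        System.out.println(runAll(100)); // prints 100
    }
}
```

The lock still serializes the critical section, just as synchronized would. The difference is that the waiting virtual threads park and give their carrier threads back to the scheduler instead of holding them.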

Monopolization

When a virtual thread runs a long computation, it occupies its carrier thread for the whole time and prevents other virtual threads from using that carrier. The virtual thread scheduler doesn't preempt a running virtual thread. A virtual thread gives up its carrier only when it blocks, yields, or finishes, so CPU-bound work with no blocking points holds the carrier until it is done. Inefficient resource management within the virtual thread can also monopolize the carrier thread. One example is busy-waiting in a loop instead of blocking.

Monopolization can hurt the performance and scalability of virtual thread applications. It increases contention for carrier threads, reduces overall concurrency, and can even starve or deadlock other virtual threads. To mitigate these issues, break lengthy computations into smaller, more manageable tasks so that other virtual threads can interleave on the carrier thread.
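One way to apply that advice is to split the work into chunks and call Thread.yield() between them. This sketch assumes the computation can be divided that way. On a virtual thread, Thread.yield() is a hint that lets the scheduler mount other virtual threads on the same carrier before this one continues. The summation, the sumInChunks name, and the chunk size are illustrative choices only.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class ChunkedComputation {

    // Sums 0..n-1 in chunks of `chunkSize`. After each chunk the thread
    // calls Thread.yield(), which gives other virtual threads waiting for
    // a carrier a chance to run before the computation resumes.
    static long sumInChunks(long n, long chunkSize) {
        long sum = 0;
        for (long start = 0; start < n; start += chunkSize) {
            long end = Math.min(start + chunkSize, n);
            for (long i = start; i < end; i++) {
                sum += i;
            }
            Thread.yield(); // a scheduling hint, not a guarantee
        }
        return sum;
    }

    public static void main(String[] args) throws Exception {
        try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {
            Future<Long> result = executor.submit(() -> sumInChunks(1_000_000, 10_000));
            System.out.println(result.get()); // prints 499999500000
        }
    }
}
```

Yielding doesn't make the computation cheaper. It only spreads the carrier's time more fairly among the virtual threads that share it.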

Object Pooling

Platform threads are expensive, so applications reuse them through thread pools, and libraries often rely on that reuse to cache frequently used objects, optimizing performance and reducing memory overhead. ThreadLocal is a good example of this kind of object pooling. Each pooled platform thread creates the expensive object once and reuses it for every task it runs, so the thread pool effectively doubles as an object pool. Virtual threads, however, are cheap and are meant to be created per task rather than pooled. A new virtual thread finds its ThreadLocal empty, so it has to create a new instance instead of retrieving one from the pool. In addition, when the virtual thread finishes, the object becomes garbage instead of being returned to the pool for later reuse.

When you use Java libraries that rely on this pooling pattern, performance on virtual threads suffers. Every virtual thread gets its own instance of the object, so you will see many allocations of large objects. Rewriting the libraries to avoid this pattern should be a relatively easy job. Quarkus also uses Jackson, which stores expensive objects in thread locals. Luckily, there's already a PR that addresses this problem and makes Quarkus applications more virtual-thread-friendly.
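The following minimal sketch shows why a ThreadLocal cache stops paying off on virtual threads and shows one thread-independent alternative: a pool that all threads share. It is an illustration only, not the approach taken by Jackson or Quarkus. Buffer, SharedPool, and runTasks are hypothetical names, and a production pool would also bound its size.

```java
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

public class SharedPoolExample {

    // Hypothetical expensive object, for example a large byte buffer.
    static final class Buffer {
        final byte[] bytes = new byte[64 * 1024];
    }

    // Minimal unbounded pool shared by all threads: objects are borrowed
    // and returned explicitly instead of being tied to a (virtual) thread.
    static final class SharedPool<T> {
        private final ConcurrentLinkedQueue<T> idle = new ConcurrentLinkedQueue<>();
        private final Supplier<T> factory;
        final AtomicInteger created = new AtomicInteger();

        SharedPool(Supplier<T> factory) {
            this.factory = factory;
        }

        T acquire() {
            T obj = idle.poll();
            if (obj == null) { // pool empty: create a new object
                created.incrementAndGet();
                obj = factory.get();
            }
            return obj;
        }

        void release(T obj) {
            idle.offer(obj);
        }
    }

    static final AtomicInteger threadLocalCreations = new AtomicInteger();

    // ThreadLocal cache: works well with pooled platform threads, but every
    // new virtual thread starts with an empty slot and creates its own Buffer.
    static final ThreadLocal<Buffer> CACHED = ThreadLocal.withInitial(() -> {
        threadLocalCreations.incrementAndGet();
        return new Buffer();
    });

    // Runs each task on its own new virtual thread and waits for all of them.
    static void runTasks(int tasks, Runnable task) {
        try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(task);
            }
        }
    }

    public static void main(String[] args) {
        SharedPool<Buffer> pool = new SharedPool<>(Buffer::new);

        runTasks(1_000, () -> CACHED.get().bytes[0] = 1);
        runTasks(1_000, () -> {
            Buffer buffer = pool.acquire();
            try {
                buffer.bytes[0] = 1;
            } finally {
                pool.release(buffer);
            }
        });

        // One Buffer per virtual thread: prints 1000.
        System.out.println("ThreadLocal creations: " + threadLocalCreations.get());
        // Usually far fewer; depends on how many tasks overlap in time.
        System.out.println("Shared pool creations: " + pool.created.get());
    }
}
```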

Conclusion

You learned about the challenges you are likely to face when you run your microservices on virtual threads. These challenges grow as you import more Java libraries to implement a variety of business domains, such as REST clients, messaging, gRPC, and event buses. There's still a long way to go before all business services can run on virtual threads.

In the meantime, Quarkus has already patched or rewritten many of its extensions for virtual threads. These include Apache Kafka, AMQP, Apache Pulsar, JMS, REST client, Redis, Mailer, event bus, scheduled methods, and gRPC. In the next article, I'll compare the performance of virtual threads with that of blocking and reactive applications, showing where virtual threads do better and where they do worse.