Cache in Java With LRU Eviction Policy

Introduction

LRU (or Least Recently Used) is a cache eviction strategy, wherein if the cache size has reached the maximum allocated capacity, the least recently accessed objects in the cache will be evicted. Also, the objects in the cache could be accessed by multiple threads in an application so is very important that the cache has good synchronization mechanism in-built. This article describes the implementation of a Java based cache with LRU eviction strategy; but fundamentally applies to any programming language.

Background

Many a times, developers embed caching frameworks in their application like Ehcache (which is a great library for general purpose, in-memory caching). But there are ocassions when there is no liberty to use such frameworks or libraries; like cases for lightweight Java applications or mobile applications which are targeted to run on minimal memory footprint (or sometimes due to delivery deadlines there is no extra time learn and configure 3rd party caching frameworks and deploy to production). In these times, such a simple, light-weight LRUCache class can be used and with sufficient unit test coverage the cache can be heaviliy relied upon.

This article explains implementing a cache with LRU policy using ConcurrentHashMap and a Doubly-Linked-List data structure. The same can be achieved using LinkedHashMap, however it is not thread safe by default (and maybe in the next article I'll submit one using LinkedHashMap).

Design

Before designing the LRU based cache, we need to understand the some important properties of a cache:

Should offer minimum latency for get operations in order of O(1)

Supports concurrent read/write access

Cache limit should be set to a fixed size (in terms of object count)

Inserting a new object into the cache should always be successful and will be identified by a key (that is unique)

The object eviction policies should be pre-defined, in this case it is LRU!

Considering above 5 properties, a simple LRU cache can be implemented using Java's ConcurrentHashMap (which meets properties 1-4) and simple Doubly-Linked-List (meets property 5).

A ConcurrentHashMap is used - to save objects and access them by keys so that the access time is O(1) order, offers concurrent access, size limit can be set and objects can be accessed by using a key.

A Doubly-Linked-List (DLL) maintains the pointers to the same objects (in the map). This DLL helps us achieve the LRU eviction strategy, where the objects accessed frequently will be at the tail end and the objects accessed the least will be towards the head of the list. When a new object is being inserted into the cache and if the cache has reached it's maximum capacity, the LRU eviction is triggered. Basically from DLL, the head node (which is least recently used object) will be removed and the same will be deleted from the map.

Flow Diagram

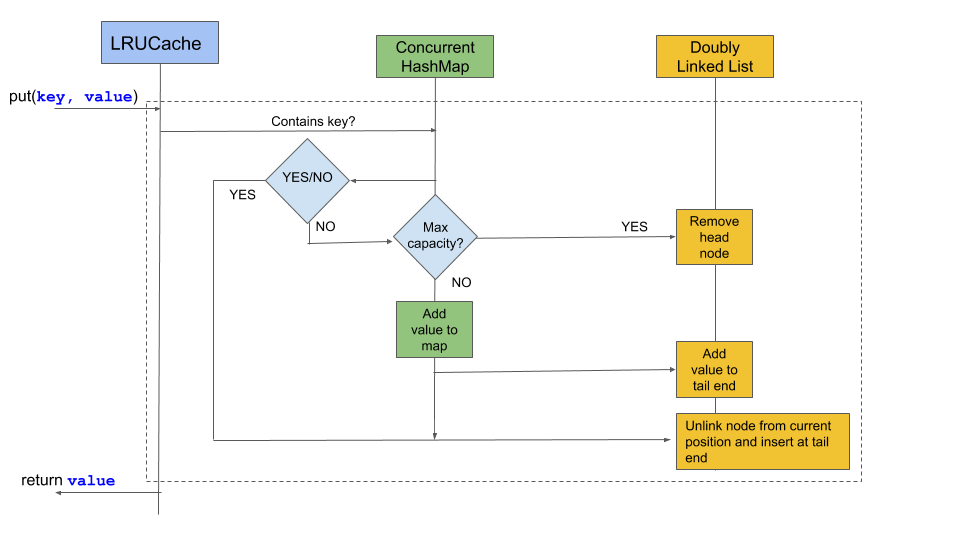

Adding object to cache put(K k, V v)

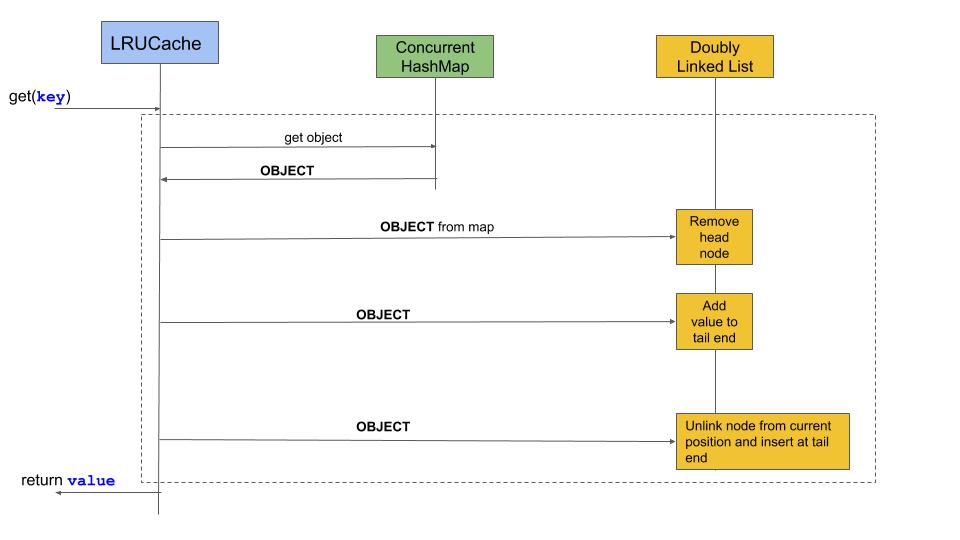

Fetching object from cache get(K k)

Using the Code

The LRU cache implementation is realized by implementing class LRUCache<K, V> which works on generics. This cache objects are identified by a key, K - uniquely identifiable parameter (immutable objects highly preferred) and V - value can be any type of custom object. If a key is repeated for another object, then the cache would replace the older object with newer one.

Object instantiation:

// 1. Create a new LRUCache instance by passing the initial storage capacity (10 in below example)

LRUCache<String, String> cache = new LRUCache<String, String>(10);Inserting objects into cache using the put(K k, V v) method

// inserts 10 objects to cache

for(int i=1; i<=10; i++) {

cache.put(String.format("key-%d", i), String.format("value-%d", i));

}Utility method to print objects in the cache using method printCache() (will be printed in order of least recently accessed to latest object accessed)

// print the cache objects

cache.printCache();Method to get objects stored inthe cache using corressponding key using method get(K k)

// access the first object and print the cache

cache.get("key-1");

// printing cache after accessing key-1

cache.printCache();

Improvements

The LRUCache class is very basic and there are other functionalities missing like deleting object from cache. It will be a good idea if the LRUCache class implements a Cache interface, so that there can be multiple implementations of caches using different eviction strategies. Some of the other evictions that can be considered could be:

First-in-first-out

Random LRU

Sorted (based on some sorting order)

Points of Interest

Read Java's ConcurrentHashMap - it is built to perform and has wonderful abilities over the normal HashMap if you are running in a multi-threaded environment. There are multiple cache eviction strategies like Most-recently used (MRU), count-min sketch, time-to-live etc and all of these have specific use cases. LRU is the most commonly used eviction strategy used and solves most of our needs.

The use of caching frameworks and distributed caches have grown in the last couple of years due to high data volume applications. The challenge of applying these eviction strategies gets really interesting when memory is limited, the objects stored are distributed across multiple nodes and eviction has to applied across this distribution, saving large objects and binary data etc. REDIS and Memcache are 2 well know distributed caching servers used. REDIS offers a little more than plain caching, it is a data-structure cache with persistence option to the disk.

In the current implementation, a plain simple class is used to implement LRU based cache and storing objects using key-value pairs.

In the next iteration of this implementation, the plan is to build a simple caching library with various functionalities like adding POJO objects, support Set interfaces. Addition of Factory patter to create various caches and externalize the caching strategy (like LRU, MRU, time-based etc).

Full Code

package io.howto;

import java.util.concurrent.ConcurrentHashMap;

/**

*

* LRUCache: Class to cache objects based on LRU (Least Recently Used) cache eviction strategy,

* wherein if the cache size has reached the maximum allocated capacity, the

* least recently used objects in the cache will be evicted.

*

* See the runner function(a.k.a main(...)) to see the working.

*

* @author sunil

*

* @param <K>

* @param <V>

*

* <p>

* Date: 14/Nov/2019

* </p>

*/

public final class LRUCache<K, V> {

/**

* A doubly-linked-list implementation to save objects into the hashmap

* as key-value pari.

*

* @author sunil

*

* @param <K>

* @param <V>

*/

private static class Node<K, V> {

private V value;

private K key;

private Node<K, V> next, prev;

public Node(K key, V value) {

this.key = key;

this.value = value;

}

@Override

public String toString() {

return value.toString();

}

}

/**

* The maximum number of elements that can be cached, should be set during instantiation

* time.

*/

private final int maxCapacity;

/**

* Use {@linkplain ConcurrentHashMap} here to maintain the cache of objects.

* Also this offers thread safe access of the cache.

*/

private ConcurrentHashMap<K, Node<K, V>> map;

/**

* A key-value representation of the cache object identified by a cache key.

* This is actually a doubly-linked list which maintains the most recently accessed

* objects (read/write) at the tail-end and the least read objects at the head.

*/

private Node<K, V> head, tail;

public LRUCache(int maxCapacity) {

this(16, maxCapacity);

}

public LRUCache(int initialCapacity, int maxCapacity) {

this.maxCapacity = maxCapacity;

if (initialCapacity > maxCapacity)

initialCapacity = maxCapacity;

map = new ConcurrentHashMap<>(initialCapacity);

}

/**

* Removes a node from the head position doubly-linked list.

* @param node

*/

private void removeNode(Node<K, V> node) {

if (node == null)

return;

if (node.prev != null) {

node.prev.next = node.next;

} else {

head = node.next;

}

if (node.next != null) {

node.next.prev = node.prev;

} else {

tail = node.prev;

}

}

/**

* Offers a node to the tail-end of the doubly-linked list because

* it was recently read or written.

* @param node

*/

private void offerNode(Node<K, V> node) {

if (node == null)

return;

if (head == null) {

head = tail = node;

} else {

tail.next = node;

node.prev = tail;

node.next = null;

tail = node;

}

}

/**

* Adds a new object to the cache. If the cache size has reached it's capacity,

* then the least recently accessed object will be evicted.

*

* @param key

* @param value

*/

public void put(K key, V value) {

if (map.contains(key)) {

Node<K, V> node = map.get(key);

node.value = value;

removeNode(node);

offerNode(node);

} else {

if (map.size() == maxCapacity) {

System.out.println("maxCapacity of cache reached");

map.remove(head.key);

removeNode(head);

}

Node<K, V> node = new Node<K, V>(key, value);

offerNode(node);

map.put(key, node);

}

}

/**

* Fetches an object from the cache (could be null if no such mapping exists).

* If the object is found in the cache, then it will be moved to the tail-end of the

* doubly-linked list to indicate that it was recently accessed.

*

* @param key

* @param value

*/

public V get(K key) {

Node<K, V> node = map.get(key);

removeNode(node);

offerNode(node);

return node != null ? node.value : null;

}

/**

* Utility function to print the cache objects.

*/

public void printCache() {

Node<K, V> curr = head;

while (curr != null) {

System.out.print(curr.value + " -> ");

curr = curr.next;

}

System.out.println();

}

/**

* Runner program to test the LRU cache

* @param args

*/

public static void main(String[] args) {

/**

* 1. create LRUCache of initial capacity 10

* 2. insert 10 objects to cache

* 3. print the cache objects

* 4. access the first object and print the cache

* 5. insert new objects to cache

* 6. print the cache and observe that the least recently used

* objects are evicted

*/

// 1. initiate the cache with capacity 10

LRUCache<String, String> cache = new LRUCache<String, String>(10);

// 2. insert 10 objects to cache

for(int i=1; i<=10; i++) {

cache.put(String.format("key-%d", i), String.format("value-%d", i));

}

// 3. print the cache objects

System.out.println("printing cache:");

cache.printCache();

// 4. access the first object and print the cache

cache.get("key-1");

System.out.println("printing cache after accessing key-1:");

cache.printCache();

// 5. insert new objects to cache

for(int i=11; i<=15; i++) {

cache.put(String.format("key-%d", i), String.format("value-%d", i));

}

// 6. print the cache and observe that the least recently used objects are evicted

System.out.println("printing cache after adding new objects:");

cache.printCache();

}

}