Optimizing Pgbench for CockroachDB Part 2

Previous Articles

I've written about pgbench before, you may find those articles below:

- Using PGBench with CockroachDB Serverless

- Optimizing pgbench for CockroachDB Part 1

Motivation

I am writing a two-part article on optimizations in pgbench. Pgbench is a common benchmarking utility that comes bundled with PostgreSQL. It's ubiquitous and widely accepted as a standard tool to test database performance. Up until version 15 of pgbench, I was unable to improve performance with CockraochDB and pgbench. With this version, we now have the ability to retry transactions and it improves the performance posture for CockroachDB. However, this is not the only way to address performance issues. Today, I am going to expand on the options available to us and move the performance needle further.

High-Level Steps

- Demonstrate the inefficiencies

- Demonstrate the workaround

Step-by-Step Instructions

Deploy a CockroachDB Cluster

I've designed this tutorial to be reproducible, in fact, I have a Docker environment you may use to try or use the serverless tier, the same way as I did in the original article.

Demonstrate the Inefficiencies

I am going to pick up where I left off and will assume the database was initialized the same way and the same scale was used.

Let's capture a baseline run for posterity. I am going to use my serverless cluster in GCP for the demonstration.

pgbench \

--host=${PGHOST} \

--file=tpcb-original.sql@1 \

--client=8 \

--jobs=8 \

--username=${PGUSER} \

--port=${PGPORT} \

-T 60 \

-P 5 \

--failures-detailed \

--max-tries=10

${PGDATABASE}pgbench (15.1 (Homebrew), server 13.0.0)

progress: 5.0 s, 12.4 tps, lat 469.790 ms stddev 512.002, 0 failed, 1 retried, 1 retries

progress: 10.0 s, 13.6 tps, lat 609.798 ms stddev 1000.936, 0 failed, 6 retried, 8 retries

progress: 15.0 s, 13.2 tps, lat 534.850 ms stddev 700.306, 0 failed, 6 retried, 6 retries

progress: 20.0 s, 13.4 tps, lat 660.056 ms stddev 1169.177, 0 failed, 6 retried, 8 retries

progress: 25.0 s, 13.8 tps, lat 508.987 ms stddev 615.082, 0 failed, 5 retried, 7 retries

progress: 30.0 s, 13.4 tps, lat 604.392 ms stddev 1254.808, 0 failed, 4 retried, 6 retries

progress: 35.0 s, 13.2 tps, lat 707.448 ms stddev 1245.547, 0 failed, 4 retried, 11 retries

progress: 40.0 s, 14.0 tps, lat 509.807 ms stddev 599.558, 0 failed, 5 retried, 7 retries

progress: 45.0 s, 13.6 tps, lat 596.629 ms stddev 1029.388, 0 failed, 4 retried, 10 retries

progress: 50.0 s, 13.4 tps, lat 519.641 ms stddev 999.496, 0 failed, 3 retried, 5 retries

progress: 55.0 s, 13.4 tps, lat 706.287 ms stddev 1522.329, 0 failed, 6 retried, 14 retries

progress: 60.0 s, 13.0 tps, lat 552.622 ms stddev 814.466, 0 failed, 3 retried, 3 retries

transaction type: tpcb-original.sql

scaling factor: 1

query mode: simple

number of clients: 8

number of threads: 8

maximum number of tries: 10

duration: 60 s

number of transactions actually processed: 810

number of failed transactions: 0 (0.000%)

number of serialization failures: 0 (0.000%)

number of deadlock failures: 0 (0.000%)

number of transactions retried: 55 (6.790%)

total number of retries: 89

latency average = 592.250 ms

latency stddev = 1016.218 ms

initial connection time = 364.134 ms

tps = 13.436101 (without initial connection time)Recall the original tpcb transaction. This is typical of a legacy application where several statements represent a business function wrapped in an explicit transaction.

\set aid random(1, 100000 * :scale)

\set bid random(1, 1 * :scale)

\set tid random(1, 10 * :scale)

\set delta random(-5000, 5000)

BEGIN;

UPDATE pgbench_accounts SET abalance = abalance + :delta WHERE aid = :aid;

SELECT abalance FROM pgbench_accounts WHERE aid = :aid;

UPDATE pgbench_tellers SET tbalance = tbalance + :delta WHERE tid = :tid;

UPDATE pgbench_branches SET bbalance = bbalance + :delta WHERE bid = :bid;

INSERT INTO pgbench_history (tid, bid, aid, delta, mtime) VALUES (:tid, :bid, :aid, :delta, CURRENT_TIMESTAMP);

END;There are five statements wrapped with a BEGIN and an END. In CockroachDB, they are executed as a single explicit transaction. The problem stems from having pgbench execute these statements one at a time. Each statement incurs round-trip latency to the server. To demonstrate the problem, I am going to run pgbench with a debug flag, i.e. -d.

pgbench \

--host=${PGHOST} \

--no-vacuum \

--file=tpcb-original.sql@1 \

--client=1 \

--jobs=1 \

--username=${PGUSER} \

--port=${PGPORT} \

-T 1 \

-P 1 \

--failures-detailed \

-d \

${PGDATABASE}pgbench: client 0 executing script "tpcb-original.sql"

pgbench: client 0 executing \set aid

pgbench: client 0 executing \set bid

pgbench: client 0 executing \set tid

pgbench: client 0 executing \set delta

pgbench: client 0 sending BEGIN;

pgbench: client 0 receiving

pgbench: client 0 receiving

pgbench: client 0 sending UPDATE pgbench_accounts SET abalance = abalance + -3135 WHERE aid = 60855;

pgbench: client 0 receiving

pgbench: client 0 receiving

pgbench: client 0 sending SELECT abalance FROM pgbench_accounts WHERE aid = 60855;

pgbench: client 0 receiving

pgbench: client 0 receiving

pgbench: client 0 sending UPDATE pgbench_tellers SET tbalance = tbalance + -3135 WHERE tid = 2;

pgbench: client 0 receiving

pgbench: client 0 receiving

pgbench: client 0 sending UPDATE pgbench_branches SET bbalance = bbalance + -3135 WHERE bid = 1;

pgbench: client 0 receiving

pgbench: client 0 receiving

pgbench: client 0 sending INSERT INTO pgbench_history (tid, bid, aid, delta, mtime) VALUES (2, 1, 60855, -3135, CURRENT_TIMESTAMP);

pgbench: client 0 receiving

pgbench: client 0 receiving

pgbench: client 0 sending END;

pgbench: client 0 receiving

pgbench: client 0 receivingNotice the statements are sent to the server one at a time. Every statement is sent in a separate round trip to the cluster. When a client application is farther from the server, this round-trip latency can add up quickly. Considering you have five statements in the tbcb-original.sql file and each roundtrip are 30ms, completing the entire transaction will tack on 150ms of additional latency.

artem@artem-serverless-2963.g8z.cockroachlabs.cloud:26257/defaultdb> select ?column?

------------

1

(1 row)

Time: 34ms total (execution 0ms / network 34ms)Here's a screenshot of this transaction executed by my client in New Jersey against a cluster in us-east1, South Carolina in GCP, where my client latency is 30ms.

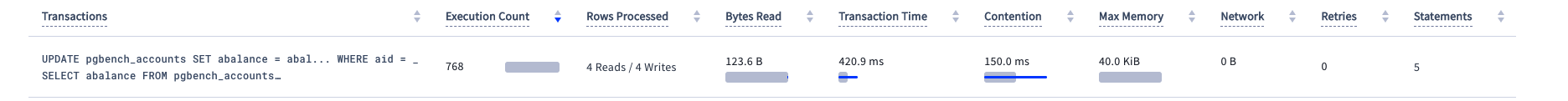

Notice the transaction time is 420ms

A lot of the time comes from the round trip time as each individual statement cannot individually contribute to the high transaction time above.

Demonstrate the Workaround

The very first thing we are going to do is see if we can reduce the number of statements. I can see a quick optimization when I see a write followed by a read from the same row. There is a name for that pattern, called "read your writes". Our docs have an example of the pattern and the associated workaround.

Given the following two statements

UPDATE pgbench_accounts SET abalance = abalance + :delta WHERE aid = :aid;

SELECT abalance FROM pgbench_accounts WHERE aid = :aid;We can rewrite them as one

UPDATE pgbench_accounts SET abalance = abalance + :delta WHERE aid = :aid RETURNING abalance;

Which will do the same and return a balance in the same network hop with the update.

Our new script will look like so:

BEGIN;

UPDATE pgbench_accounts SET abalance = abalance + :delta WHERE aid = :aid RETURNING abalance;

UPDATE pgbench_tellers SET tbalance = tbalance + :delta WHERE tid = :tid;

UPDATE pgbench_branches SET bbalance = bbalance + :delta WHERE bid = :bid;

INSERT INTO pgbench_history (tid, bid, aid, delta, mtime) VALUES (:tid, :bid, :aid, :delta, CURRENT_TIMESTAMP);

END;Let's see if it made any difference

pgbench \

--host=${PGHOST} \

--no-vacuum \

--file=tpcb-no-select.sql@1 \

--client=8 \

--jobs=8 \

--username=${PGUSER} \

--port=${PGPORT} \

-T 60 \

-P 5 \

--failures-detailed \

--max-tries=10

${PGDATABASE}pgbench (15.1 (Homebrew), server 13.0.0)

progress: 5.0 s, 11.2 tps, lat 556.449 ms stddev 560.141, 0 failed, 7 retried, 8 retries

progress: 10.0 s, 13.4 tps, lat 463.555 ms stddev 851.994, 0 failed, 4 retried, 6 retries

progress: 15.0 s, 13.4 tps, lat 521.209 ms stddev 1117.149, 0 failed, 4 retried, 5 retries

progress: 20.0 s, 13.4 tps, lat 445.519 ms stddev 853.578, 0 failed, 5 retried, 5 retries

progress: 25.0 s, 12.4 tps, lat 1013.037 ms stddev 2471.913, 0 failed, 10 retried, 21 retries

progress: 30.0 s, 13.8 tps, lat 522.921 ms stddev 836.386, 0 failed, 7 retried, 8 retries

progress: 35.0 s, 13.8 tps, lat 634.993 ms stddev 1251.407, 0 failed, 7 retried, 12 retries

progress: 40.0 s, 13.6 tps, lat 498.344 ms stddev 888.117, 0 failed, 4 retried, 5 retries

progress: 45.0 s, 7.2 tps, lat 862.869 ms stddev 1712.450, 0 failed, 3 retried, 6 retries

progress: 50.0 s, 11.6 tps, lat 1007.349 ms stddev 2035.622, 0 failed, 4 retried, 11 retries

progress: 55.0 s, 14.2 tps, lat 477.565 ms stddev 569.848, 0 failed, 7 retried, 9 retries

progress: 60.0 s, 8.4 tps, lat 1063.413 ms stddev 1290.893, 0 failed, 4 retried, 6 retries

transaction type: tpcb-no-select.sql

scaling factor: 1

query mode: simple

number of clients: 8

number of threads: 8

maximum number of tries: 10

duration: 60 s

number of transactions actually processed: 739

number of failed transactions: 0 (0.000%)

number of serialization failures: 0 (0.000%)

number of deadlock failures: 0 (0.000%)

number of transactions retried: 66 (8.931%)

total number of retries: 102

latency average = 647.771 ms

latency stddev = 1310.887 ms

initial connection time = 342.022 ms

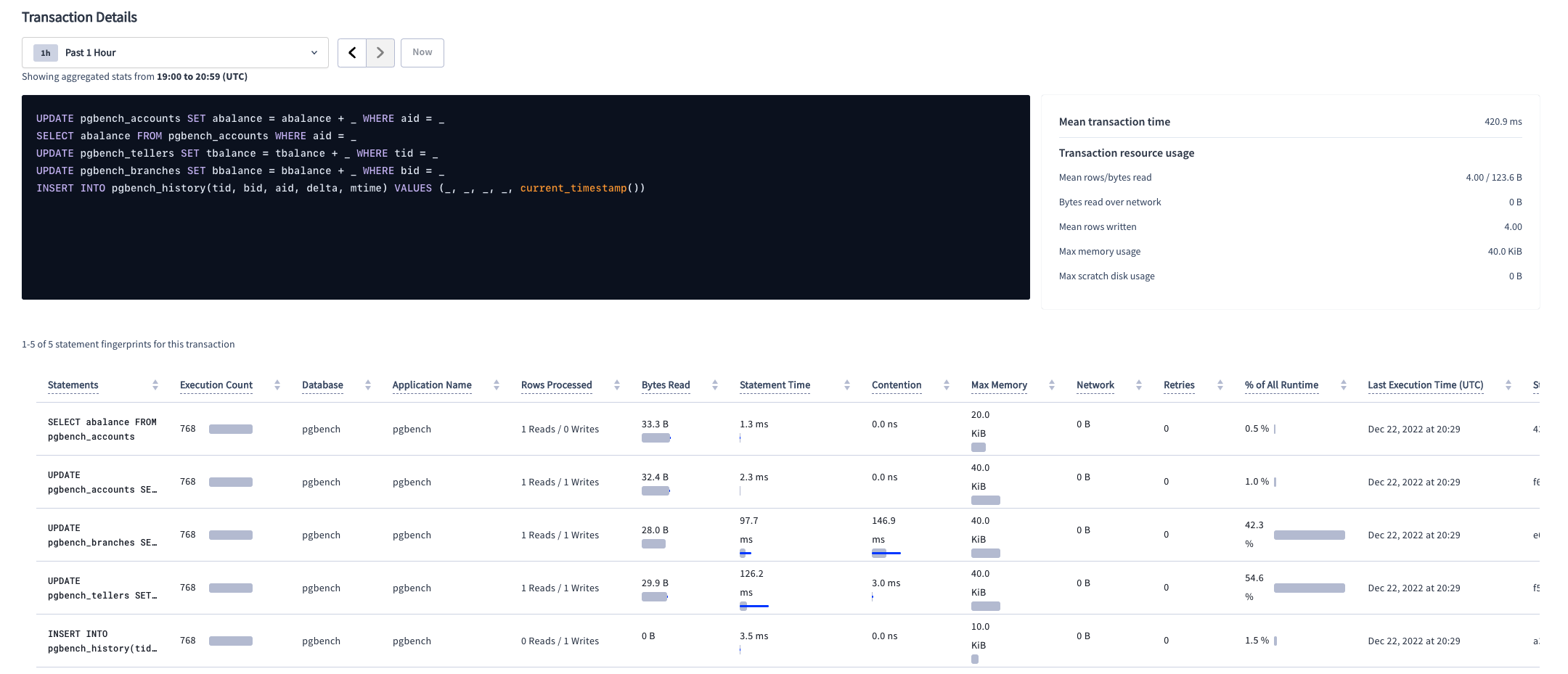

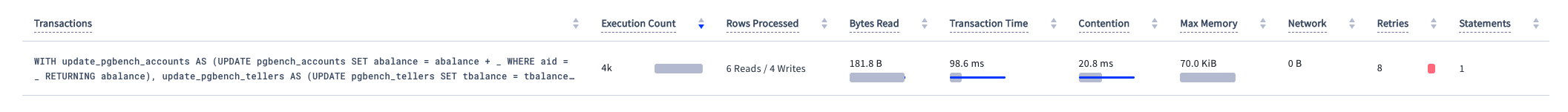

tps = 12.272662 (without initial connection time)Though it is impossible to tell from the poor results compared to the previous run, here's the transaction time after this batch

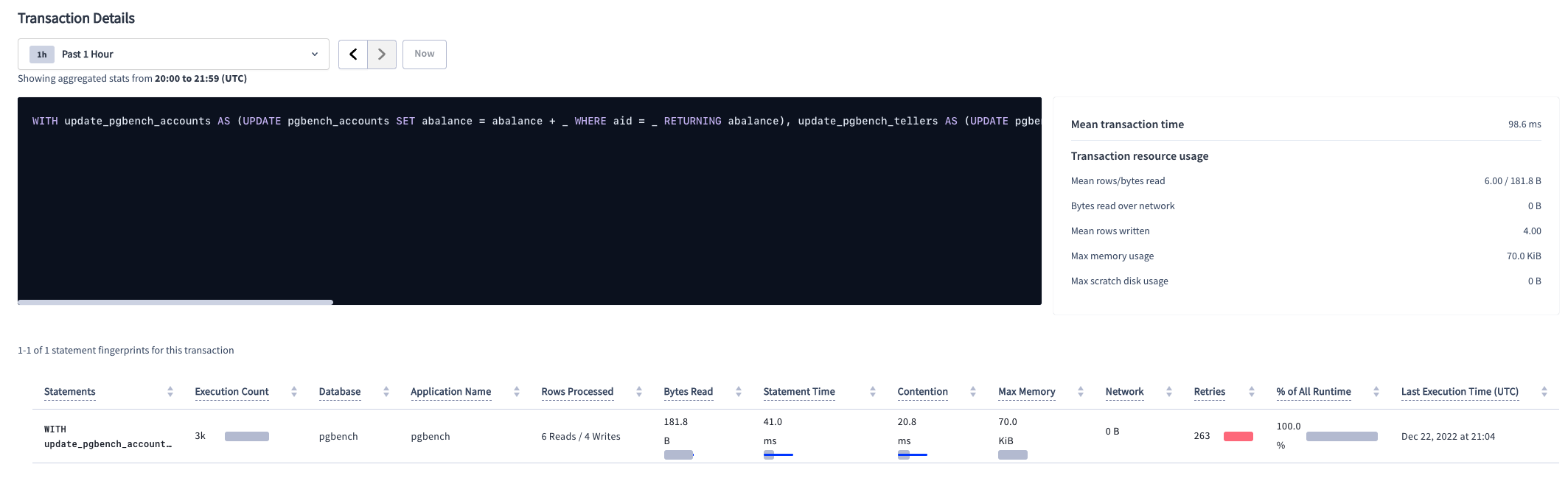

We reduced the time a bit. Also notice that our UI shows four statements now, not five.

The next thing we are going to do is convert the entire explicit transaction into a single implicit transaction. The benefit here will be that the entire transaction will be treated as a whole and sent to the cluster as a single batch in a single network round trip. One way you can do it is by using a common table expression or CTE for short.

We can take the four remaining statements and rewrite them as a single CTE:

WITH

update_pgbench_accounts AS

(UPDATE pgbench_accounts SET abalance = abalance + :delta WHERE aid = :aid RETURNING abalance),

update_pgbench_tellers AS

(UPDATE pgbench_tellers SET tbalance = tbalance + :delta WHERE tid = :tid RETURNING NULL),

update_pgbench_branches AS

(UPDATE pgbench_branches SET bbalance = bbalance + :delta WHERE bid = :bid RETURNING NULL),

insert_pgbench_history AS

(INSERT INTO pgbench_history (tid, bid, aid, delta, mtime) VALUES (:tid, :bid, :aid, :delta, CURRENT_TIMESTAMP) RETURNING NULL)

SELECT abalance FROM update_pgbench_accounts;You may ask why did I not include the BEGIN and END? The reason being CockroachDB operates in serializable isolation and each statement is executed as if it had exclusive access to the cluster. There is no need to treat this transaction explicitly and furthermore, converting it to an implicit transaction allows CockroachDB to retry it implicitly. We do not have to manage to retry externally. I don't even need to pass the --max-tries flag as Cockroach will handle them.

pgbench \

--host=${PGHOST} \

--no-vacuum \

--file=tpcb-cockroach.sql@1 \

--client=8 \

--jobs=8 \

--username=${PGUSER} \

--port=${PGPORT} \

-T 60 \

-P 5 \

--failures-detailed \

${PGDATABASE}pgbench (15.1 (Homebrew), server 13.0.0)

progress: 5.0 s, 66.6 tps, lat 104.636 ms stddev 159.464, 0 failed

progress: 10.0 s, 69.4 tps, lat 109.922 ms stddev 242.839, 0 failed

progress: 15.0 s, 62.4 tps, lat 133.633 ms stddev 327.632, 0 failed

progress: 20.0 s, 37.6 tps, lat 220.530 ms stddev 209.474, 0 failed

progress: 25.0 s, 60.8 tps, lat 123.867 ms stddev 230.953, 0 failed

progress: 30.0 s, 55.2 tps, lat 142.291 ms stddev 354.723, 0 failed

progress: 35.0 s, 56.2 tps, lat 118.442 ms stddev 324.745, 0 failed

progress: 40.0 s, 52.4 tps, lat 151.271 ms stddev 499.161, 0 failed

progress: 45.0 s, 49.4 tps, lat 171.952 ms stddev 632.761, 0 failed

progress: 50.0 s, 50.6 tps, lat 171.422 ms stddev 537.913, 0 failed

progress: 55.0 s, 74.8 tps, lat 116.202 ms stddev 278.388, 0 failed

progress: 60.0 s, 69.6 tps, lat 109.035 ms stddev 282.751, 0 failed

transaction type: tpcb-cockroach.sql

scaling factor: 1

query mode: simple

number of clients: 8

number of threads: 8

maximum number of tries: 1

duration: 60 s

number of transactions actually processed: 3533

number of failed transactions: 0 (0.000%)

number of serialization failures: 0 (0.000%)

number of deadlock failures: 0 (0.000%)

latency average = 135.273 ms

latency stddev = 358.253 ms

initial connection time = 330.761 ms

tps = 59.081567 (without initial connection time)We can see a significant boost in performance compared to the baseline, dare I say 4x? Also, notice there are no serialization errors thrown. Let's see what the UI says:

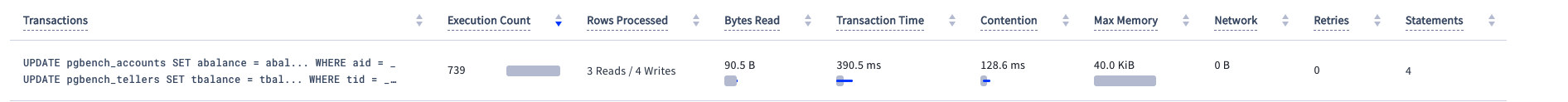

The total transaction time is now 98ms. There were 8 retries thrown and Cockroach handled them internally. The total number of statements is 1.

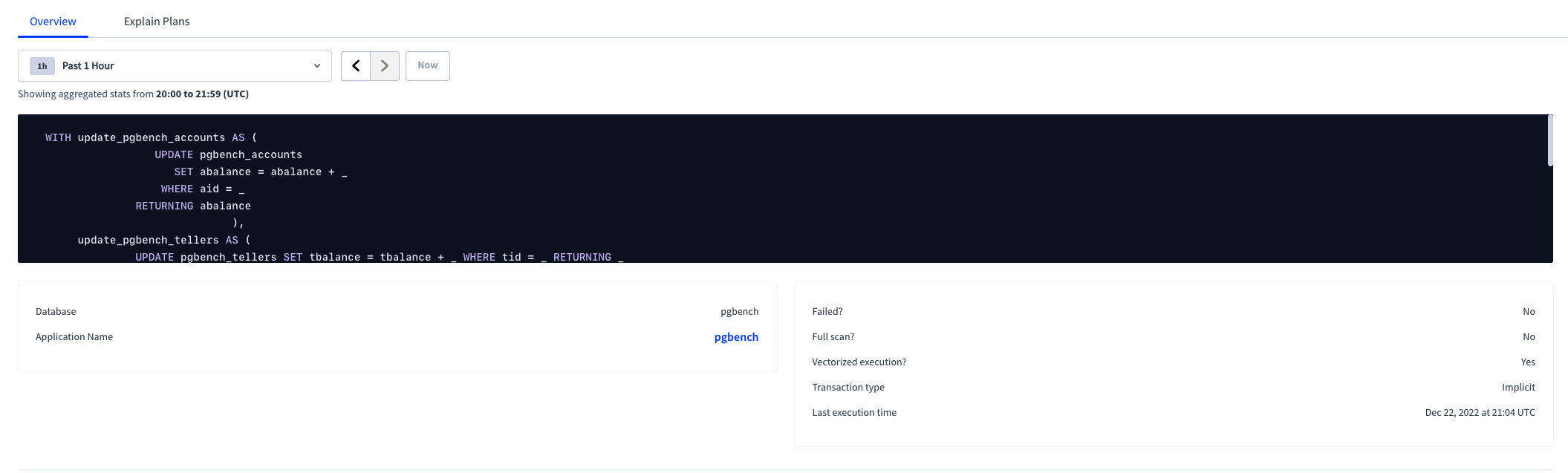

If we click into the statement, we can also confirm it is an implicit transaction.

If we navigate to the explain plans and click on the plan gist, we can view the plan

Finally, let's look at the debug and see what the round-trip execution looks like

pgbench \

--host=${PGHOST} \

--no-vacuum \

--file=tpcb-cockroach.sql@1 \

--client=1 \

--jobs=1 \

--username=${PGUSER} \

--port=${PGPORT} \

-T 1 \

-P 1 \

--failures-detailed \

-d \

${PGDATABASE}pgbench: client 0 executing script "tpcb-cockroach.sql"

pgbench: client 0 executing \set aid

pgbench: client 0 executing \set delta

pgbench: client 0 executing \set tid

pgbench: client 0 executing \set bid

pgbench: client 0 sending WITH

update_pgbench_accounts AS

(UPDATE pgbench_accounts SET abalance = abalance + 78 WHERE aid = 65583 RETURNING abalance),

update_pgbench_tellers AS

(UPDATE pgbench_tellers SET tbalance = tbalance + 78 WHERE tid = 9 RETURNING NULL),

update_pgbench_branches AS

(UPDATE pgbench_branches SET bbalance = bbalance + 78 WHERE bid = 90 RETURNING NULL),

insert_pgbench_history AS

(INSERT INTO pgbench_history (tid, bid, aid, delta, mtime) VALUES (9, 90, 65583, 78, CURRENT_TIMESTAMP) RETURNING NULL)

SELECT abalance FROM update_pgbench_accounts;

pgbench: client 0 receiving

pgbench: client 0 receiving

pgbench: client 0 receiving

pgbench: client 0 receiving

transaction type: tpcb-cockroach.sql

scaling factor: 1

query mode: simple

number of clients: 1

number of threads: 1

maximum number of tries: 1

duration: 1 s

number of transactions actually processed: 18

number of failed transactions: 0 (0.000%)

number of serialization failures: 0 (0.000%)

number of deadlock failures: 0 (0.000%)

latency average = 43.167 ms

latency stddev = 4.644 ms

initial connection time = 266.912 ms

tps = 23.152525 (without initial connection time)And to drive my point home if you're still not convinced, let's look at yet another flag in pgbench, -r which will report per command latencies

Report the following statistics for each command after the benchmark finishes: the average per-statement latency (execution time from the perspective of the client), the number of failures, and the number of retries after serialization or deadlock errors in this command. The report displays retry statistics only if the --max-tries option is not equal to 1.

this is the original:

pgbench \

--host=${PGHOST} \

--no-vacuum \

--file=tpcb-original.sql@1 \

--client=1 \

--jobs=1 \

--username=${PGUSER} \

--port=${PGPORT} \

-T 1 \

-P 5 \

--failures-detailed \

-r \

${PGDATABASE}pgbench (15.1 (Homebrew), server 13.0.0)

transaction type: tpcb-original.sql

scaling factor: 1

query mode: simple

number of clients: 1

number of threads: 1

maximum number of tries: 1

duration: 1 s

number of transactions actually processed: 4

number of failed transactions: 0 (0.000%)

number of serialization failures: 0 (0.000%)

number of deadlock failures: 0 (0.000%)

latency average = 219.822 ms

latency stddev = 3.501 ms

initial connection time = 275.868 ms

tps = 4.545186 (without initial connection time)

statement latencies in milliseconds and failures:

0.004 0 \set aid random(1, 100000 * :scale)

0.002 0 \set bid random(1, 1 * :scale)

0.001 0 \set tid random(1, 10 * :scale)

0.001 0 \set delta random(-5000, 5000)

28.845 0 BEGIN;

36.148 0 UPDATE pgbench_accounts SET abalance = abalance + :delta WHERE aid = :aid;

27.261 0 SELECT abalance FROM pgbench_accounts WHERE aid = :aid;

33.950 0 UPDATE pgbench_tellers SET tbalance = tbalance + :delta WHERE tid = :tid;

32.388 0 UPDATE pgbench_branches SET bbalance = bbalance + :delta WHERE bid = :bid;

29.709 0 INSERT INTO pgbench_history (tid, bid, aid, delta, mtime) VALUES (:tid, :bid, :aid, :delta, CURRENT_TIMESTAMP);

31.515 0 END;We got 4.5 tps and notice the per-statement latencies reported. Let's look at the same query without select

pgbench \

--host=${PGHOST} \

--no-vacuum \

--file=tpcb-no-select.sql@1 \

--client=1 \

--jobs=1 \

--username=${PGUSER} \

--port=${PGPORT} \

-T 1 \

-P 5 \

--failures-detailed \

-r \

${PGDATABASE}pgbench (15.1 (Homebrew), server 13.0.0)

transaction type: tpcb-no-select.sql

scaling factor: 1

query mode: simple

number of clients: 1

number of threads: 1

maximum number of tries: 1

duration: 1 s

number of transactions actually processed: 4

number of failed transactions: 0 (0.000%)

number of serialization failures: 0 (0.000%)

number of deadlock failures: 0 (0.000%)

latency average = 187.979 ms

latency stddev = 9.649 ms

initial connection time = 266.751 ms

tps = 5.316505 (without initial connection time)

statement latencies in milliseconds and failures:

0.004 0 \set aid random(1, 100000 * :scale)

0.001 0 \set bid random(1, 1 * :scale)

0.001 0 \set tid random(1, 10 * :scale)

0.001 0 \set delta random(-5000, 5000)

31.392 0 BEGIN;

32.440 0 UPDATE pgbench_accounts SET abalance = abalance + :delta WHERE aid = :aid RETURNING abalance;

31.329 0 UPDATE pgbench_tellers SET tbalance = tbalance + :delta WHERE tid = :tid;

32.212 0 UPDATE pgbench_branches SET bbalance = bbalance + :delta WHERE bid = :bid;

28.083 0 INSERT INTO pgbench_history (tid, bid, aid, delta, mtime) VALUES (:tid, :bid, :aid, :delta, CURRENT_TIMESTAMP);

32.517 0 END;We got an additional 1 tps gained an additional gain of 27ms, let's now look at the CTE example

pgbench \

--host=${PGHOST} \

--no-vacuum \

--file=tpcb-cockroach.sql@1 \

--client=1 \

--jobs=1 \

--username=${PGUSER} \

--port=${PGPORT} \

-T 1 \

-P 5 \

--failures-detailed \

-r \

${PGDATABASE}pgbench (15.1 (Homebrew), server 13.0.0)

transaction type: tpcb-cockroach.sql

scaling factor: 1

query mode: simple

number of clients: 1

number of threads: 1

maximum number of tries: 1

duration: 1 s

number of transactions actually processed: 17

number of failed transactions: 0 (0.000%)

number of serialization failures: 0 (0.000%)

number of deadlock failures: 0 (0.000%)

latency average = 42.552 ms

latency stddev = 3.151 ms

initial connection time = 305.064 ms

tps = 23.481960 (without initial connection time)

statement latencies in milliseconds and failures:

0.007 0 \set aid random(1, 100000 * :scale)

0.001 0 \set bid random(1, 1 * :scale)

0.001 0 \set tid random(1, 10 * :scale)

0.002 0 \set delta random(-5000, 5000)

42.541 0 WITHTps is 23 vs. 4 and the total latency for the CTE is 42ms.

Hopefully, this gives you an idea of how to improve your legacy apps and work around the serialization errors. Weaker isolation is not always an answer to gaining performance!